Thread:

I've seen many implementations of #eventsourcing that use a message broker to publish events to the read models. I've used that pattern and contributed to ES "frameworks" that implement that pattern.

I think it is a bad idea. I'll explain why:

https://twitter.com/diogojoma/status/1377252320975671298

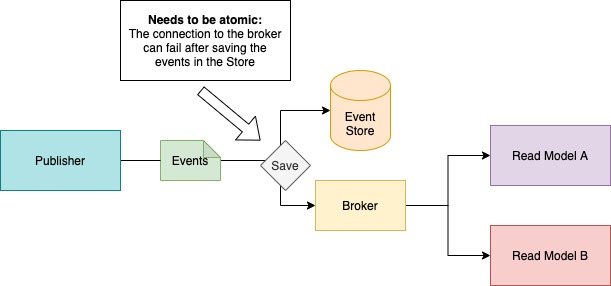

1) Writing to the event store AND publishing events to a broker needs to be atomic.

In most cases a distributed transaction using two-phase commit is not an option.

We need to create some mechanism to deal with faulty connections between publisher and broker:

To reliably/atomically update the event store and publish events to the broker, we can use the Transactional Outbox Pattern:

microservices.io/patterns/data/…

The outbox pattern uses a message relay process to publish the events inserted into the event store to a message broker.

It can be done in two flavours:

a) Use a separate service to handle the relay process allowing a simpler publisher, but requiring a new moving piece.

b) Implement the relay process logic as part of the publisher code. Fewer moving pieces, but it adds complexity to the publisher.

Example: a publisher that retries publishing undelivered events to the broker whenever a new event load request comes in.
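A minimal sketch of flavour b), to make the retry idea concrete. It is an illustration under assumptions, not the code from the thread: `Event`, `FlakyBroker` and `Publisher` are hypothetical names, the event store is an in-memory list, and the `published` flag plays the role of the outbox. In a real database the event and its flag would be written in one local transaction.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Event:
    position: int            # position in the event store
    payload: str
    published: bool = False  # outbox flag: has the broker accepted it yet?


class FlakyBroker:
    """Hypothetical broker client that can be switched offline."""

    def __init__(self) -> None:
        self.online = True
        self.delivered: list[str] = []

    def publish(self, payload: str) -> None:
        if not self.online:
            raise ConnectionError("broker unreachable")
        self.delivered.append(payload)


class Publisher:
    def __init__(self, broker: FlakyBroker) -> None:
        self.broker = broker
        self.store: list[Event] = []  # stands in for the event store table

    def append(self, payload: str) -> None:
        # Event and "unpublished" flag are stored together (one local write).
        self.store.append(Event(len(self.store), payload))
        self.relay()

    def load(self, from_position: int = 0) -> list[str]:
        # Every load request is also a chance to retry undelivered events.
        self.relay()
        return [e.payload for e in self.store[from_position:]]

    def relay(self) -> None:
        # Publish unpublished events in store order and stop at the first
        # failure, so later events never overtake earlier ones.
        for event in self.store:
            if event.published:
                continue
            try:
                self.broker.publish(event.payload)
            except ConnectionError:
                return
            event.published = True
```

Note that this gives at-least-once delivery: if the process dies after the broker accepts an event but before the flag is saved, the event is published again on the next relay, so consumers have to tolerate duplicates.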

Now we have the guarantee that events will always get published to the broker, even when the connection to the broker fails. But this is just the start of the story; there are more problems to solve.

2) Events arriving out of order.

Events may be delivered out of order for different reasons:

- concurrent operations in the publisher may cause events to be sent out of order to the broker.

- even events that are ordered in the broker may be processed out of order by consumers, depending on the consumer topology.

To ensure all events are processed in order by consumers there are two options:

a) ensure events are sent in order to the broker AND ensure events are delivered in order from the broker to the consumers.

b) create a reordering buffer in the consumers.

Option a) can be implemented using a single process to publish events, a broker with a message ordering guarantee, and a single process to consume events (removing concurrency).

Scaling can be done using sharding. Independent event streams can always run in parallel!
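One way to picture the sharding: route each event by a hash of its stream id, so a stream always lands on the same shard and keeps its order, while different streams spread across shards. This is only a sketch; `shard_for` and `partition` are hypothetical helpers, and events are reduced to `(stream_id, sequence)` pairs.

```python
from __future__ import annotations

import hashlib


def shard_for(stream_id: str, shard_count: int) -> int:
    """Map a stream id to a shard; the same stream always gets the same shard."""
    digest = hashlib.sha256(stream_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def partition(
    events: list[tuple[str, int]], shard_count: int
) -> dict[int, list[tuple[str, int]]]:
    """Split (stream_id, sequence) pairs into per-shard queues.

    Arrival order is preserved inside each queue, so one publisher and one
    consumer per shard is enough to keep every stream in order.
    """
    shards: dict[int, list[tuple[str, int]]] = {i: [] for i in range(shard_count)}
    for event in events:
        shards[shard_for(event[0], shard_count)].append(event)
    return shards
```

A stable hash such as SHA-256 is used instead of Python's built-in `hash()`, which is randomized per process for strings and would route the same stream differently after a restart.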

Option b) uses a reordering buffer in the consumers. The buffer can work by:

waiting for previous events to arrive before processing the newest

OR

explicitly requesting the event store to fetch older events if a newer arrives out of order.
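A minimal sketch of the first variant (wait for the missing events), assuming every event carries a contiguous per-stream sequence number. `ReorderBuffer` and its `handler` callback are hypothetical names used only for illustration.

```python
class ReorderBuffer:
    """Holds back events until every earlier sequence number has been handled."""

    def __init__(self, handler, first_sequence=0):
        self.handler = handler               # called once per event, in order
        self.next_sequence = first_sequence  # the sequence number we wait for
        self.pending = {}                    # early arrivals, keyed by sequence

    def receive(self, sequence, payload):
        if sequence < self.next_sequence:
            return  # already handled: drop the duplicate
        self.pending[sequence] = payload
        # Flush the longest run of consecutive events that is now available.
        while self.next_sequence in self.pending:
            self.handler(self.pending.pop(self.next_sequence))
            self.next_sequence += 1
```

The second variant would keep the same bookkeeping but, instead of waiting, ask the event store for the gap between `next_sequence` and the event that arrived early.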

By now we've seen how to ensure all events are published and consumed in order.

We may choose a setup from what was discussed and things will just work.

But then here comes a new challenge:

3) The system is already in production and we want to create a new read model.

The new read model needs to process ALL events from the beginning to create a new persisted state representation.

But the broker does not keep the event history. A broker is just used for message delivery, not for message persistence.

To bootstrap the new read-model we need to create a side-channel in the broker to republish ALL events from the beginning of history to this new read-model

OR

Expose some event store API to the new read-model so it can fetch ALL events directly from the event store.

Whatever option we choose, we just created a new mechanism for event publishing, to bootstrap new read models. Our system now has two modes for event delivery: the "live mode" and a "bootstrap mode" used to create new read-models.

Our system will be something like this:

The last diagram shows the final architecture we need to have in place for end-to-end delivery of events from publisher to read models, supporting intermittent connections and the bootstrap of new read-models.

Now we only need to:

4) support the broker in production.

More infrastructure is not free. For highly scalable services with high availability requirements it brings extra burden:

- clusters and multiple availability zones

- active/active topology

- disaster recovery

- multi environment

- monitoring

- test everything

By now it's clear that throwing a broker into an event sourcing system comes with a lot of costs.

The question we haven't asked so far is:

What is the benefit of having a broker?

My answer is: Probably none.

We don't need a broker to publish events to read-models.

How then?

We just expose an API from the Event Store AND the read-models directly consume the Event Store API.

That's it.

We use the Event Store as a means to pass events to consumers.

Doing it removes every challenge we had with the broker.

No more need for an outbox, events are just committed to the store in a single transaction.

Reordering is not a problem anymore. Read-models just query the event store, passing the last known index position of the stream, and events are returned in order.

Bootstrapping new read-models? It's exactly the same as any regular request to fetch more events. It only has to pass 0 as the starting index of the stream.

Intermittent connections? Read-models recover from the last known stream position as soon as the connection recovers.
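A minimal sketch of that pull model, assuming an event store that exposes a single "read from position" call. `EventStore`, `ReadModel`, `read` and `catch_up` are hypothetical names, and the store is an in-memory list standing in for a real API.

```python
class EventStore:
    def __init__(self):
        self._events = []

    def append(self, payload):
        self._events.append(payload)

    def read(self, from_position, limit=100):
        """Return (position, payload) pairs, in order, starting at from_position."""
        chunk = self._events[from_position:from_position + limit]
        return list(enumerate(chunk, start=from_position))


class ReadModel:
    def __init__(self, store):
        self.store = store
        self.position = 0  # next position to fetch; 0 means "from the beginning"
        self.state = []    # stands in for the persisted projection

    def catch_up(self):
        # The same loop serves live updates, bootstrap and recovery:
        # ask for everything after the last position we have applied.
        while True:
            batch = self.store.read(self.position)
            if not batch:
                return
            for position, payload in batch:
                self.state.append(payload)
                self.position = position + 1
```

A brand new read model is just a `ReadModel` whose `position` is still 0, and a read model that lost its connection simply calls `catch_up()` again once the store is reachable. In a real system `position` would be persisted together with the projection so a restart resumes where it stopped.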

Finally, there is no need to maintain broker infrastructure and all the production support burden it brings.

The architecture of an event sourcing component without a broker is as simple as this:

(every red piece from last diagram removed)

Honestly, I don't know why the adoption of a message broker as a medium for event publishing in event sourcing became so popular.

I commonly see two main arguments for it:

1) scalability: To offload events publishing to a message broker that is designed for high scalability.

With a message broker, the event store (which may be implemented in the system's database) is not hit with many queries from the read models.

While this is a real concern for systems with high throughput needs, using a broker is not the only option on the table.

An event store is actually very easy to scale due to the nature of the data: events are immutable.

Events can be cached forever. It's rather trivial to add a caching layer to the system to scale the reads. I find this option preferable instead of using a broker.
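A rough sketch of such a caching layer, assuming events are read in fixed-size pages. `CachingReader` and the `fetch_page(start, size)` callback are hypothetical; in practice the same idea is often realised with an HTTP cache in front of the event store API.

```python
class CachingReader:
    def __init__(self, fetch_page, page_size=100):
        self.fetch_page = fetch_page  # (start, size) -> list of events
        self.page_size = page_size
        self._pages = {}              # page number -> events, never expires

    def read_page(self, page_number):
        if page_number in self._pages:
            return self._pages[page_number]
        events = self.fetch_page(page_number * self.page_size, self.page_size)
        if len(events) == self.page_size:
            # A full page can never change because events are immutable,
            # so it is safe to cache it with no expiry.
            self._pages[page_number] = events
        return events
```

Only the last, partially filled page ever reaches the event store more than once; everything older is served from the cache no matter how many read models ask for it.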

The second argument I see to use a broker is:

2) (near) real-time updates of read-models.

This argument fails, in my opinion, in two ways. First, most of the time the latency requirement can easily be met by having the consumers poll the event store.

And second, I find the underlying rationale behind this (near) real-time requirement a paradox.

The paradox is the following: if the system was designed to use asynchronous updates of read-models it was a design decision to have a given set of properties from the system.

Whenever we choose to update read models asynchronously, the whole system needs to take into account that the WRITE model and READ models can be inconsistent.

The system should be PREPARED to deal with out-of-date read models. Eventual consistency is a design choice.

Given that the system is supposed to deal with out-of-date read models, the need for real-time updates is something of a paradox.

If real-time updates are a REAL requirement, then asynchronous updates should not be used. Read models should be updated synchronously instead.
