This thread walks you through a concrete example of how an algorithm can learn racism. It uses some math, but only the minimum possible, and has lots of pictures. It is *very* accessible. If that sounds like your thing, read on. 🧵👇

Let's start by learning about statistical bias. Statistical bias is a measure of how far off a guessing algorithm's guesses are on average. It's very straightforward. The bias is the average difference between what an algorithm guesses a value is and what that value actually is. 2/11
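
Here's a minimal sketch of that definition in Python. The numbers and the `bias` helper are made up for illustration; they are not from the sentiment example in this thread.

```python
# Hypothetical guesses and true values, invented for illustration only.
true_values = [2.0, -1.0, 0.5, 3.0]
guesses = [2.5, -0.5, 1.0, 3.5]


def bias(guesses, true_values):
    """Average of (guess - true value) over all examples."""
    diffs = [g - t for g, t in zip(guesses, true_values)]
    return sum(diffs) / len(diffs)


# Every guess here is 0.5 too high, so the bias is 0.5.
print(bias(guesses, true_values))
```

Notice that the result depends entirely on what we plug in as `true_values`. Keep that in mind for later in the thread.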

The example I'm going to talk about is an algorithm that learns how to measure feelings based on text. We call this measurement a "sentiment score". 3/11

A sentiment score of zero is neutral. A positive score means positive feelings and a negative score means negative feelings. The more positive the sentiment score, the more positive the feelings. The more negative the sentiment score, the more negative the feelings. 4/11

In this example, an amazing thing happens. Our algorithm learns racism! It learns that in general people have negative feelings about certain minorities. Many people will claim the algorithm is malfunctioning, but it's not. It seems to be learning people's actual feelings. 5/11

If we think about the definition of statistical bias at the beginning of the thread, it gives us a hint about what our mistake is. 6/11

What's happening is that when we learned feelings from the data, we implicitly defined the "true value" as people's actual feelings. If we don't want racism in the score, then we should have defined it as people's actual feelings excluding racism. 7/11

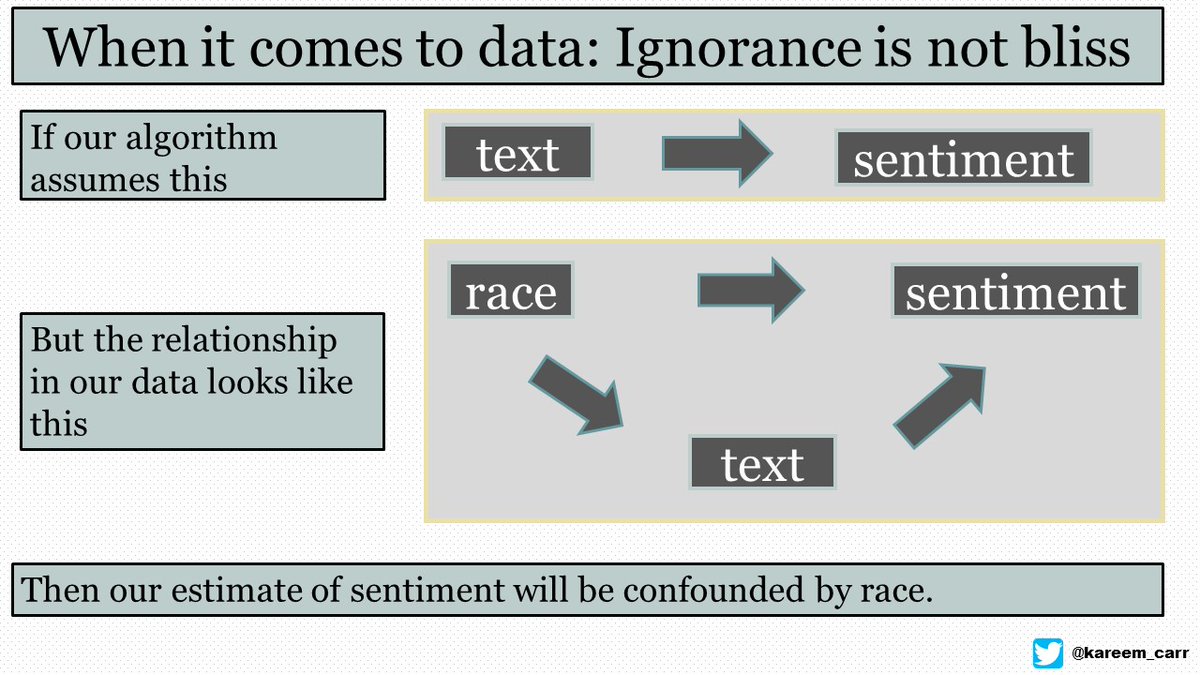

This issue is called confounding. Confounding happens whenever we compare two things and neglect a third variable that could be driving the difference. 8/11

For instance, we might find that Apple users are happier than Windows users and conclude that this is because of their computer choices. But Apple products cost more, so the real reason Apple users are happier might be that Apple users have more money. 9/11
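
Here's a rough simulation of that Apple vs. Windows story. Everything in it is an assumption made up for illustration: the 50/50 income split, the 80%/20% purchase rates, and a happiness score that depends only on money and never on the computer.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

people = []
for _ in range(20000):
    rich = random.random() < 0.5
    # Assumption: richer people are more likely to buy Apple.
    apple = random.random() < (0.8 if rich else 0.2)
    # Assumption: happiness depends on money only, not on the computer.
    happiness = (1.0 if rich else 0.0) + random.gauss(0, 1)
    people.append((rich, apple, happiness))


def mean(xs):
    return sum(xs) / len(xs)


def gap(rows):
    """Average happiness of Apple users minus that of Windows users."""
    apple = [h for _, a, h in rows if a]
    windows = [h for _, a, h in rows if not a]
    return mean(apple) - mean(windows)


raw_gap = gap(people)                               # ignores income
rich_gap = gap([p for p in people if p[0]])         # richer people only
poor_gap = gap([p for p in people if not p[0]])     # poorer people only

print(f"everyone: {raw_gap:.2f}")
print(f"rich only: {rich_gap:.2f}")
print(f"poor only: {poor_gap:.2f}")
```

Comparing everyone at once shows a clear happiness gap in favor of Apple users, even though the computer has no effect at all in this simulation. Comparing people within the same income group makes the gap shrink to roughly zero.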

When people collect data and blindly learn whatever relationships are in the data, they can never be sure that what they're learning is what they intend to learn. They're implicitly making potentially false assumptions about the causal relationships in the data. 10/11

This is why it's extremely important to understand the relationships in the data and why "learning from the data" or being "data-driven" isn't enough when your data doesn't come from real experiments that were designed to generate the right kind of information. 11/11

This kind of long-form content takes extra work so if you like it and want to show support, like and retweet the thread, and give me a follow! 🙃

⚠️ I wanted to clarify that E[x] is statistics notation for the average of x. The E stands for the “expected value”. So E[x] is the “expected value of x”. We say “expected” because the average is basically what you should expect if you try something lots of times.
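
If it helps, here's a tiny sketch of the "try something lots of times" idea, using a fair six-sided die as a made-up example (it is not part of the sentiment example).

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

# E[x] for a fair die: each face 1..6 is equally likely.
expected = sum(range(1, 7)) / 6  # 3.5

# Roll the die many times and take the plain average.
rolls = [random.randint(1, 6) for _ in range(100000)]
average = sum(rolls) / len(rolls)

print(expected, average)
```

The average of the rolls lands close to 3.5, which is the value E[x] tells you to expect.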
