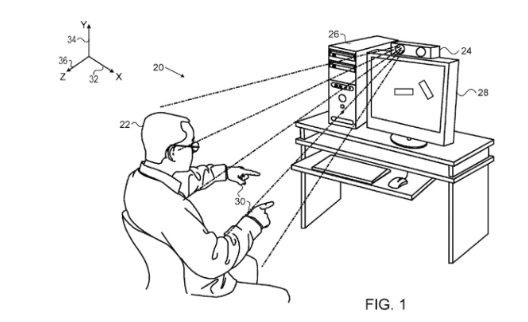

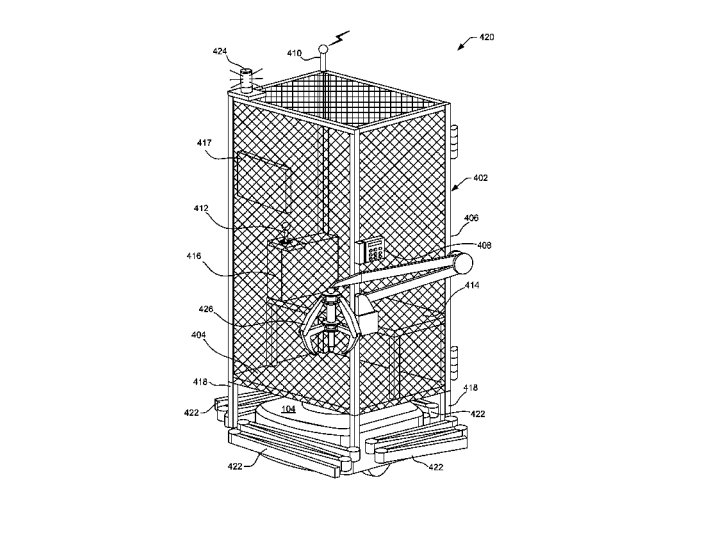

I have a piece in @nature today on the urgent need to regulate emotion recognition tech. During the pandemic, this tech has been pushed further into schools and workplaces. We should reject the phrenological impulse, where unverified systems are used to interpret inner states.

https://twitter.com/nature/status/1379455392904843265

There's lots to read on this topic: see today's article with @luke_stark @EvanSelinger onezero.medium.com/a-i-cant-detec…

And a new thesis by @damicli (PDF): lirias.kuleuven.be/retrieve/540729

And I have a chapter on the history of these tools in #AtlasofAI

I'm joining the call from many scholars: it's time for a national regulatory agency with a wide scope. See @rcalo on robotics brook.gs/3t96gYs

And Erik Learned-Miller, @jovialjoy, @bluevincent, and @jamiemorgenste1 on facial recognition: bit.ly/3uwSbEv
