Lots of selected thoughts on the draft leaked EU AI regulation follow. Not a summary but hopefully useful. 🧵

Exemptions for blacklisted Art 4 AI (except general scoring) include state use for public security, including by contractors. Tech designed to ‘manipulate’ ppl ‘to their detriment’, to ‘target their vulnerabilities’ or profile comms metadata in an indiscriminate way is v possible for states.

This is clearly designed in part not to eg further upset France in the La Quadrature du Net case, where black box algorithmic systems inside telcos were limited. Same language as CJEU used in Art 4(c). Clear exemptions for orgs ‘on behalf’ of state to avoid CJEU scope creep.

Some could say that by allowing a pathway for manipulation technologies to be used by states, the EU is making a ‘psyops carve out’.

Given this regulation applies to putting AI systems on the market too, it’s unclear to me how Art 4(2) would work for vendors who are in the EU, sell these systems to the public sector, but don’t yet have a customer. Could be drafted more clearly.

Article 8 considers training data. It only applies when the actual resultant system is high risk. It does not include risks from experimentation on populations or similar through infrastructures to train them. AI systems that don’t pose use harms can still pose upstream harm.

Article 8(8) introduces a GDPR legal basis for processing special category data strictly necessary for debiasing. @RDBinns and I wrote about this challenge back in 2017. Some national measures had similar provisions already eg UK DP Act sch 1 para 8 journals.sagepub.com/doi/10.1177/20…

Article 8(9) also extends dataset style provisions mutatis mutandis to eg expert systems and federated learning/multiparty computation, which is sensible.

Logging requirements are interesting in Article 9, and important. The Police DP Directive has similar, and they matter. @jennifercobbe @jatinternet @cnorval have usefully written on decision provenance in automated systems here export.arxiv.org/pdf/1804.05741

User transparency for high risk AI systems resembling labels in other sectors in Art 10. Some reqs on general logics and assumptions but nothing too onerous. You’d expect most of this to be provided by vendors in most sectors already to enable clients to write DPIAs

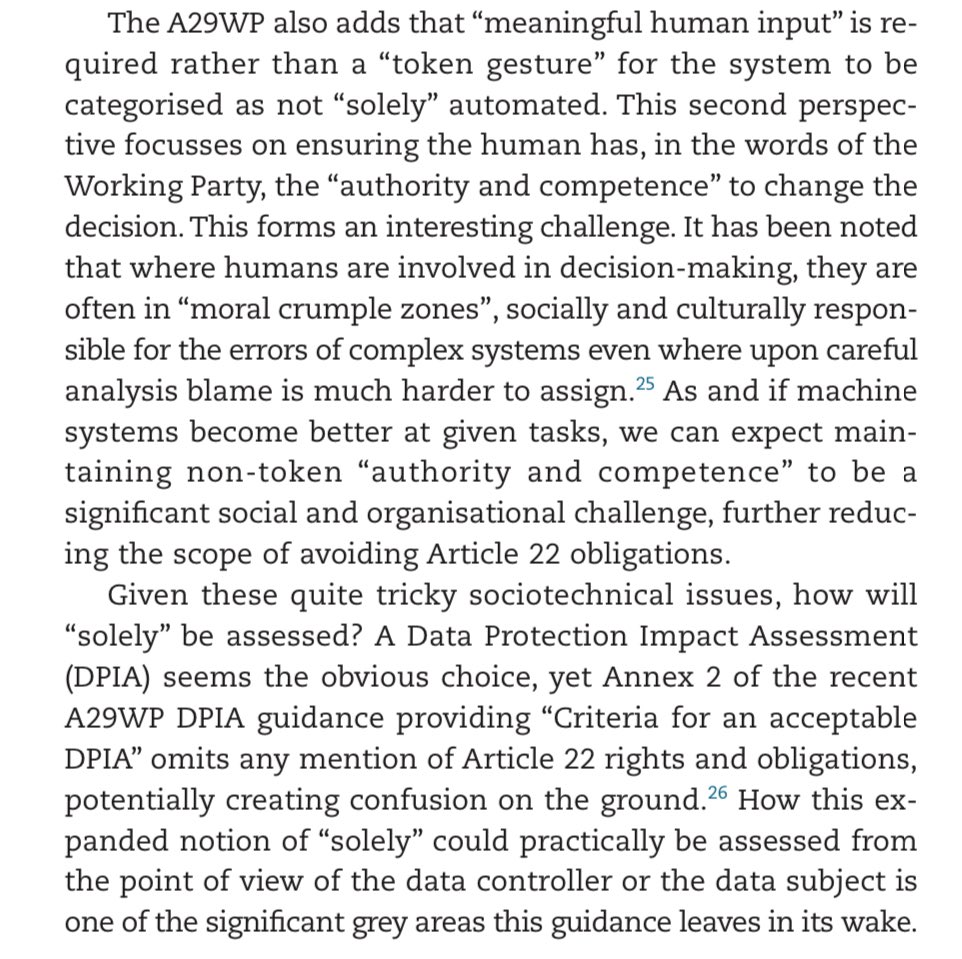

Art 11(c) is interesting, placing organisational requirements to ensure human oversight is meaningful. It responds clearly to @lilianedwards and me in 2018 commenting on the A29WP ADM guidelines. [...]

In that paper (sciencedirect.com/science/articl…) we pointed out that ensuring ‘authority and competence’ was an organisational challenge.

(I elaborated on this with @InaBrass in an OUP chapter on Administration by Algorithm, pointing out the accountability challenges of such organisational requirements for authority and competence) michae.lv/static/papers/…

General obligations for robustness and security in Article 12. Does not cover issues of model inversion and data leakage from models (see @RDBinns @lilianedwards and myself linked, I will stop gratuitous self plugging soon sorry) see royalsocietypublishing.org/doi/10.1098/rs…

The logging provision has a downside. Art 13 obliges providers to keep logs. This assumes they are run as a service, with all the surveillance downsides @sedyst and @jorisvanhoboken have laid out in Privacy After the Agile Turn osf.io/preprints/soca…

Importer obligations in article 15 seem particularly difficult to enforce given the upstream nature of these challenges.

Monitoring obligations for users are good but quite vague and don’t seem to impose very rigorous obligations

I won’t go in detail into the conformity assessment apparatus which is seen in other EU law areas. Suffice to highlight a few things. Firstly, the Article 40 registration database is useful for journalists and civil society tracking vendors and high risk systems across Europe

Some have already studied using other registration databases for transparency in this field (eg @levendowski papers.ssrn.com/sol3/papers.cf…) but that was with trademark law, so clearly flawed compared to a registration database of actual high risk AI systems.

Also, there are several parts where conformity is assumed under certain conditions. See eg 35(2) which seems to assume all of Europe is the same place for phenomena captured in data. Wishful thinking! Ever closer data distribution.

Article 41 applies to all AI systems

- notification requirements for if you’re talking to a human-sounding machine (@MargotKaminski this was in CCPA too? I think?).

Important given Google’s proposed voice assistant as robotic process automation thing, calling up restaurants etc

Article 41(2) creates a notification requirement for emotion recognition systems (@damicli @luke_stark @digi_ad). This is important as some might (arguably) not trigger GDPR if designed using transient data (academic.oup.com/idpl/article-a…)

Disclosure obligations for deep fake users (cc @lilianedwards @daniellecitron) — but with what penalty? Might stop businesses but regime likely flounders against individuals.

EC moved from facial recognition ban to authorisation system. This is all very much in draft and could still disappear I bet. ‘Serious crime’ not ‘crime’ requirement will be a sticking point with member states. Not much point analysing this until it’s in the proposed version.

Article 45 claims to reduce burdens on SMEs by giving them some access to euro initiatives like a regulatory sandbox (Art 44). There’ll be a big push to make this a scale based regime of applicability as these aren’t many concessions.

Interesting glimpse of something in another piece of unannounced regulation “Digital Hubs and Testing Experimentation Facilities”. Could be interesting. Keep eyes out.

Not another board! And this time with special provision to presumably grandfather in the EU HLEG on AI as advisors under Article 49. Nice deal if you can get it. My criticism of that group here: osf.io/preprints/lawa…

The post market monitoring system could be interesting. But heavily up to providers to determine how much they will do (ie next to none). Could also be used to say ‘we have to deliver this as an API, we can’t give it to you’. More pointless servitisation.

Article 59 presents a weird regime saying that if a member state still thinks a system presents a risk despite being in compliance, they can take action. This could be abused (eg freedom of expression) but there are checks built into it with the Commission.

And of course the list of high risk AI in full (can be added to by powers in the reg). Hiring, credit, welfare, policing and tech for the judiciary are all notable. Very little that is delivered by the tech giants as part of their core businesses, that’s clearly in DSA/DMA world
