Deploying with @fastdotai isn't always `learn = load_learner()` followed by `learn.predict()`. There are many scenarios where you might want only part, or none, of the API and of the library as a whole. In this thread we'll explore your options, how they work, and what to do: 1/n

Ideally we have the following context:

DataBlock -> DataLoaders -> Model -> Learner -> Train

This can then stem off to a few things:

1. learn.export() -> Model and DataLoaders (which are now blank) ...

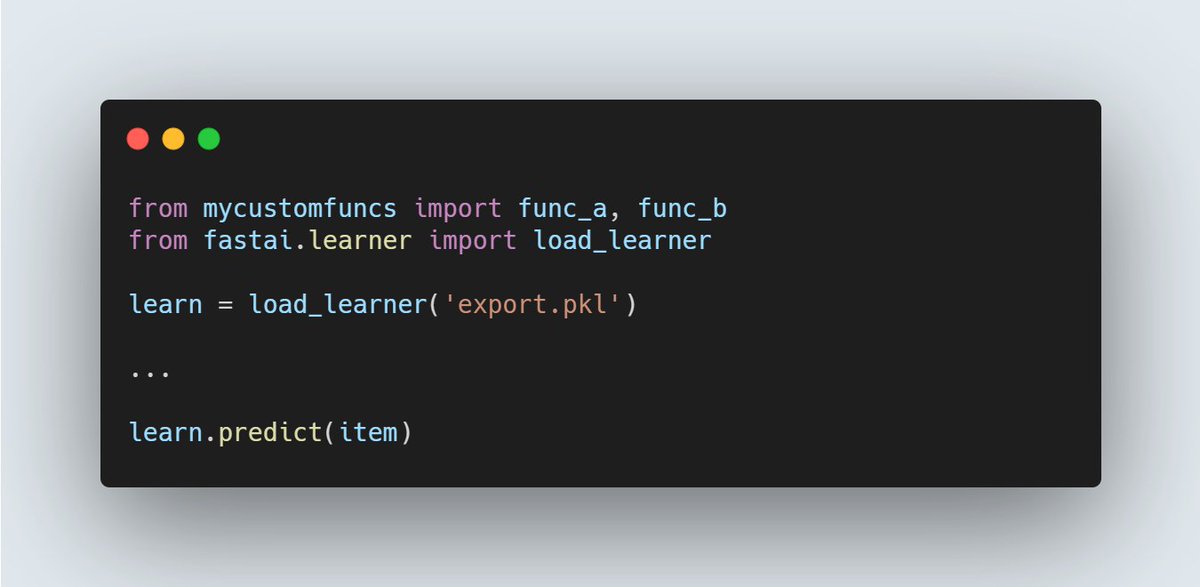

In this scenario, we need to ensure that ALL functions used with the data are imported before loading the Learner. This can cause issues with FastAPI and other platforms when the Learner is loaded in a multi-process fashion 3/
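Why do the functions have to be importable? As far as I know, `learn.export()` saves the Learner with `torch.save`, which relies on Python's pickle, and pickle stores a function as a reference (module + name) rather than as code. The stdlib-only sketch below reproduces that failure mode without fastai. `my_custom_funcs` and `get_label` are hypothetical stand-ins for your own helper file and labelling function, and the pickled dict stands in for an exported Learner.

```python
import pickle
import sys
import types


def make_helpers_module():
    # Hypothetical stand-in for a file like my_custom_funcs.py holding the
    # custom functions your DataBlock used (here, a labelling function)
    mod = types.ModuleType("my_custom_funcs")
    exec("def get_label(fname):\n    return fname.split('_')[0]\n", mod.__dict__)
    return mod


def try_load(blob):
    # Mimics load_learner: unpickling looks each function up by module + name
    try:
        return pickle.loads(blob)
    except (ModuleNotFoundError, AttributeError) as err:
        return err


# "Training" side: the module is importable, so the function can be pickled
sys.modules["my_custom_funcs"] = make_helpers_module()
exported = pickle.dumps({"label_func": sys.modules["my_custom_funcs"].get_label})

# "Deployment" side where the helpers were never imported: loading fails
del sys.modules["my_custom_funcs"]
missing = try_load(exported)

# Import (here: re-register) the helpers first, and loading succeeds
sys.modules["my_custom_funcs"] = make_helpers_module()
restored = try_load(exported)

print(type(missing).__name__)                # ModuleNotFoundError
print(restored["label_func"]("cat_01.jpg"))  # cat
```

The practical takeaway matches the advice above: define functions like `get_label` in their own module before training and exporting, then import that module in every process that calls `load_learner`.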

2. We have my #fastinference library:

learn.to_onnx() -> the model is run through ONNX Runtime (ort), and the DataLoaders (the same blank DataLoaders from 1) get exported.

This still requires that all related functions are in the namespace 4/

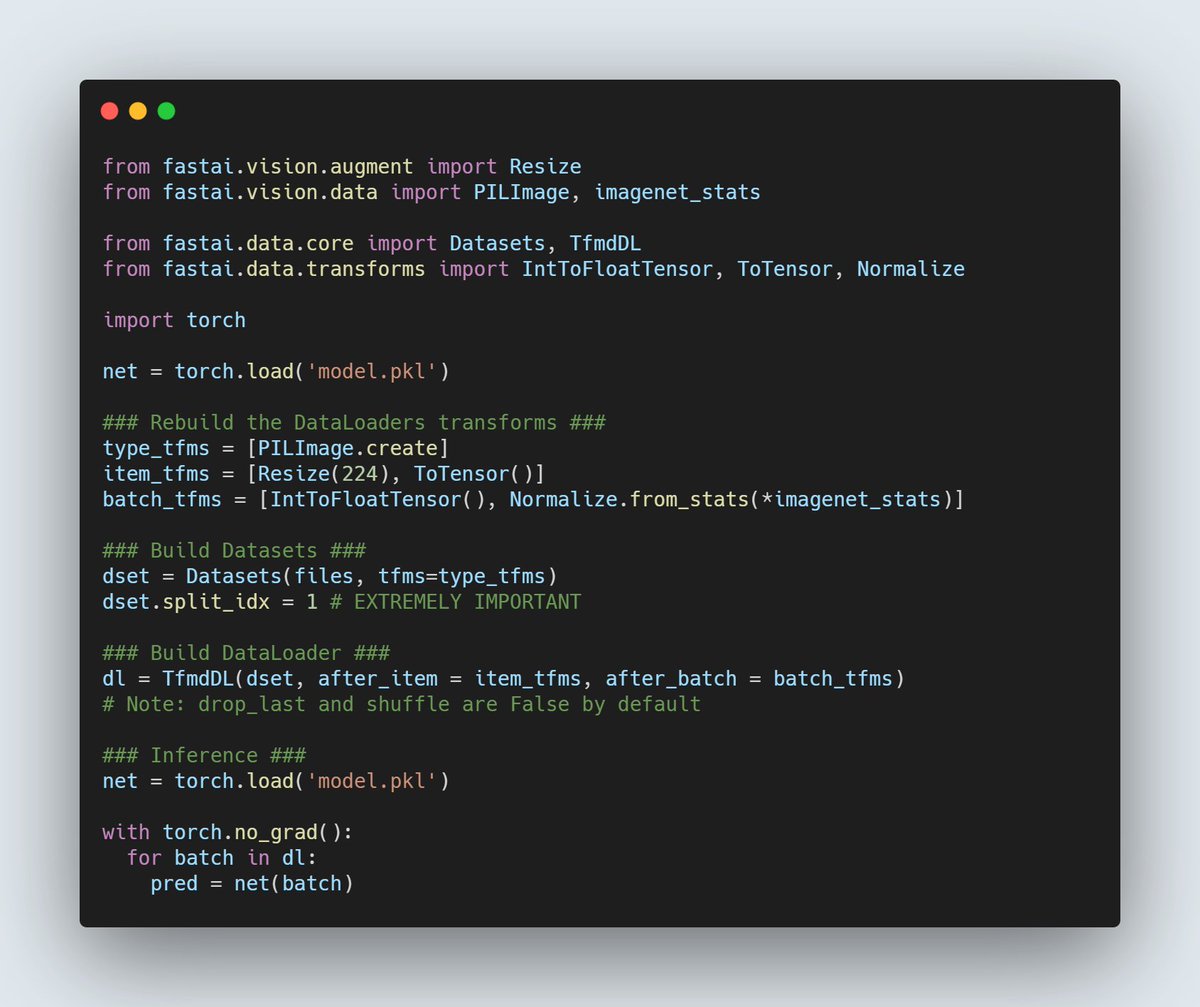

3. We can simply save and load the raw @PyTorch model and the blank @fastdotai DataLoaders (depicted below): 5/

Now if we want to REMOVE @fastdotai altogether, here are some basics to know:

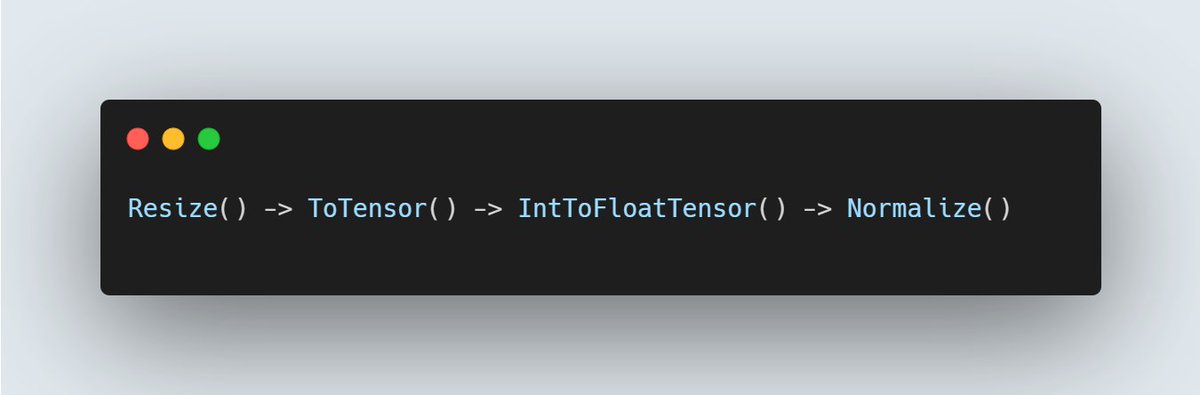

1. fastai's `test_dl`, `predict`, etc. use the validation transforms

2. As a result, crops are center crops, and at most your transform pipeline is: 6/
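Because inference uses the validation transforms, crops come from the center of the image rather than from a random position. A minimal NumPy sketch of that step for an HWC image, assuming the image was already resized so both sides are at least `size`. As I understand it, fastai's own `Resize` crops the largest centered region with the target aspect ratio and then resizes, so treat this as an approximation; `center_crop` is a hypothetical helper, not a fastai function.

```python
import numpy as np


def center_crop(img: np.ndarray, size: int) -> np.ndarray:
    """Crop a centered size x size window from an HWC image array.

    Assumes the image has already been resized so both sides are >= size.
    """
    h, w = img.shape[:2]
    if h < size or w < size:
        raise ValueError(f"image {h}x{w} is smaller than crop size {size}")
    top = (h - size) // 2   # same offset every call: no randomness at inference
    left = (w - size) // 2
    return img[top:top + size, left:left + size]


img = np.arange(6 * 8 * 3, dtype=np.uint8).reshape(6, 8, 3)
crop = center_crop(img, 4)
print(crop.shape)  # (4, 4, 3)
```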

When deploying we can then have at most 3 situations:

1. Full @fastdotai Learner: you need to move any custom functions into a file and import it

2. fastai DataLoaders and raw @PyTorch model

3. Nothing exported from @fastdotai, just raw torch model 7/

And for 3, we look at the transforms that were used (I'm assuming an image context here; for tabular and text I'd just recommend 2) and do the following, with one VERY important note:

ALWAYS set `split_idx` to 1; this ensures your transforms mimic the validation set

10/
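Why `split_idx=1` matters: fastai transforms receive a `split_idx` telling them which set they are on (0 = training, 1 = validation). The pure-Python toy below mimics two behaviours as I understand them from fastai's source: augmentations such as `RandTransform` (whose `split_idx` is 0) only run on the training set, and crop-style resizing centers the crop only when `split_idx` is truthy, so leaving it as `None` gives random crops. `random_flip`, `crop` and `pipeline` are hypothetical stand-ins operating on lists, not fastai's API.

```python
import random
from typing import List, Optional


def random_flip(x: List[int], split_idx: Optional[int]) -> List[int]:
    """Toy augmentation: like a transform with split_idx=0, it is skipped
    unless the pipeline says it is on the training set."""
    if split_idx != 0:
        return x
    return x[::-1] if random.random() < 0.5 else x


def crop(x: List[int], size: int, split_idx: Optional[int]) -> List[int]:
    """Toy crop: centered when split_idx is truthy (validation),
    random position otherwise, including when split_idx is None."""
    if split_idx:
        start = (len(x) - size) // 2
    else:
        start = random.randint(0, len(x) - size)
    return x[start:start + size]


def pipeline(x: List[int], split_idx: Optional[int]) -> List[int]:
    return crop(random_flip(x, split_idx), size=4, split_idx=split_idx)


data = list(range(10))
print(pipeline(data, split_idx=1))  # [3, 4, 5, 6] on every call
```

Calling `pipeline(data, split_idx=None)` repeatedly returns windows at different positions, which is exactly the nondeterminism you don't want in production.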

You can swap `learn.model` (`net`) for any model representation. However, if you're using ONNX, you should also do the following, since ONNX Runtime expects raw NumPy arrays:

11/
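On the ONNX side, ONNX Runtime takes plain NumPy arrays, and the dtype has to match the exported graph's input (float32 for a typical exported model; a float64 array is rejected). A small sketch, assuming the batch has already been moved off the tensor API (for a `torch.Tensor`, `x.detach().cpu().numpy()`); `as_onnx_input` is a hypothetical helper.

```python
import numpy as np


def as_onnx_input(batch) -> np.ndarray:
    """Return a C-contiguous float32 NumPy array ready for ONNX Runtime.

    Assumes `batch` is already array-like (e.g. the result of
    tensor.detach().cpu().numpy()); this sketch only handles the NumPy side.
    """
    arr = np.asarray(batch)
    # Cast to the dtype the exported graph most likely expects and drop any
    # non-contiguous strides left over from slicing or transposing
    return np.ascontiguousarray(arr, dtype=np.float32)
```

The result can then be fed to an `onnxruntime.InferenceSession`, e.g. `sess.run(None, {sess.get_inputs()[0].name: as_onnx_input(batch)})`, where `sess` is a session created from your exported model file.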

Finally, if you don't want any fastai WHATSOEVER (this includes DataLoaders), make sure to mimic what happens in the validation DataLoaders. For images this means center cropping and normalization.

12/
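A minimal NumPy sketch of the rest of a validation-style image pipeline after cropping: scale to [0, 1] (what fastai's `IntToFloatTensor` does), normalize per channel, and reorder to the batched CHW layout PyTorch models expect. It assumes an RGB uint8 image and ImageNet statistics (the values in fastai's `imagenet_stats`); if your DataLoaders were normalized with other stats, swap them in. `preprocess` is a hypothetical helper.

```python
import numpy as np

# ImageNet mean/std (assumed; use the stats your DataLoaders actually used)
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def preprocess(img: np.ndarray) -> np.ndarray:
    """Turn a cropped HWC uint8 RGB image into a normalized 1xCxHxW float32 batch."""
    x = img.astype(np.float32) / 255.0    # 0..255 -> 0..1
    x = (x - MEAN) / STD                  # per-channel normalization (broadcasts over HWC)
    x = x.transpose(2, 0, 1)              # HWC -> CHW
    return np.ascontiguousarray(x[None])  # add a batch dimension
```

No augmentation appears here on purpose: only the deterministic steps the validation set sees are reproduced.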

Anything branded as "augmentation" is almost never done on the validation set (during training) and shouldn't be used in production.

Hope this helps folks! I'll link a few useful libraries I've made for @fastdotai inference below:

13/

fastinference: Can speed up fastai inference and makes some aspects of it a bit more "readable" WITHOUT any changes to the overall API: muellerzr.github.io/fastinference/…

14/

fastinference with ONNX: ONNX wrapper for fastai which operates with situation 2 (fastai DataLoaders, ONNX model) muellerzr.github.io/fastinference/…

15/

fastai_minima: Minimal version of the fastai library. Mostly designed for training, but it has minimal dependencies and all of Learner's capabilities: muellerzr.github.io/fastai_minima/

Thanks for reading!
