Is 10 twice as bad as 5? Sometimes it is, but sometimes it's even worse.

This is the question I always ask myself when deciding how to penalize my models.

Read on for more details and a couple of examples:

↓ 1/11

When we are training a machine learning model, we need to compute how different our predictions are from the expected results.

For example, if we predict a house's price as $150,000, but the correct answer is $200,000, our "error" is $50,000.

↓ 2/11

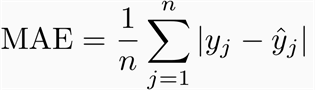

There are multiple ways we can compute this error, but two common choices are:

• RMSE — Root Mean Squared Error

• MAE — Mean Absolute Error

Both of these have different properties that will shine depending on the problem you want to solve.
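Here is a minimal sketch of both metrics in plain Python, just to make the definitions concrete. The function names and the house prices are made up for illustration; in practice you would likely use the versions that ship with your ML library.

```python
import math

def mae(y_true, y_pred):
    """Mean Absolute Error: average of |target - prediction|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root Mean Squared Error: square root of the average squared difference."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical house prices in dollars (illustrative values only).
actual = [200_000, 310_000, 150_000]
predicted = [150_000, 300_000, 160_000]

print(f"MAE:  {mae(actual, predicted):,.2f}")
print(f"RMSE: {rmse(actual, predicted):,.2f}")
```

Notice that the single $50,000 miss pulls RMSE well above MAE, even though the other two predictions are only $10,000 off.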

↓ 3/11

Remember that the optimizer uses this error to adjust the model, so we want to set up the right incentives for our model to learn.

Here, I'd like to focus on one important difference between these two metrics, so you can always remember how to use them.

↓ 4/11

When comparing RMSE and MAE, remember the "squared" portion of the first.

It means that we are "squaring" the difference between the prediction and the expected value.

Why is this relevant?

↓ 5/11

Squaring the difference "penalizes" larger values.

Let's go back to the introduction of this thread where I asked whether 10 was twice as bad as 5, and let's see what happens with one example.

↓ 6/11

If we expect our prediction to be 2, but we get 10, the squared error behind RMSE will be (2 - 10)² = 64.

However, if we get a 5, the squared error will be (2 - 5)² = 9.

64 is definitely much larger than 18 (2 × 9)!
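The arithmetic above can be checked with a few lines of Python. This is only a sketch: `squared_error` is a helper I made up for the example, and it computes the per-prediction squared term, before any averaging or square root.

```python
def squared_error(target, prediction):
    """Squared difference for a single prediction."""
    return (target - prediction) ** 2

target = 2
err_10 = squared_error(target, 10)  # (2 - 10)² = 64
err_5 = squared_error(target, 5)    # (2 - 5)² = 9

print(err_10, err_5)
print(err_10 > 2 * err_5)  # the penalty more than doubles

# Same idea with misses of exactly 5 and 10 units:
miss_5 = squared_error(0, 5)    # 25
miss_10 = squared_error(0, 10)  # 100
print(miss_10 / miss_5)         # doubling the miss quadruples the penalty
```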

↓ 7/11

MAE doesn't have the same property: the error increases proportionally with the difference between predictions and target values.
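To see the contrast, here is a small sketch that prints the absolute and squared penalty side by side for a few misses. The helper name and the values are just for illustration.

```python
def penalties(miss):
    """Return (absolute penalty, squared penalty) for a single miss."""
    return abs(miss), miss ** 2

for miss in (5, 10, 20):
    absolute, squared = penalties(miss)
    print(f"miss={miss:>2}  absolute={absolute:>2}  squared={squared:>3}")

# Doubling the miss doubles the absolute penalty,
# but multiplies the squared penalty by four.
```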

Understanding this is important to decide which metric is better for each case.

Let's see a couple of examples.

↓ 8/11

Predicting a house's price is a good example where $10,000 off is twice as bad as $5,000.

We don't necessarily need to rely on RMSE here, and MAE may be all we need.

↓ 9/11

But predicting the pressure of a tank may work differently: while 5 psi off may be within the expected range, 10 psi off may be a complete disaster.

Here 10 is much worse than just two times 5, so RMSE may be a better approach.
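A quick sketch of why that matters, using two hypothetical sets of pressure errors (in psi) that I made up for this example. Both models are off by 5 psi on average, so MAE can't tell them apart, but RMSE flags the one with the 10 psi misses.

```python
import math

def mae(errors):
    """Mean Absolute Error over a list of signed errors."""
    return sum(abs(e) for e in errors) / len(errors)

def rmse(errors):
    """Root Mean Squared Error over a list of signed errors."""
    return math.sqrt(sum(e ** 2 for e in errors) / len(errors))

# Hypothetical errors in psi (prediction minus actual).
steady_model = [5, -5, 5, -5]   # always 5 psi off
spiky_model = [0, 10, 0, -10]   # often perfect, sometimes 10 psi off

for name, errors in (("steady", steady_model), ("spiky", spiky_model)):
    print(f"{name:>6}: MAE={mae(errors):.2f}  RMSE={rmse(errors):.2f}")
```

If a 10 psi miss is a disaster, the RMSE ranking is the one you want the optimizer to chase.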

↓ 10/11

Keep in mind that there is more nuance to MAE and RMSE.

The way they penalize differences is not the only criterion you should look at, but I have always found this a good, easy way to understand how they may help.

↓ 11/11

If you found this thread helpful, follow me @svpino for weekly posts touching on machine learning and how to use it to build real-life systems.

I always try to hide the math and come up with easy ways to explain boring concepts. If that's your thing, stay tuned for more.
