The EU just published an extensive proposal to regulate AI (100+ pages). What does it says? What does it mean? Here, a short explainer on some key aspects of this proposal to regulate AI. /1 🧵 #AIandEdu #AI

digital-strategy.ec.europa.eu/en/library/pro…

digital-strategy.ec.europa.eu/en/library/pro…

First, what is considered AI in this law proposal? Is linear regression AI? AI is defined in Title I & Annex I. My understanding here is that even a simple linear regression model (technically, a "statistical approach" to "supervised learning") would be considered AI. /2

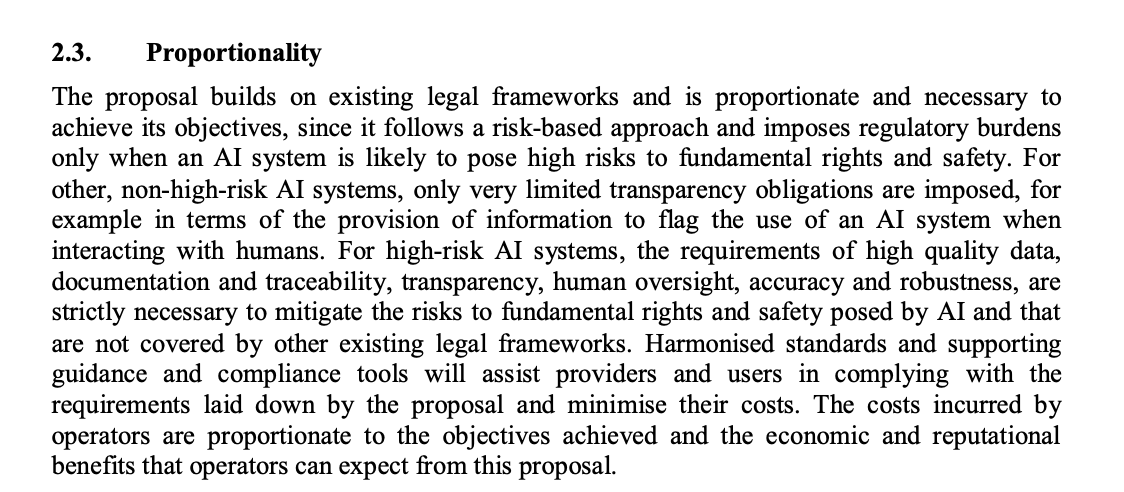

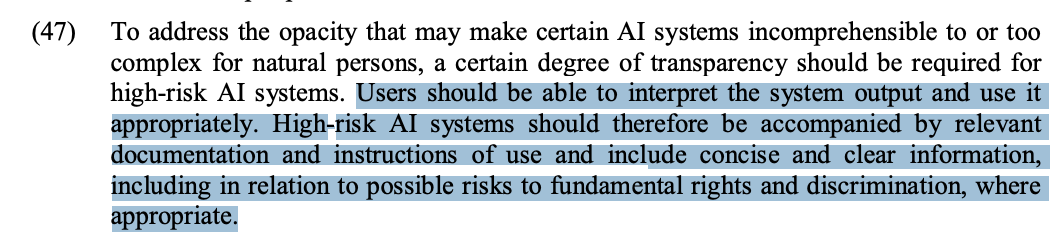

The proposal makes a strong distinction among AI systems based on their application. In fact, it focuses particularly on high-risk systems. These systems would have the highest requirements for transparency, human oversight, data quality, etc.

But what are high-risk systems? /3

But what are high-risk systems? /3

Here we find many examples. First we have remote biometric systems (e.g. public security cameras), no matter if they are real-time or performing biometric identification using previously collected images or video. /4

High-risk systems also include infrastructure management systems. That is, AI systems used in traffic control or the supply of utilities (water, electricity, gas). /5

Systems used in education, for admissions or testing, are also considered high risk, since they may determine the educational and professional course of a person's life. /6

Similarly, AI systems used to recruit or manage workers are considered high risk. The list goes on.

Credit scoring systems, systems used to assign government benefits, systems used in law enforcement, & systems used to deal with migrants & border control, are all high risk. /7

Credit scoring systems, systems used to assign government benefits, systems used in law enforcement, & systems used to deal with migrants & border control, are all high risk. /7

Finally, high risk system include systems intended for the administration of justice and democratic processes.

But how would this regulation affect the development of high-risk AI systems? /8

But how would this regulation affect the development of high-risk AI systems? /8

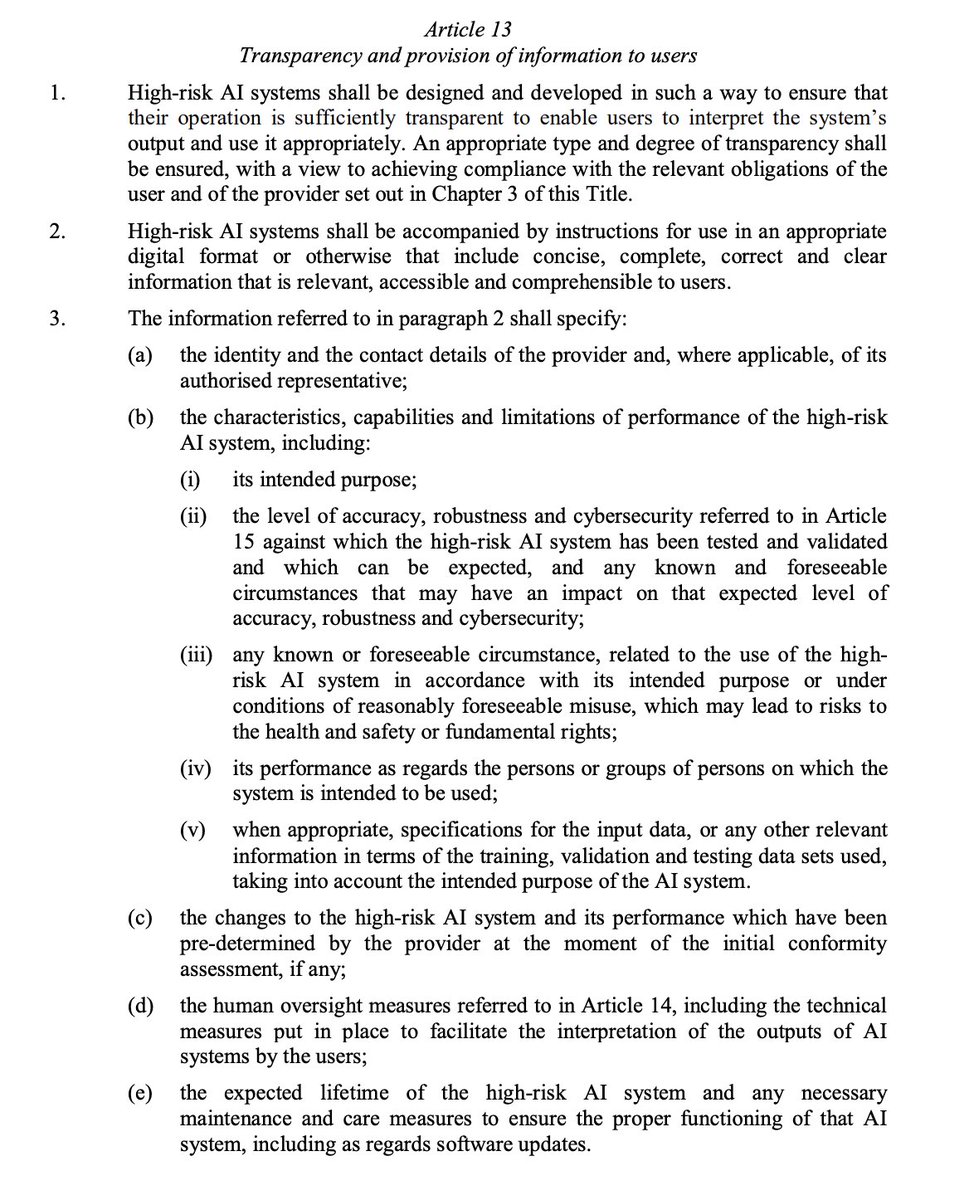

A lot of it involves regulating inputs (e.g. data) and providing documentation & disclosures. E.g. systems should be developed and designed in a way that natural persons can oversee their functioning. Systems should provide documentation and disclaimers. Etc. /9

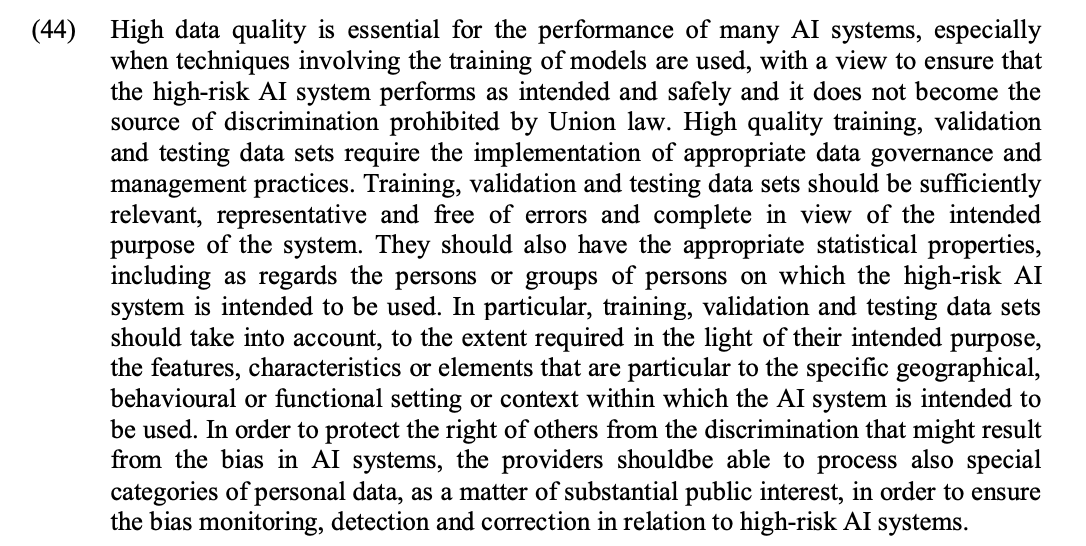

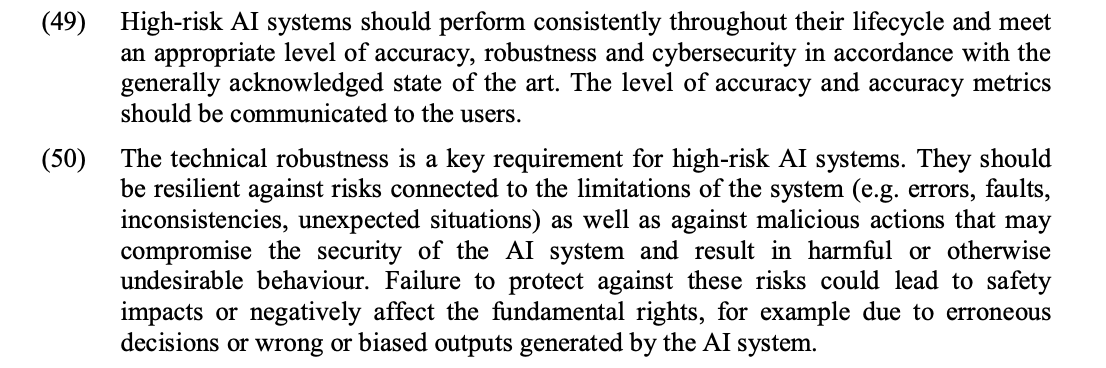

The law also proposes that high-risk systems should be trained on high quality data. High-risk systems also would be required to meet a certain level of accuracy, perform consistently throughout their life, and be robust to attacks. /10

These obligations also are expected to percolate up and down the AI value chain. Providers of software, data, or models, should cooperate--as appropriate--with AI providers and users. /11

High risk systems will also need to include a CE label, to indicate their conformity with the regulation. Yet, in exceptional cases, systems could be deployed without a conformity assessment. /12

But the law also provides the opportunity to test and develop systems within regulatory sandboxes. Think of a "clinical trail" phase for the development of AI. Small scale providers would have priority access to these sandboxes. /13

So what do I see as the immediate impact of such a legislation? The first impact I see would be the creation of a large AI certification industry. The legislation requires AI systems to be deployed together with documentations, disclaimers, and assessments. /14

High risk-systems will need to be registered in a centralized EU database, and also, include post-market monitoring systems. This is a lot of work. So a likely outcome is for large companies to hire specialized firms, or develop in-house teams, to produce such documentation. /15

This will make future AI development more similar to pharma. A consequence of may be that smaller players will need to sell to, or partner with, larger players to exit the sandbox and enter the market (similar to what happens in pharma today). /16

Overall, this is a clearly written & thoughtful document. At the moment, I am still gathering my thoughts on what it means. If you are interested in my views on AI regulation, you can read Chapter 7 of my latest book: How Humans Judge Machines /END judgingmachines.com

• • •

Missing some Tweet in this thread? You can try to

force a refresh