David Blackwell would be turning 102 today.

He's best known for the Blackwell information ordering, the way to formalize when some signals give you more information than other signals.

A thread on Blackwell's lovely theorem and a simple proof you might not have seen.

1/

Blackwell was interested in how a rational decision-maker uses information to make decisions, in a very general sense. Here's a standard formalization of a single-agent decision and an information structure.

2/
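
For reference, here is a minimal sketch of that standard setup, assuming everything is finite. The labels Ω, S, and p (states, signals, prior) are assumptions chosen to sit alongside the φ, A, u, and ω used in the rest of the thread, and may differ from the original notation.

```latex
\begin{align*}
&\text{states } \omega \in \Omega, \quad \text{prior } p \in \Delta(\Omega), \quad
 \text{actions } a \in A, \quad \text{payoffs } u : \Omega \times A \to \mathbb{R}, \\
&\text{information structure } \varphi : \Omega \to \Delta(S), \quad
 \varphi(s \mid \omega) = \Pr(\text{signal } s \mid \text{state } \omega).
\end{align*}
```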

One way to formalize that one info structure, φ, dominates another, φ', is that ANY decision-maker, no matter what their actions A and payoffs u, prefers to have the better information structure.

While φ seems clearly better, is it definitely MORE information?

3/

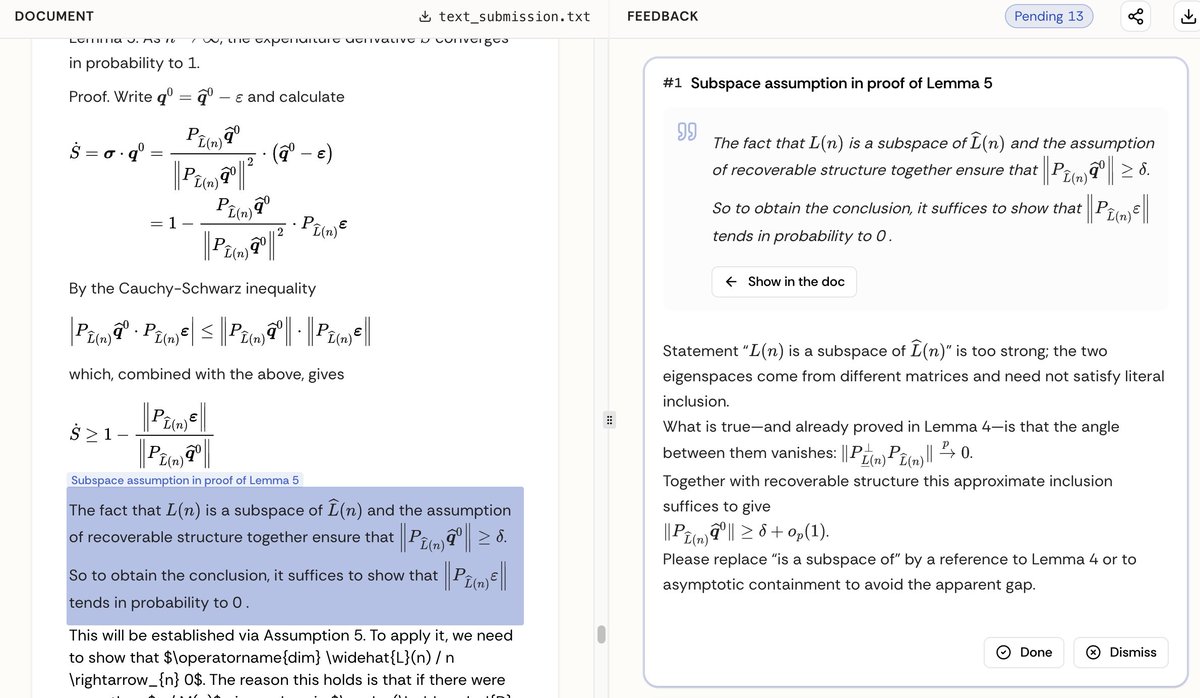

Blackwell found a way to say that it is. That's what his theorem is about. Most of us, if we learned it, remember some possibly confusing stuff about matrices. This is a distraction: here I discuss a lovely proof due to de Oliveira that distills everything to its essence.

4/

We need a little notation and setup to describe Blackwell's discovery: that the worse info structure is always a *garbling* of a better one.

Let's start by defining some notation for the agent's strategy, which is an instance of a stochastic map -- an idea we'll be using a lot.

Stochastic maps are nice animals. You can take compositions of them and they behave as you would expect.

Here I just formalize the idea that you can naturally extend an 𝛼 to a map defined on all of Δ(X). And that makes it easy to compose it with other stochastic maps.
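
To make the extension and composition concrete, here is a small sketch for the finite case. It represents a stochastic map as a dict of dicts, which is just my encoding for illustration; the rain/umbrella example and the function names `extend` and `compose` are hypothetical, not from the original.

```python
from fractions import Fraction as F

def extend(kernel, mu):
    """Apply a stochastic map X -> Δ(Y) to a distribution mu in Δ(X)."""
    out = {}
    for x, px in mu.items():
        for y, pyx in kernel[x].items():
            out[y] = out.get(y, 0) + px * pyx
    return out

def compose(first, second):
    """Return second ∘ first: push each first(x) through the extension of second."""
    return {x: extend(second, dist) for x, dist in first.items()}

# Hypothetical example: an information structure phi (state -> signal)
# followed by a strategy alpha (signal -> action).
phi = {"rain": {"wet": F(3, 4), "dry": F(1, 4)},
       "sun":  {"wet": F(1, 4), "dry": F(3, 4)}}
alpha = {"wet": {"umbrella": F(1)},
         "dry": {"umbrella": F(1, 3), "none": F(2, 3)}}

behavior = compose(phi, alpha)  # state -> distribution over actions
print(behavior["rain"])         # umbrella with prob. 5/6, none with prob. 1/6
```

Composing φ with a strategy gives a map from states straight to distributions over actions, which is the kind of object the rest of the argument works with.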

Okay! That was really all the groundwork we needed.

Now we can define Blackwell's OTHER notion of what it means for φ to dominate φ'.

It's simpler: it just says that if you have φ you can cook up φ' without getting any other information.

7/
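
In symbols, one way to write the garbling condition, assuming finite signal sets S for φ and S' for φ' (these labels are assumptions, not taken from the thread):

```latex
\varphi' = \gamma \circ \varphi
\quad \text{for some stochastic map } \gamma : S \to \Delta(S'),
\qquad \text{i.e.} \qquad
\varphi'(s' \mid \omega) = \sum_{s \in S} \gamma(s' \mid s)\, \varphi(s \mid \omega).
```

The point is that 𝛾 only ever sees the signal s, never the state ω, so it can't add information.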

Blackwell's theorem is that these two definitions (the "any decision-maker" and the "garbling" one) actually give you the same partial ordering of information structures.

"Everyone likes φ better" is equivalent to "you get φ' from φ by running it through a garbling machine 𝛾."

To state and prove the theorem, we need one more definition, which is the set of all things you can do with an info structure φ.

The set 𝓓(φ) just describes all distributions of behavior you could achieve (conditional on the state ω) by using some strategy.
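
A sketch of that definition in symbols, assuming a finite signal set S for φ and writing 𝛼 for a strategy (a stochastic map from signals to actions); the exact notation in the original may differ:

```latex
\mathcal{D}(\varphi) = \{\, \alpha \circ \varphi \;:\; \alpha : S \to \Delta(A) \,\},
\qquad
(\alpha \circ \varphi)(a \mid \omega) = \sum_{s \in S} \alpha(a \mid s)\, \varphi(s \mid \omega).
```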

Now we can state the theorem. We've discussed (1) and (3) already. Point (2) is an important device for linking them, and says that anything you can achieve with the information structure φ', you can achieve with φ.

10/
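
Here is my reconstruction of the three conditions from how (1), (2), and (3) are used in the rest of the thread; treat the wording as a sketch rather than the exact statement. The theorem says they are equivalent.

```latex
\begin{enumerate}
  \item[(1)] $\varphi'$ is a garbling of $\varphi$: $\varphi' = \gamma \circ \varphi$ for some stochastic map $\gamma$.
  \item[(2)] $\mathcal{D}(\varphi') \subseteq \mathcal{D}(\varphi)$ for every action set $A$.
  \item[(3)] For every action set $A$ and payoff function $u$, the decision-maker's best expected payoff with $\varphi$ is at least as high as with $\varphi'$.
\end{enumerate}
```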

de Oliveira's insight is that, once you cast things in these terms, the proof is three trivialities and one application of a separation theorem.

So let's dive in!

…oliveira588309899.files.wordpress.com/2020/12/blackw…

Here we show that (1) ⟺ (2).

(1) ⟹ (2). If φ' garbles φ and you HAVE φ, then just do the garbling yourself and get the same distribution.

(2) ⟹ (1). On the other hand, if φ can achieve whatever φ' can, it can achieve "drawing according to φ'(ω)," and the strategy that does this is the garbling.

(2) ⟹ (3) says if 𝓓(φ) contains 𝓓(φ') then you can do at least as well with φ as with φ': the easiest step.

Note that the agent's payoff depends only on the conditional distribution of behavior given the state. Since all distributions in 𝓓(φ') are available w/ φ, the agent can't do worse.
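
A quick numerical sanity check of this step, as a sketch for one made-up decision problem (the theorem is about all of them). The prior, payoffs, signal names, and the helper functions `garble` and `value` are all hypothetical choices for illustration.

```python
from fractions import Fraction as F

def garble(phi, gamma):
    """Return gamma ∘ phi: pass each signal of phi through the stochastic map gamma."""
    out = {}
    for w, dist in phi.items():
        out[w] = {}
        for s, ps in dist.items():
            for t, pt in gamma[s].items():
                out[w][t] = out[w].get(t, 0) + ps * pt
    return out

def value(phi, prior, u, actions):
    """Best expected payoff: after each signal, take the action with the highest
    (unnormalized) posterior expected payoff, then sum over signals."""
    signals = {s for dist in phi.values() for s in dist}
    total = 0
    for s in signals:
        total += max(
            sum(prior[w] * phi[w].get(s, 0) * u[w][a] for w in prior)
            for a in actions
        )
    return total

prior = {"rain": F(1, 2), "sun": F(1, 2)}
actions = ["umbrella", "none"]
u = {"rain": {"umbrella": 1, "none": 0},
     "sun":  {"umbrella": 0, "none": 1}}

phi = {"rain": {"wet": F(9, 10), "dry": F(1, 10)},
       "sun":  {"wet": F(1, 10), "dry": F(9, 10)}}
# gamma flips the signal with probability 1/4, so phi_prime is a noisier phi
gamma = {"wet": {"wet": F(3, 4), "dry": F(1, 4)},
         "dry": {"wet": F(1, 4), "dry": F(3, 4)}}
phi_prime = garble(phi, gamma)

v = value(phi, prior, u, actions)              # 9/10
v_prime = value(phi_prime, prior, u, actions)  # 7/10
print(v, v_prime, v >= v_prime)
```

Here the agent with φ guesses the state correctly 90% of the time, while the agent with the garbled φ' manages only 70%.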

(3) ⟹ (2) is the step that's not unwrapping definitions.

Suppose (2) were false: then you could get some distribution 𝐝' with φ' that you can't get with φ. The set 𝓓(φ) of ones you can get with φ is convex and compact, so .... separation theorem! Separate 𝐝' from it.

14/

If we state what "separation" means in symbols, it gives us (*) below. But that tells us exactly how to cook up a utility function so that any distribution in 𝓓(φ), one of those achievable with φ, does worse than our 𝐝'. That's exactly what (3) rules out.

15/
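
A sketch of what the separation statement plausibly looks like, treating each distribution of behavior as a vector indexed by pairs (ω, a). The symbol v for the separating vector and p for a full-support prior are assumptions, and the original (*) may be written differently.

```latex
\exists\, v \in \mathbb{R}^{\Omega \times A} \ \text{such that} \quad
\sum_{\omega, a} v(\omega, a)\, \mathbf{d}'(a \mid \omega)
\;>\;
\sum_{\omega, a} v(\omega, a)\, \mathbf{d}(a \mid \omega)
\quad \text{for all } \mathbf{d} \in \mathcal{D}(\varphi).
```

Setting u(ω, a) = v(ω, a) / p(ω) turns both sides into expected payoffs, so a decision-maker with these payoffs strictly prefers φ' to φ.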

That's it!

Happy birthday David Blackwell, and thanks Henrique de Oliveira. Though I am the world's biggest fan of Markov matrices, there's no need to use them for Blackwell orderings once you know this way of looking at things, which gets at the heart of the matter.

16/16

typo! that red arrow label should just be 𝛾

PS/ Tagging in @smorgasborb, who I didn't know was on Twitter and whose fault this all is.

A few typos above that I hope didn't interfere too much w/ exposition of his argument: In 6, the red label was wrong - fixed here. In 13, the first φ' should be φ.

🙏
