Testers could be investigating products to reveal problems that matter to customers and risk that could lead to loss for the business. Yet many testers write scripts to demonstrate that all is okay, or struggle to find locators on a web page. Wondering why testing isn’t valued?

There IS an antidote to all this. It is simple in one sense, but it’s not easy: deliver the goods. When you clearly report problems that matter to managers and developers, they become too busy arguing with each other about how to fix problems before the deadline to hassle you. Or…

…they act like responsible professional people and work things out (sometimes with your help), and thank you for your clear report. Trouble is, there are testers—lots of them—who have been gulled into the idea that their job is to demonstrate that everything is okay. It isn’t.

“It isn’t” refers to two things. 1) Everything isn’t okay, in most projects and products. There are bugs: things about the product that threaten its value to people. That’s because customers have a multitude of needs and desires for any product, and because everyone is fallible.

Whether working together or working alone, people building, commissioning, and managing any product make mistakes; we all do. And people working together have different ideas and assumptions about the goodness of the product, before, while, and after building it. That’s natural.

It’s natural to have some customers and some purposes and some risks in mind, and not others. It’s normal to forget the needs and desires of some customers. And it’s normal to be unaware of lots of ways in which the product doesn’t work in some sense for some people.

Therefore: we must have an abiding faith that the product doesn’t work. We must be confident that, in any product or service, there are problems that matter. And here comes the other sense of “it isn’t”. 2) It isn’t the testers’ job to show that there aren’t problems that matter.

It’s the tester’s job to believe that there are problems that matter to important people, and to seek those problems out. It’s the tester’s job to suspect that there is trouble at hand, just below everyone else’s level of awareness. No one else has that as their primary mission.

It is the tester’s unique role to investigate the product, seeking trouble, in order to find that trouble so that responsible people can deal with that trouble. But there are sets of misunderstandings, misrepresentations, and misdirections about that role, from many sources.

(If you’re aware of all of these misconceptions yourself, no need to feel insulted or threatened or upset by what I’m saying. I’m not talking about you, and I’m not really talking to you. I’d like to be talking with you, and I’d like you to be talking with me.)

The biggest misunderstanding is that the tester’s role is to “make sure that everything works”. That CANNOT be right, for all kinds of reasons. The first, biggest reason is that it is logically impossible to know that there are no problems now and never will be. We know THAT.

We’ve known that at least since Dijkstra said “Testing can show the presence of errors, but not their absence.” But we’ve known for far longer than that that “all swans are white” is not a fact, but an assumption, easily defeated by the observation of a single black swan.

“All the features work” is the precise equivalent in software of “all swans are white” in the wider world. The key difference: incorrect assumptions about the colour of swans won’t cause loss, harm, or damage to people or businesses. (Extra points for plausible scenarios.)

We could not make sure that the product works unless we were aware of every aspect of the product’s behaviour, given every value of every input, ensuring that both valid and invalid inputs were handled properly, on every platform, given every variation of sequence and timing.

But of course, knowing that wouldn’t be enough, because we’d have to be sure that every aspect of the product’s state and behaviour were consistent with every person’s notion of “okay, that works”, now and forever. This is logically impossible. It cannot be done.

Here’s another sense in which testers cannot “make sure that everything works”. If something doesn’t work, we don’t change it to make it work. (The moment we do that, we shift from the tester role to the builder or manager role, and in that moment, testing is suspended.)

Some testers (and developers, and managers) work in flexible roles. That’s fine. But let us remember as we make the role switch into managing or building, we tend not to be super powerful critics of our own work. That’s why people need testers in the first place, isn’t it?

Another misunderstanding related to the first set: "a test that produced a specific result last time, and that is repeated, and that produces the same result this time, shows that nothing is broken". That statement is not supported by logic either, but is rarely questioned.

No test, ever, shows that nothing is broken. No observation of swans, and no observation of non-swans, provides evidence that black swans don't exist. No test (observation of a product) shows that a bug doesn't exist. No repetition of that test can show a bug doesn't exist either.

Ask any programmer or manager "Imagine seeing a clown with a green nose; then another clown with a green nose; then another. Does that prove there are no clowns with red noses?" "Of course not!" Now, replace "clown" with "check", and "nose" with "result". See the problem now?

If you believe that red-nosed clowns are potentially dangerous, the existence of green-nosed clowns is basically irrelevant. You shouldn't really care that the only clowns that you see in your circus at the moment have green noses. You need to look for the red-nosed ones, right?

Trouble is, in many development shops, testers are given the mandate to ask all clowns to assemble, line up, and submit themselves to nose inspection, and upon seeing a line of green noses, the testers declare that everything is okay. This is a lousy way to find red-nosed clowns.

One problem is that red-nosed clowns aren't disposed to line up politely just because you asked them. Another much bigger problem is that in a circus, lots of things can go wrong, and they can go wrong in unanticipated ways. Especially when the performers are highly ambitious.

The job of the tester is not simply to determine when some clown has changed nose colour from green to red overnight, even if a red nose would frighten the little ones. It's to identify any problem that would cause trouble for the audience OR for the people running the circus.

We're running with the analogy; why not go all in? Someone comes along and says to the safety inspector, "I have something that will end your worries. Yes, it's a visual spectrometer. It can determine the precise RGB value of any nose colour. Results in a second! SO efficient!"

The spectrometer can indeed determine the colour of things, but it's fiddly. It has to be directed right at the clown's nose, and the clown has to stand just so, or else the spectrometer will miss the nose altogether, or will misleadingly report that the red wig is a red nose.

There's a lot of work to get the spectrometer and the clowns in alignment with each other so that each day's nose check can be "efficient". That takes time and effort. Meanwhile, no one notices the stripped threads on the trapeze rig and the broken hinges on the tiger cage.

What I see in a lot of places is that testers are assigned to do the software equivalent of checking clowns' nose colours—and because that's a time-consuming and trivial process, the testers are being asked to figure out how to automate it somehow. Here's an example: logging in.

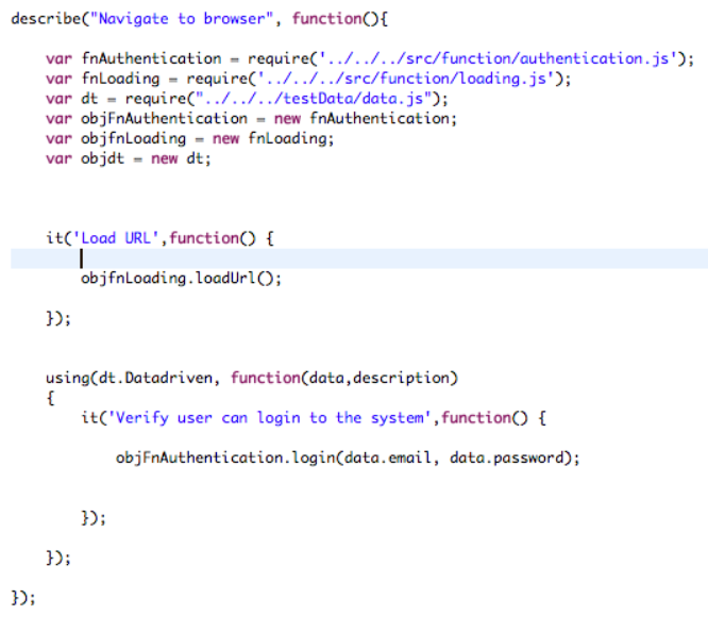

I don't remember where I found this; I'll guarantee you that I didn't write it. But I'll bet that it's a representative example of what's going on in a lot of places. See any problems here? Anything missing?

Here are just a few things: will authorised users face problems logging in? Will they have access to all the resources for which they have permission? Will they be stopped when they try to access forbidden resources? Will the attempted login be logged and tracked properly?

Are there vulnerabilities in the interface that a malicious user can get past? Will error messages reveal information useful to hackers? If a user logs in and does something improper, and then tries to deny it, will there be a record (that's the concept of non-repudiation)?

Can the user log in multiple times simultaneously? Should the user be able to do that? Are failed login attempts tracked? Does an unsuccessful login leave the system in an unstable or insecure state? Is the user appropriately informed of what to do when there's a problem?

These sorts of questions can, and should, go on and on when it's important to do deep testing—as when money or value or health or safety or reputation might be on the line. Yet that kind of testing is disruptive to programmers and to the build process; and it can't be automated.

And it's okay that this kind of testing can't be done at the drop of a hat. We shouldn't expect it to, in the same way that we shouldn't expect an investigative journalism team to present a deep investigation every day, or CERN to overturn the Standard Model in an afternoon.

When products and businesses and serious money and people's well-being are on the line, we want to perform testing to find problems that can elude even a diligent, thoughtful, imaginative, well-disciplined, development team. And let's face it: problems can absolutely do that.

Testing is often undervalued when it is focused on confirming things that well-disciplined development teams have done well and have confirmed already; mostly recapitulating that work; not examining the product; not obtaining direct experience with it; ergo not finding problems.

Then a feedback loop: testers aren't investigating the product deeply for problems; managers don't see the testers investigating; managers don't learn to recognize good, deep testing; managers don't know to require it, nor how to value it; and testers don't provide it. GOTO 0.

To respond to all this, testers frantically offer the coin of the realm: code that operates the product mechanically, and is tricky to write and maintain. Far better, I'd suggest, to use code to probe the product, to generate and manipulate and perturb data to reveal problems.

That kind of code tends, very generally, to be easier to write and maintain; less fiddly; more on point. Moreover, that kind of code tends to be written to help find problems; to exercise the product rather than simply make it jump through the same damned hoop over and over again.

So: to the degree that you write code for testing, prefer code that helps you *engage* with the product over code that *disengages* you from it. Notice how often you find real problems while engaging with the product, observing and interacting with it directly. Track that experience.

Challenge the product, and challenge your understanding of it. Don't focus on the expected; look for the unexpected. Look for problems that emerge after putting well-tested components together. Report things that look strange or confusing or annoying. They'll look bad to users.

Doing all this well requires rich models of how to cover the product with testing; it requires rich models of how people might obtain value from the product, and how that value might be at risk. It requires telling the story of your testing persuasively. developsense.com/blog/2018/02/h…

Most of all, it requires focusing on trouble. Managers need to know the answers to these questions: "Are there problems here? How might they affect people who matter? What problems might threaten the value of the product, or the on-time, successful completion of our work here?"

You could spend the rest of your days trying to figure out XPath syntax for a DOM element that moves around all the time. But bring managers a steady stream of problems that matter, dear testers, and you won't find yourself wondering if you're offering value to the team.
