A thread on the science of stories:

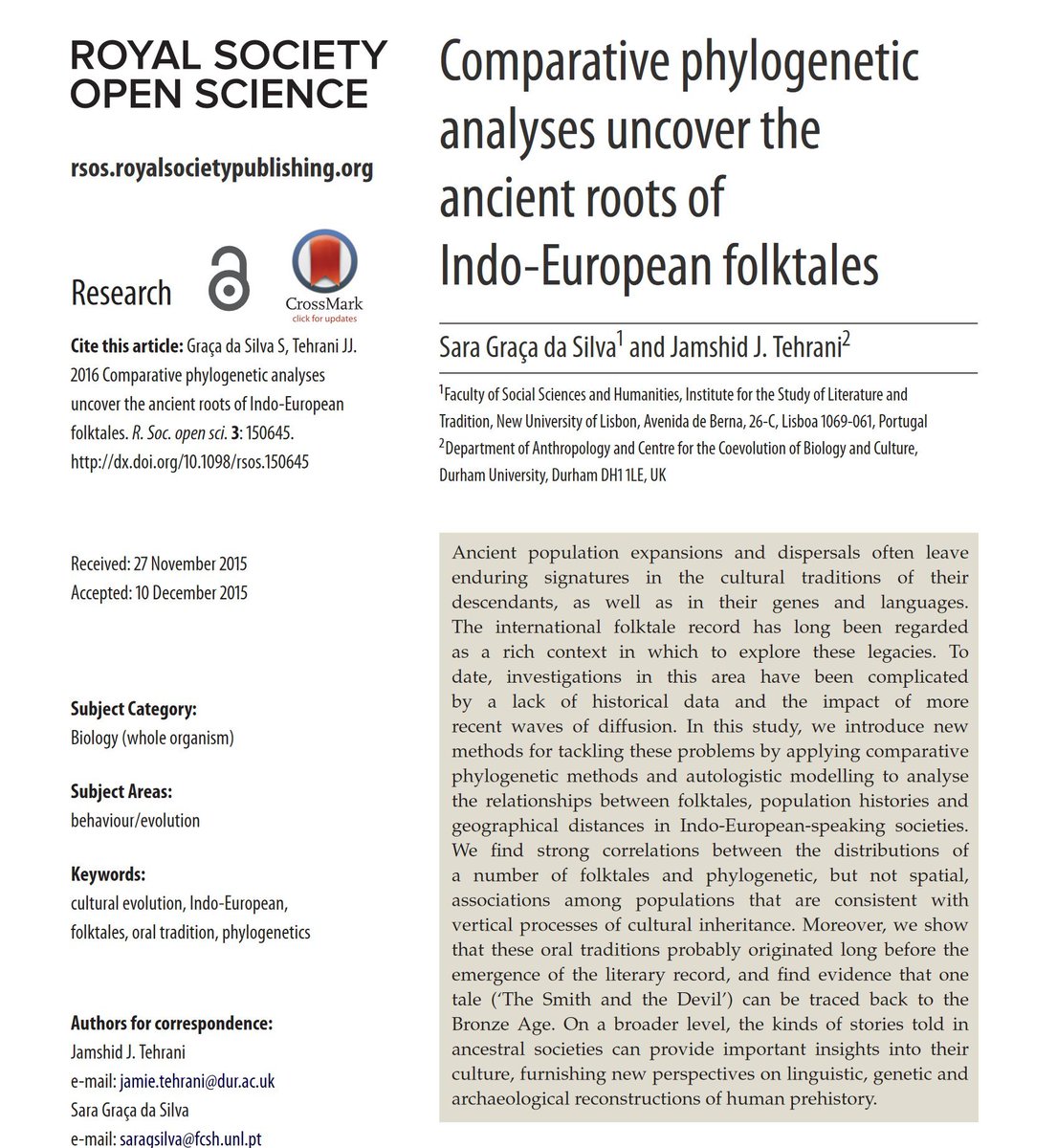

Stories are persistent: this paper traces fairy tales across languages & cultures back to common ancestors, arguing that the oldest go back at least 6,000 years. One of the oldest became the myth of Sisyphus & Thanatos in ancient Greece. 1/

That may be just the start: this paper argues some stories may go back 100,000 years. Many cultures, including Aboriginal Australian & Ancient Greek, tell stories of the Pleiades, the Seven Sisters star cluster, having lost a star. And 100k years ago, the cluster would indeed have shown 7 visible stars rather than today's 6! 2/ dropbox.com/s/np0n4v72bdl3…

Stories share similar arcs: after analyzing 1.6k novels, this paper argues there are only 6 basic ones:

1 Rags to Riches (rise)

2 Riches to Rags (fall)

3 Man in a Hole (fall rise)

4 Icarus (rise fall)

5 Cinderella (rise fall rise)

6 Oedipus (fall rise fall) 3/

epjdatascience.springeropen.com/articles/10.11…

Stories are linked to cultural values. You can make predictions about economic factors from the stories people tell, as this cool paper 👇 shows. 4/

https://twitter.com/emollick/status/1396597785147977728?s=20

The stories organizations tell matter, too: when firms share stories in which their executives were clever but sneaky, the result is less helping & more deviance! Firms that share stories about low-level employees upholding values see increased helping & decreased deviance. 5/

Firms also transmit learning through stories. This paper shows that stories of failure work best. They are more easily applied than stories of success, especially if the story is interesting & you believe it is important to learn from mistakes. But make sure it is a true story. 6/

Entrepreneurs rely especially heavily on stories: initially, all you have is your pitch, a story about your startup. You have to use it to get people to give you resources, buy your product, join your company, etc. Here is a thread on how to do that: 7/

https://twitter.com/emollick/status/1100190246778667008?s=20

One of the most fascinating examples of the power of stories in startups is how the Theranos fraud relied on Elizabeth Holmes’s ability to tell a compelling story, which involved her tapping into the archetypes of what we expect an entrepreneur to be (black turtleneck & all) 👇

https://twitter.com/emollick/status/1108543772739227649
