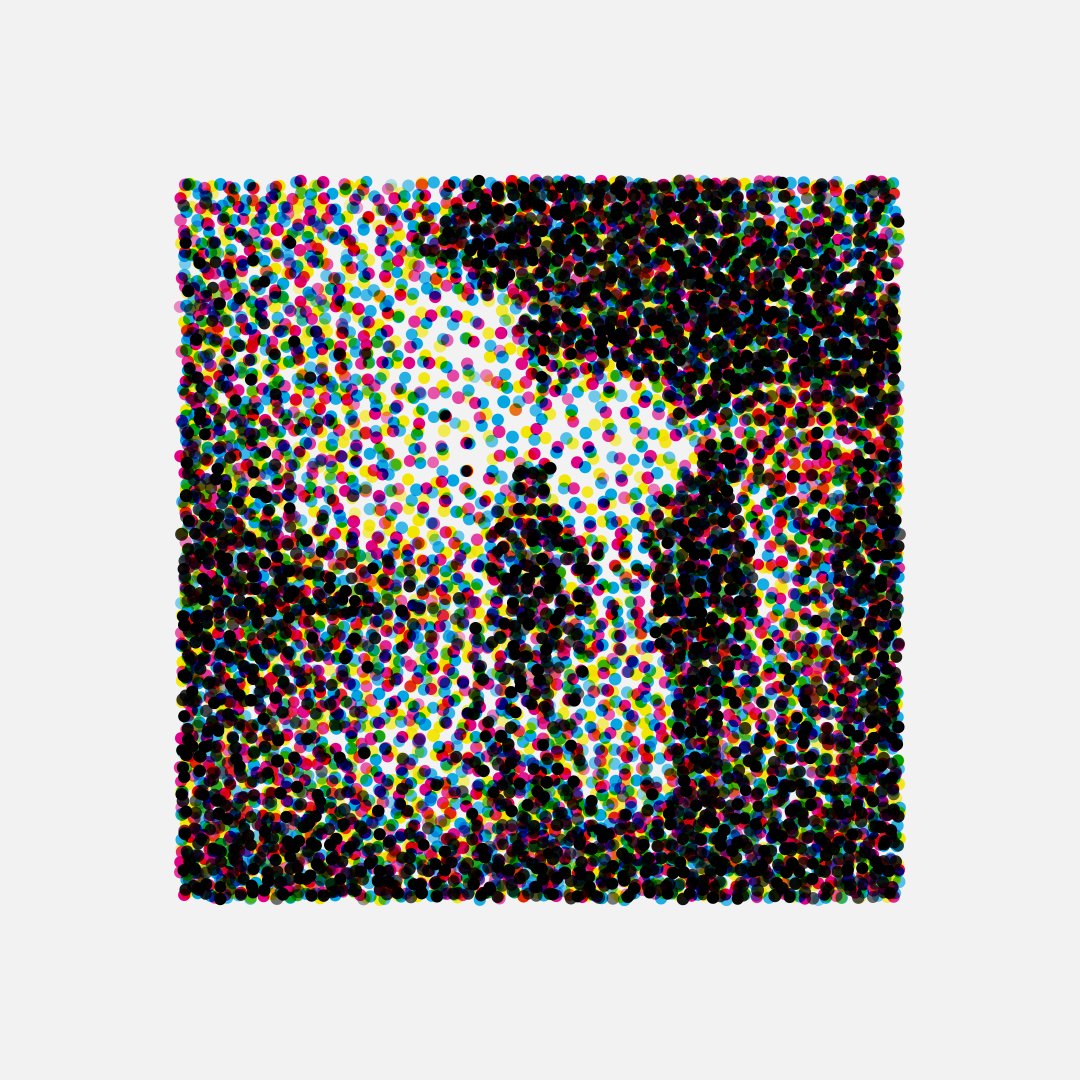

I have a 7-color e-ink screen arriving tomorrow, so I'm experimenting with custom color dithering techniques. Here is Subscapes #39 reduced to a 7-color, 600x448px paletted image.

One thing worth exploring further is an error diffusion technique that isn't based on a simple scanline. The left image uses a plain left-to-right scanline. For the right image, I first run through the pixels with random jumps, then apply a second left-to-right pass, to avoid dither patterns.
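For reference, here is a minimal sketch of the plain left-to-right scanline variant. It assumes Floyd-Steinberg weights and nominal RGB values for the seven inks. Both are assumptions for illustration and not necessarily what produced the images above. The names `PALETTE`, `nearest_index`, and `dither_scanline` are hypothetical.

```python
# Assumed nominal RGB values for a 7-color e-ink palette; real panels differ.
PALETTE = [
    (0, 0, 0),        # black
    (255, 255, 255),  # white
    (0, 255, 0),      # green
    (0, 0, 255),      # blue
    (255, 0, 0),      # red
    (255, 255, 0),    # yellow
    (255, 128, 0),    # orange
]


def nearest_index(pixel, palette=PALETTE):
    """Index of the palette color closest to pixel (squared RGB distance)."""
    return min(
        range(len(palette)),
        key=lambda i: sum((p - c) ** 2 for p, c in zip(pixel, palette[i])),
    )


def dither_scanline(pixels, width, height, palette=PALETTE):
    """Left-to-right error diffusion with Floyd-Steinberg weights (assumed).

    pixels: row-major list of (r, g, b) tuples, length width * height.
    Returns a list of palette indices of the same length.
    """
    # Mutable float copy that accumulates the diffused error.
    work = [[float(c) for c in p] for p in pixels]
    out = [0] * (width * height)
    # (dx, dy, weight): right, below-left, below, below-right.
    kernel = ((1, 0, 7 / 16), (-1, 1, 3 / 16), (0, 1, 5 / 16), (1, 1, 1 / 16))
    for y in range(height):
        for x in range(width):
            i = y * width + x
            idx = nearest_index(work[i], palette)
            out[i] = idx
            # Quantization error: what the chosen ink failed to represent.
            err = [o - c for o, c in zip(work[i], palette[idx])]
            for dx, dy, w in kernel:
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and ny < height:
                    j = ny * width + nx
                    for ch in range(3):
                        work[j][ch] += err[ch] * w
    return out
```

Because error only flows right and down, the first row is quantized with nothing diffused into it from above. A random-jump pass would presumably visit pixels in shuffled order instead of the nested `y`/`x` loops, but that part is not sketched here.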

Notice the very top is problematic in both. On the left, the pattern repeats noticeably until it 'fixes' itself. On the right, the first scanline hasn't yet received any diffused error, so it doesn't match the rest.

Something that blows my mind with dithering is that this image is just 7 colors, but putting certain colors near each other tricks our brains into seeing more than the reduced palette.

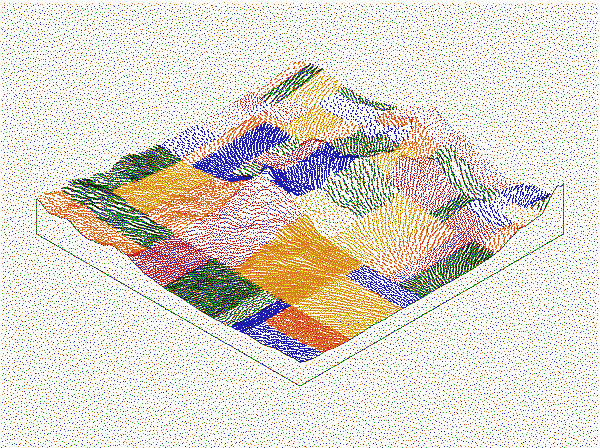

Screen arrived! Easy setup and pretty nice quality. Here is Subscapes #042 and #039 realized on the surface. The former uses just 2 colors, the latter uses all 7.

Notice in the second photo it is unplugged—the image will persist indefinitely, which is the beauty of e-ink!

The update loop is *slow* and chaotic though, around 30 seconds because of all the colors. Perhaps there's some room to improve it if I dive into their Python driver a little.
