Watch carefully as GitHub PR tries to (re)define copyright and set a precedent that the licensing terms of open source code don't apply in this case...

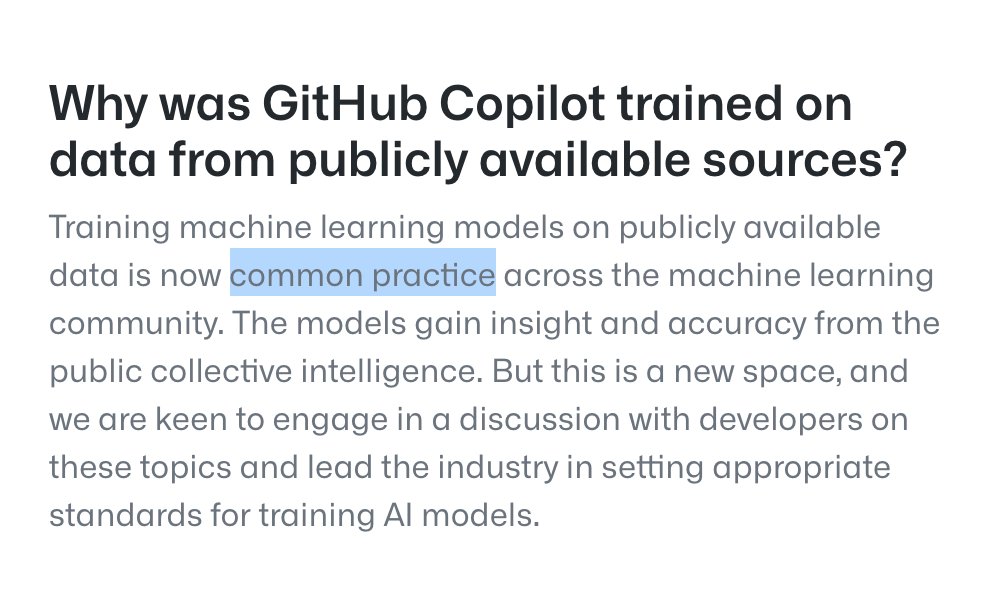

Left: Text from June 29th.

Right: Edited text on July 1st.

See FAQ section at copilot.github.com

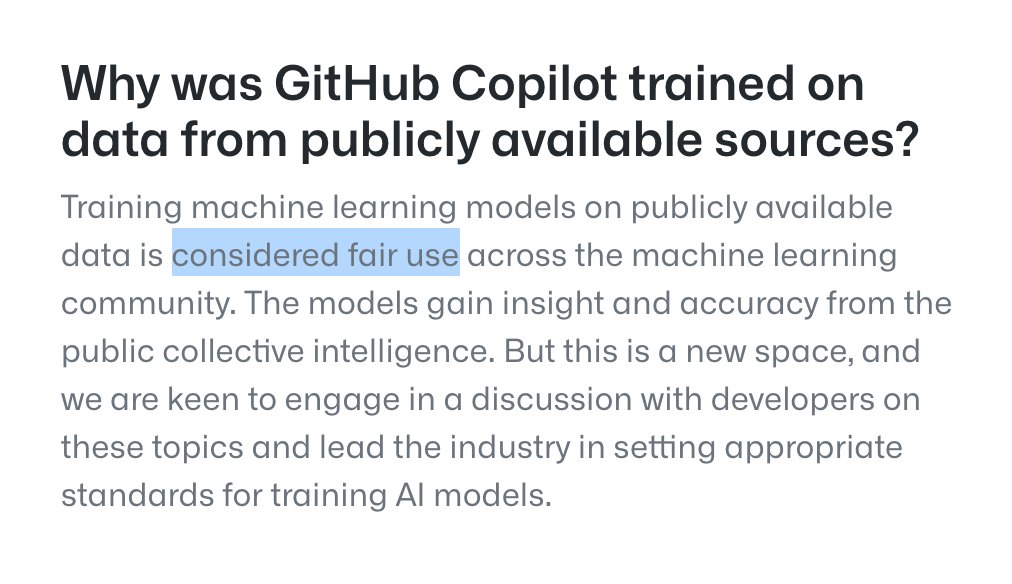

Left: Text from June 29th.

Right: Edited text on July 1st.

See FAQ section at copilot.github.com

Just for the record: it's not considered fair use, @github.

It's highly controversial in the community.

Multiple datasets have been removed from the internet because of such legal issues, and courts have forced companies to delete models built on data acquired questionably.

It's highly controversial in the community.

Multiple datasets have been removed from the internet because of such legal issues, and courts have forced companies to delete models built on data acquired questionably.

There was a mobile "face app" that had questionable terms of service, and the company used the data to train their models. In the ruling, they were ordered to delete the models.

I can't remember the company or app name, will post below when I find it...

I can't remember the company or app name, will post below when I find it...

https://twitter.com/ferrouswheel/status/1410531443458011138

Here it is. techcrunch.com/2021/01/12/ftc…

GitHub is in a similar position because it's using data from users "without properly informing them what it was doing."

In short, "users who did not give express consent to such a use" applies here too since License terms would be broken.

GitHub is in a similar position because it's using data from users "without properly informing them what it was doing."

In short, "users who did not give express consent to such a use" applies here too since License terms would be broken.

It certainly is a different case. But just to refute the original premise: it's not generally considered fair use in the community.

It's a controversial topic and what we're seeing now is a coordinated PR campaign until GitHub can help set the standards.

It's a controversial topic and what we're seeing now is a coordinated PR campaign until GitHub can help set the standards.

https://twitter.com/ferrouswheel/status/1410533552932888576

This is a digital version of the Tragedy Of The Commons.

It's very different in practice as there's no scarcity digitally, but the impact is arguably bigger... en.wikipedia.org/wiki/Tragedy_o…

It's very different in practice as there's no scarcity digitally, but the impact is arguably bigger... en.wikipedia.org/wiki/Tragedy_o…

FWIW, even the Creative Commons website has been nudging people for years towards using licenses that can easily be exploited commercially.

You're not approved for "Free Culture" if you don't let multi-nationals profit from your work!

You're not approved for "Free Culture" if you don't let multi-nationals profit from your work!

I think there's a small window of opportunity to ensure that new legislation doesn't benefit multi-nationals exclusively.

If this matter is left to sponsored Think Tanks, then regular people will suffer...

If this matter is left to sponsored Think Tanks, then regular people will suffer...

https://twitter.com/martinpi/status/1410537527778349059

They understand fully the importance of this.

They have a legal team working on it. They said they want to be a part of defining future standards. If that fails, they'll update their Terms Of Service.

They have a legal team working on it. They said they want to be a part of defining future standards. If that fails, they'll update their Terms Of Service.

https://twitter.com/Banbreach/status/1410539391961862145

The law works differently than you think it does! If you have the money and the intention, *everything* can be challenged.

You ask your expensive lawyer: "I want to win this case and establish this precedent" and they'll find many options.

You ask your expensive lawyer: "I want to win this case and establish this precedent" and they'll find many options.

https://twitter.com/Banbreach/status/1410540448297390081

Let's consider the fact there is no Fair Use directive in the EU, and in the US there are four factors that can be debated and argued until precedent is set in such cases.

https://twitter.com/ExpertDan/status/1410578981884203011

When hosting code on GitHub, it would fall under the Terms Of Service of a traditional business relationship. We possibly have better protection & recourse (e.g. with EU Regulators or FTC, as above) than if repositories were hosted elsewhere...

https://twitter.com/Donzanoid/status/1410586168606003205

They say it's trained on public data, and I highly doubt they trained on private repositories.

GPT-3 and similar size models have been proven to learn samples verbatim, so it'd be a huge breach of contract if information leaked!

GPT-3 and similar size models have been proven to learn samples verbatim, so it'd be a huge breach of contract if information leaked!

https://twitter.com/kcimc/status/1410588638476394497

This is the core of the issue.

GitHub's Terms and Conditions do not allow it to train on your code or exploit it commercially. See section D here: docs.github.com/en/github/site…

GitHub's Terms and Conditions do not allow it to train on your code or exploit it commercially. See section D here: docs.github.com/en/github/site…

https://twitter.com/kcimc/status/1410602339275218960

GitHub can "parse" — but if you claim Deep Learning is just parsing @ylecun will tell you it's extrapolation and that's difficult.

GitHub can "store" — but if you claim Deep Learning is just storing @SchmidhuberAI will find a paper from 1986 to prove you wrong.

GitHub can "store" — but if you claim Deep Learning is just storing @SchmidhuberAI will find a paper from 1986 to prove you wrong.

Since they trained on content hosted on GitHub that already falls under their T&C, when they call it "Fair Use" it's a way to circumvent the explicit license — which could weaken their argument legally and makes it very different from other cases.

https://twitter.com/kcimc/status/1410601885564772352

Facebook regularly trains AI models on photos from Instagram, but I presume they have the right to do anything because their T&C now probably cover everything their lawyers could think of ;-)

Thank you Dan! This is an important thing to do: please make a statement publicly about this or retweet someone else's.

https://twitter.com/QEDanMazur/status/1410603894149767169

It's easy for machines to track licenses, and companies have a responsibility of due care & legally bound to respect such contracts, hence they should do so!

https://twitter.com/Veqtor/status/1410613178166169601

Going to wrap up this thread...

TL;DR I'd also argue that GitHub is in a weak position on Fair Use, an even weaker because of their T&C explicitly does not allow "selling" our code as part of a derived-works like CoPilot.

TL;DR I'd also argue that GitHub is in a weak position on Fair Use, an even weaker because of their T&C explicitly does not allow "selling" our code as part of a derived-works like CoPilot.

https://twitter.com/kylotan/status/1410611316998377473

Reopening this thread with more insights on Copyright and GitHub Copilot. 🔓🖋️

There were so many great / interesting replies I want to track them in one place...

There were so many great / interesting replies I want to track them in one place...

ATTRIBUTION

GitHub is able to track whether generated snippets match the database on a character-level, but it's going to require better matching for users to do correct attribution of code snippets.

GitHub is able to track whether generated snippets match the database on a character-level, but it's going to require better matching for users to do correct attribution of code snippets.

https://twitter.com/KevinAWorkman/status/1410985479231533056

LIABILITY

If the generated code has legal implications (e.g. incompatible license), it's the end-users that will be responsible.

If the generated code has legal implications (e.g. incompatible license), it's the end-users that will be responsible.

https://twitter.com/fakebaldur/status/1410898211217289221

This came up many times!

One solution would be to redesign the product, likely training multiple models based on what licenses are compatible with the end-user's requirements.

One solution would be to redesign the product, likely training multiple models based on what licenses are compatible with the end-user's requirements.

https://twitter.com/m4xm4n/status/1410752754474262528

UNLICENSED CODE

Assuming all public repositories were used for training, even those without a license: this seems to be yet another case for their legal team to handle! It's different than claiming the right to train on GPL or non-commercial licenses.

Assuming all public repositories were used for training, even those without a license: this seems to be yet another case for their legal team to handle! It's different than claiming the right to train on GPL or non-commercial licenses.

https://twitter.com/HelloFillip/status/1410880152054251523

NEW LICENSES

This also came up many times! Should we design licenses that explicitly specify whether ML models can or cannot be trained on the code?

(Right now GitHub's claim of Fair Use is side-stepping the licenses anyway.)

This also came up many times! Should we design licenses that explicitly specify whether ML models can or cannot be trained on the code?

(Right now GitHub's claim of Fair Use is side-stepping the licenses anyway.)

https://twitter.com/sigodme/status/1410771145570271239

VERBATIM CONTENT

Fast-inverse square root is memorized as-is with comments and license. If it can be used as a search engine, maybe it should be treated as such legally?

Fast-inverse square root is memorized as-is with comments and license. If it can be used as a search engine, maybe it should be treated as such legally?

https://twitter.com/mitsuhiko/status/1410886329924194309

DERIVATIVE WORKS

For databases, the index is considered a separate copyright than the content: opendatacommons.org/faq/licenses/

However, models like GPT-3 combine both content & index so the whole thing is a derivative work of the content.

For databases, the index is considered a separate copyright than the content: opendatacommons.org/faq/licenses/

However, models like GPT-3 combine both content & index so the whole thing is a derivative work of the content.

https://twitter.com/_m_libby_/status/1410957198574997512

ACCOUNTABILITY

GitHub / Microsoft is accountable in many ways, it just needs someone to actually *hold* them accountable — otherwise (by default) the company is expected to maximize shareholder value.

GitHub / Microsoft is accountable in many ways, it just needs someone to actually *hold* them accountable — otherwise (by default) the company is expected to maximize shareholder value.

https://twitter.com/MaxALittle/status/1410983337406173186

MIGRATING PLATFORMS

By hosting code on GitHub, it falls under their Terms & Conditions — which (as of now) do *not* allow selling your code, also does not allow training.

In short, the legal options may actually be better by being on GitHub for now...

By hosting code on GitHub, it falls under their Terms & Conditions — which (as of now) do *not* allow selling your code, also does not allow training.

In short, the legal options may actually be better by being on GitHub for now...

https://twitter.com/tastapod/status/1410878292492750848

LEGALEZE

These cases are generally fascinating because there are so many different overlapping aspects! Here, for Ever (face database), it was apparently not about copyright but informed consent for private data.

These cases are generally fascinating because there are so many different overlapping aspects! Here, for Ever (face database), it was apparently not about copyright but informed consent for private data.

https://twitter.com/Andrew_Taylor/status/1410876184372695041

LOBBYING

Public opinion can often sway juries and even judges. This is why you see PR campaigns alongside the court cases, e.g. Epic vs. Apple.

CoPilot's launch is a PR campaign in multiple ways.

Public opinion can often sway juries and even judges. This is why you see PR campaigns alongside the court cases, e.g. Epic vs. Apple.

CoPilot's launch is a PR campaign in multiple ways.

https://twitter.com/noneuclideangrl/status/1410857993076346886

FINE LINE

Doesn't Disney have an entire legal department dedicated to ensuring copyright laws get changed just before Mickey Mouse expires?

This will be fun to watch...

Doesn't Disney have an entire legal department dedicated to ensuring copyright laws get changed just before Mickey Mouse expires?

This will be fun to watch...

https://twitter.com/jpwarren/status/1410746836655038464

FAIR USE

You can find the definition of Fair Use (U.S. only) here:

copyright.gov/fair-use/more-…

However, because it's Case Law, that definition should be interpreted through the lens of the many rulings that have happened over the years.

You can find the definition of Fair Use (U.S. only) here:

copyright.gov/fair-use/more-…

However, because it's Case Law, that definition should be interpreted through the lens of the many rulings that have happened over the years.

https://twitter.com/LeeFlower/status/1410748483028414467

LATE CAPITALISM

Outrage that licenses for content should be respected? Totally justified! It's a foundation of our field. (Companies have legal responsibilities.)

This is a "Think of the Children" argument, appeal to pity: en.wikipedia.org/wiki/Think_of_…

Outrage that licenses for content should be respected? Totally justified! It's a foundation of our field. (Companies have legal responsibilities.)

This is a "Think of the Children" argument, appeal to pity: en.wikipedia.org/wiki/Think_of_…

https://twitter.com/Inoryy/status/1411039391498317833

OPTIMISM

I'm very optimistic how this debate will evolve over the next months. With or without regulation, I expect consortiums to form (already happening) that specialize in providing high-quality, legal & lawful datasets for companies to train on.

I'm very optimistic how this debate will evolve over the next months. With or without regulation, I expect consortiums to form (already happening) that specialize in providing high-quality, legal & lawful datasets for companies to train on.

NON-PROFIT RESEARCH

The European Union has you covered if you're in academia here. Also for heritage/cultural projects! ✅

The European Union has you covered if you're in academia here. Also for heritage/cultural projects! ✅

https://twitter.com/alexjc/status/1411221772960161796

SECRET KEYS

GPT-3 models are known to leak personally identifying information. Until now, GitHub claimed that secrets were hallucinations that "appear plausible" but aren't.

GPT-3 models are known to leak personally identifying information. Until now, GitHub claimed that secrets were hallucinations that "appear plausible" but aren't.

https://twitter.com/fxn/status/1410460805611634696

• • •

Missing some Tweet in this thread? You can try to

force a refresh