I've been talking about ivermectin a bit recently, and every time I mention it someone will link me to this odd website - ivmmeta dot com

So, a bit of a review. I think this falls pretty solidly into the category of pseudoscience 1/n

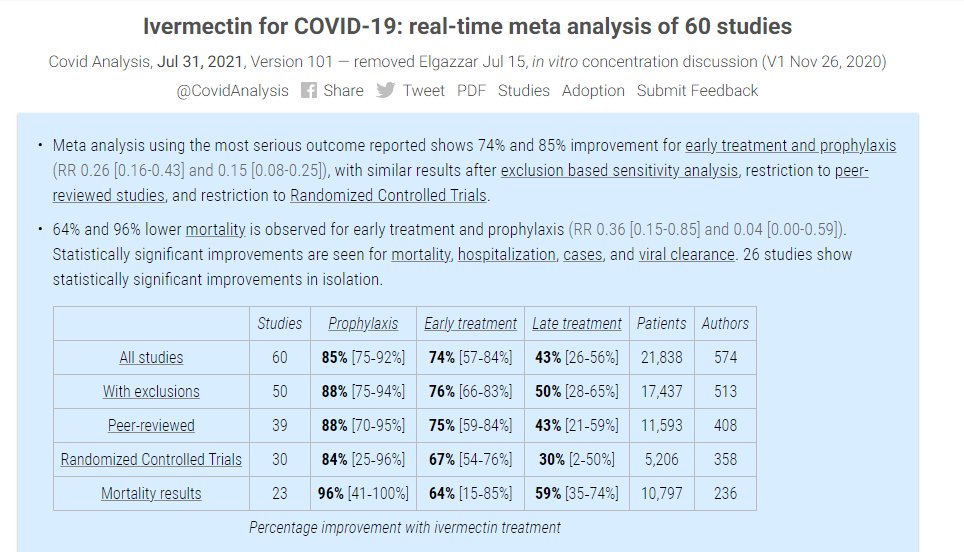

2/n The semi-anonymous site claims to be a "real-time meta analysis" of all published studies on ivermectin, collating an impressive 60 pieces of research

It's flashy, well-designed, and at face value appears very legitimate

3/n The benefits that this website shows for ivermectin are pretty amazing - 96%(!) lower mortality based on 10,797 patients' worth of data is quite astonishing. Sounds like we should all be using ivermectin!

Except, well, these numbers are totally meaningless

4/n Digging into the site, you're immediately hit with this error. That's not how p-values work at all; any stats textbook will show you why this statement is entirely untrue

5/n Most of these dotpoints are wrong in some way (heterogeneity causing an underestimate is particularly hilarious) but this statement about CoIs is wild considering that there are several potentially fraudulent studies in the IVM literature

6/n Going back to the heterogeneity point, this is the explanation from the authors about why heterogeneity is not a problem in their analysis. They appear to have entirely misunderstood what heterogeneity is (hint: this is more about BIAS than heterogeneity)

7/n Also worth noting, I've previously shown the heterogeneity is high in meta-analysis of IVM for COVID-19 mortality, and that's almost entirely because there are 2 studies that show a massive benefit and a bunch of studies that show no benefit at all

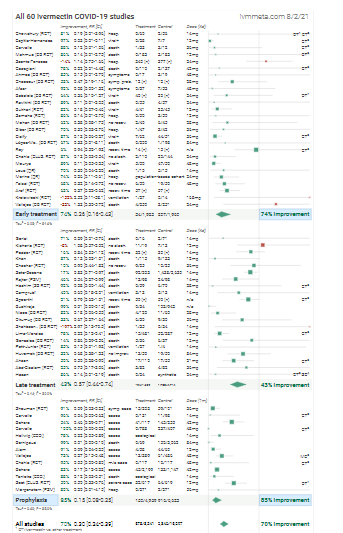
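
For anyone who wants to see what heterogeneity actually measures, here is a minimal Python sketch of Cochran's Q and the I² statistic. The log risk ratios and standard errors are made up for illustration (two studies with a huge benefit, the rest close to null); they are NOT the real ivermectin numbers, and the function name is just a label chosen here.

```python
import math

def cochran_q_and_i2(log_effects, std_errors):
    """Return (fixed-effect pooled log estimate, Cochran's Q, I^2 in percent)."""
    weights = [1.0 / se ** 2 for se in std_errors]  # inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, log_effects)) / sum(weights)
    # Q: weighted squared distance of each study from the pooled estimate
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, log_effects))
    df = len(log_effects) - 1
    # I^2: share of the variation beyond what chance alone would produce
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, q, i2

# Hypothetical data: two studies with a massive benefit, four with none
log_rr = [math.log(0.10), math.log(0.15), 0.0, 0.05, -0.05, 0.02]
se = [0.3, 0.3, 0.2, 0.25, 0.2, 0.3]
pooled, q, i2 = cochran_q_and_i2(log_rr, se)
print(f"Q = {q:.1f}, I^2 = {i2:.0f}%")
```

With a pattern like this, a couple of outlying studies are enough to push I² very high, which is the situation described in 7/n.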

8/n Anyway, back to the website - the authors then present this forest plot of effect estimates

Each dot is a point estimate, and the lines around the dots represent confidence intervals

9/n Now, any data thug will immediately notice something wildly improbable about this forest plot (H/T @jamesheathers)

Can you see the issue? 👀👀👀👀

10/n While you have a think, here's a graph I made replicating these results. Not very pretty, but the final result is the same (with some minor rounding differences)

11/n Ok, so back to the question - why does this look problematic?

It comes down to confidence intervals. When you've got a bunch of very wide confidence intervals from different studies, you expect the point estimates to move around inside them quite a bit

12/n Instead, look at those point-estimates! Even though they've all got MASSIVE intervals, virtually all the PEs are within 0.05-0.1 either side of 0.15

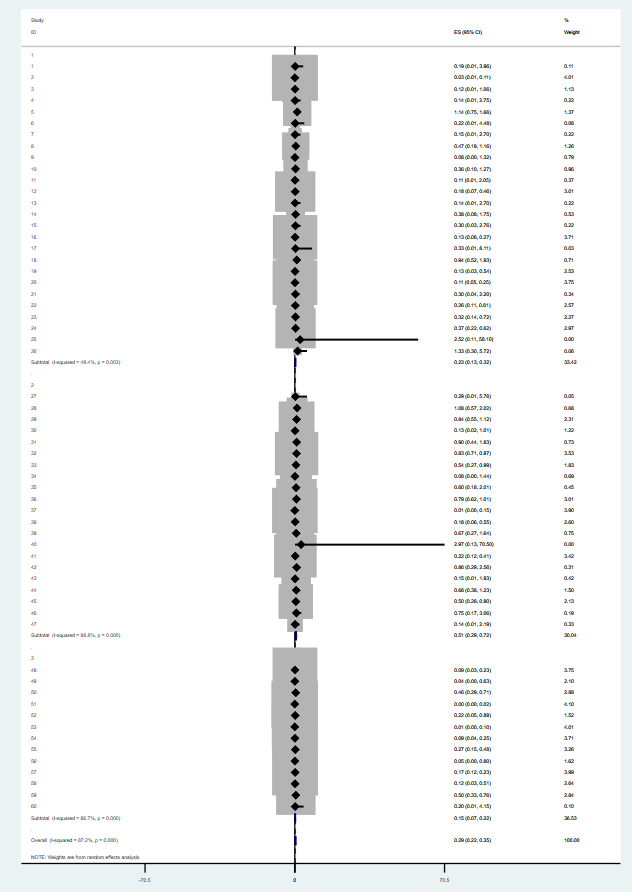
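
To put a rough number on "move around quite a bit", here is a small simulation sketch. Every input is an assumption made for illustration: a true risk ratio of 0.15, a standard error of 1.0 on the log scale for each study (roughly a 95% interval from 0.02 to 1.06), and normally distributed log risk ratios.

```python
import math
import random

def simulate_point_estimates(true_rr, se_log_rr, n_studies, seed=0):
    """Draw one point estimate per study, assuming log(RR) is normally
    distributed around the true value with the given standard error."""
    rng = random.Random(seed)
    return [math.exp(rng.gauss(math.log(true_rr), se_log_rr))
            for _ in range(n_studies)]

estimates = simulate_point_estimates(true_rr=0.15, se_log_rr=1.0, n_studies=1000)
# Share of simulated studies landing within 0.1 either side of 0.15
share_close = sum(0.05 <= rr <= 0.25 for rr in estimates) / len(estimates)
print(f"Share of estimates between 0.05 and 0.25: {share_close:.0%}")
```

Under these assumed inputs the theoretical share is only about 56%, so a good chunk of studies should land well outside that band purely by chance.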

13/n We can actually graph this. In Stata, I made what's called a funnel plot, which basically plots each point estimate against its standard error, with a line at the overall estimate from the meta-analysis model
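
The same idea can be sketched in Python. This is an illustration only: the risk ratios and confidence limits below are hypothetical, and the standard error is backed out of a 95% interval on the assumption that the interval is symmetric on the log scale.

```python
import math

def se_from_ci(lower, upper, z=1.96):
    """Standard error of log(RR) recovered from a 95% confidence interval."""
    return (math.log(upper) - math.log(lower)) / (2 * z)

def funnel_points(studies):
    """Return (log RR, SE) pairs: the x and y coordinates of a funnel plot."""
    return [(math.log(rr), se_from_ci(lo, hi)) for rr, lo, hi in studies]

# Hypothetical (RR, lower limit, upper limit) triples
studies = [(0.15, 0.02, 1.10), (0.20, 0.05, 0.80), (0.90, 0.60, 1.35)]
points = funnel_points(studies)
for log_rr, se in points:
    print(f"log RR = {log_rr:+.2f}, SE = {se:.2f}")
```

Plot these with the standard error on an inverted y-axis and a vertical line at the pooled estimate, and you get the usual funnel shape.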

14/n What you expect to see, if there are no issues, is an equal number of points on either side of the line at similar positions

Instead, ~virtually every point is below the estimate of the effect~

15/n I ran an Egger's regression to test the statistical significance of this, and the result is that there is a huge amount of what would usually be called 'publication' bias in the results. In other words, this is extremely weird
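
For the curious, here is a bare-bones sketch of what an Egger's regression does: regress each study's standardized effect (effect divided by its standard error) on its precision (1 divided by the standard error) and look at the intercept. The data are hypothetical, built so that smaller studies show bigger benefits; this is not the Stata command used for the thread, and it stops at the t statistic rather than computing a p-value.

```python
import math

def egger_test(effects, std_errors):
    """Egger's regression: regress effect/SE on 1/SE by ordinary least squares.
    Returns (intercept, standard error of the intercept, t statistic)."""
    x = [1.0 / se for se in std_errors]                 # precision
    y = [e / se for e, se in zip(effects, std_errors)]  # standardized effect
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    sigma2 = residual_ss / (n - 2)                      # residual variance
    se_intercept = math.sqrt(sigma2 * (1.0 / n + mean_x ** 2 / sxx))
    return intercept, se_intercept, intercept / se_intercept

# Hypothetical log risk ratios: the larger the SE, the bigger the apparent benefit
std_errors = [0.2, 0.4, 0.6, 0.8, 1.0]
log_rr = [-0.69, -0.91, -1.08, -1.32, -1.50]
intercept, se_int, t_stat = egger_test(log_rr, std_errors)
print(f"intercept = {intercept:.2f}, t = {t_stat:.1f}")
```

An intercept far from zero, judged against a t distribution with n - 2 degrees of freedom, signals funnel asymmetry. It says the plot is lopsided; it does not say why.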

16/n What's happening here?

Well, this is where we really get into the weeds

You see, the meta-analysis on this website is REALLY BIZARRE

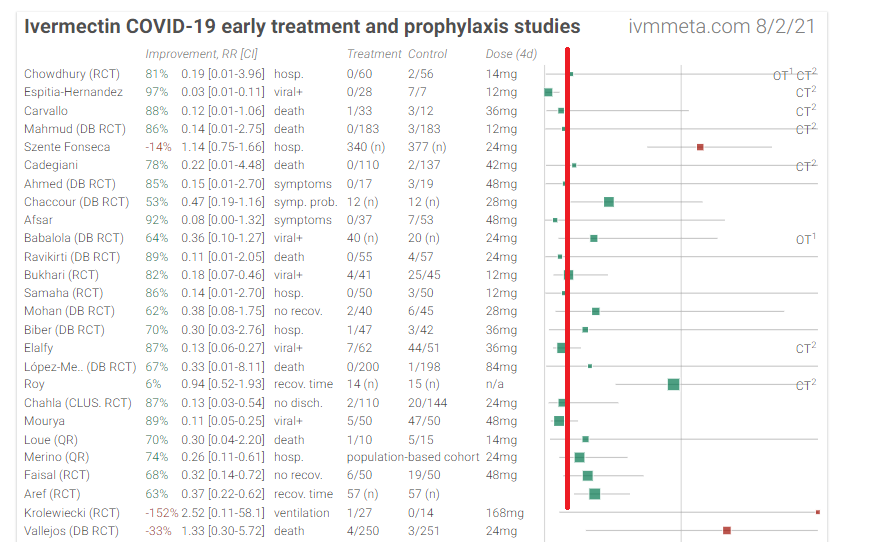

17/n How bizarre? Well, here are the measurements from the 'early' treatment studies - hospitalization is in the same model as % viral positivity, recovery time, symptoms, and death

All in the same model

WILD

18/n Worse still, these appear to be picked almost entirely arbitrarily. The website claims to choose the "most serious" outcome, but then immediately says that in cases where no patients died or most people recovered a different estimate was used

19/n Even a fairly surface skim shows that what appears to actually be happening here is that the authors choose the outcome that shows the biggest benefit for ivermectin

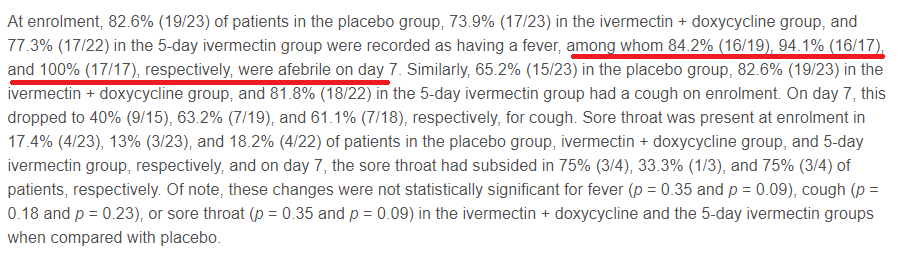

20/n For example, the analysis includes this paper. The primary outcome was viral load, which was identical between groups

Never fear however, because ivmmeta won't take "null findings" as an answer!

21/n If you dig through the supplementaries, what you find is that for "all reported symptoms" there was a large but statistically insignificant difference, represented in this graph of marginal predicted probabilities from a logistic model. It is mostly driven by an/hyposmia

22/n If you eyeball "any symptoms", you get the results that ivmmeta included in their analysis

But that's TOTALLY ARBITRARY. Why not choose cough (where there's no difference) or fever (where IVM did WORSE)

23/n Also, hilariously, this study used the last observation carried forward method to account for missing data in symptom reporting. You can actually see this in the supplementaries - it's possible the entire result comes from a few people not filling out their diaries properly
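
If you haven't met last observation carried forward before, here is a minimal sketch of the idea. The diary data are invented, and this is not the study's actual code, just the general technique.

```python
def locf(values):
    """Last observation carried forward: replace each missing entry (None)
    with the most recent observed value. Leading gaps stay missing."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled

# Hypothetical symptom diary: True = symptom reported, None = diary not filled in
diary = [True, True, None, None, None]
print(locf(diary))  # [True, True, True, True, True]
```

A patient who stops filling in the diary while still symptomatic gets counted as symptomatic for every remaining day, whether or not they actually were.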

24/n None of this should matter, because the trial found NO BENEFIT FOR IVERMECTIN, but this has been reported and included in ivmmeta dot com as a hugely beneficial result

25/n This explains the bias I noted above - it's not publication bias, it's that the authors appear to have generally chosen whichever result makes ivermectin look better to include in their model

Not really scientific, that!

26/n But the fun doesn't stop there. The inclusion criterion for this website is any study published on ivermectin, which has led to what I can only call total junk science being lumped in with decent studies

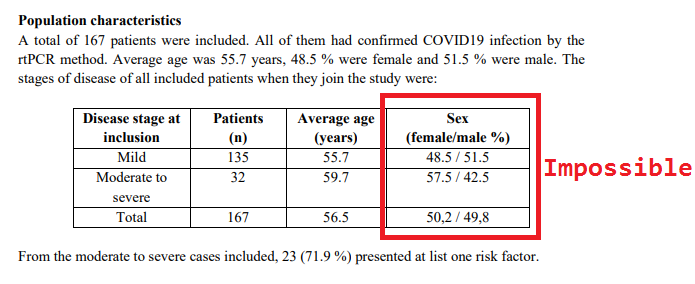

27/n Here's a study with impossible percentages in table 1 that used a comparator of 12 completely random patients as their control. They don't even say if these 12 people had COVID-19

Included in ivmmeta, no questions asked

28/n ivmmeta includes all of the studies I've been tweeting about recently including this one

And this one

https://twitter.com/GidMK/status/1421368493975359490?s=20

And this one

https://twitter.com/GidMK/status/1420582871031373824?s=20

And this one

https://twitter.com/GidMK/status/1419557546872819719?s=20

29/n I've now read through about 3/4 of all the studies on the website, and I would say at least 1/2 of them are so low-quality that the figures they report are basically meaningless

30/n Moreover, sometimes the website just does stuff that is wildly strange

Here's a study with no placebo control. They appear to have calculated a relative risk of...whether the patients in this hospital got treated with ivermectin? WHY

31/n I could keep going - there's just so much there. Even just the basic concept of combining literally any number from any study and saying that it makes the model MORE ROBUST is so intrinsically flawed

So. Many. Mistakes

32/n But this thread is already too long, so to sum up

- the website looks flashy

- the methodology is totally broken

- I would call this pretty pseudoscientific; all the trappings of science, with none of the rigor

33/n If the authors do indeed want to make this into a reasonably adequate analysis, there are a few steps:

1. Separate treatment outcomes (i.e. analyze ONLY deaths in a model)

2. Rate QUALITY of included literature (i.e. don't aggregate trash with decent studies)

34/n

3. Separate STUDY TYPES (i.e. don't include an ecological trial with no control group and an RCT)

4. Take out all the weird language about "probabilities" and heterogeneity, pretty much all of that is just flatly incorrect
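
As a rough illustration of steps 1 and 3, here is a sketch that pools only one outcome from one study type. Everything in it is hypothetical: the `StudyResult` fields, the study names, and the numbers. It uses a simple fixed-effect inverse-variance model to keep things short; with real heterogeneity a random-effects model would usually be the better choice.

```python
import math
from dataclasses import dataclass

@dataclass
class StudyResult:
    name: str
    design: str    # e.g. "RCT" or "observational"
    outcome: str   # e.g. "death" or "viral positivity"
    log_rr: float  # log risk ratio
    se: float      # standard error of the log risk ratio

def pool_fixed_effect(results):
    """Inverse-variance fixed-effect pooled log RR and its standard error."""
    weights = [1.0 / r.se ** 2 for r in results]
    pooled = sum(w * r.log_rr for w, r in zip(weights, results)) / sum(weights)
    return pooled, math.sqrt(1.0 / sum(weights))

def pool_one_outcome(results, outcome, design):
    """Pool only the studies that match a single outcome and study design."""
    subset = [r for r in results if r.outcome == outcome and r.design == design]
    if not subset:
        raise ValueError("no studies match this outcome and design")
    return pool_fixed_effect(subset)

# Hypothetical studies, not real data
hypothetical_results = [
    StudyResult("A", "RCT", "death", -0.2, 0.5),
    StudyResult("B", "RCT", "death", 0.2, 0.5),
    StudyResult("C", "observational", "death", -2.0, 0.1),
    StudyResult("D", "RCT", "viral positivity", -1.5, 0.2),
]
pooled, pooled_se = pool_one_outcome(hypothetical_results, "death", "RCT")
print(f"pooled RR = {math.exp(pooled):.2f}")
```

Studies C and D never enter the model, so a dramatic observational result or a surrogate outcome cannot drag the mortality estimate around.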

35/n Also, because people always ask when it comes to ivermectin - no, I've never been paid by any pharma companies for anything, I receive no pharma funding, and all of my COVID-19 work is unpaid anyway

36/n Frankly, I don't want your money. Give it to something more worthwhile, like this charity that provides menstrual products to homeless women; they're much more deserving than I am: sharethedignity.org.au

37/n One final point - I honestly hope ivermectin works. Based on the current evidence, it looks like the benefit will be modest, but it's still not unlikely that it helps a bit. Problem is, current best evidence is also consistent with harm

38/n Ugh, one other note - one shitty website that makes mistakes does not "disprove" ivermectin, just like the website never proved much itself. The question is still open in my opinion, regardless of ivmmeta

39/n An addition - this was a very unfair thing to say about this piece of research, which is very well-done. The issue is not with these researchers, but the ivmmeta website itself and I should've been more clear

https://twitter.com/GidMK/status/1422044424436011009?s=20

40/n A brief update - this was an unfair point to make - the trial does indeed have a control group (such as it is), just not a very useful one

https://twitter.com/GidMK/status/1422049090007797766?s=20

41/n You can also see some more examples of where the authors of this pseudoscientific website contradict their own stated methodology in this brief thread from Dr. Sheldrick

https://twitter.com/K_Sheldrick/status/1431507081496969222?s=20

42/n And worth noting that we've come forward with serious concerns about fraud for 4 studies that remain up in the main analysis on the website

Not scientific at all, but, well, not unexpected!

43/n People keep directing me to this pseudoscientific website, so I might as well keep pointing out issues

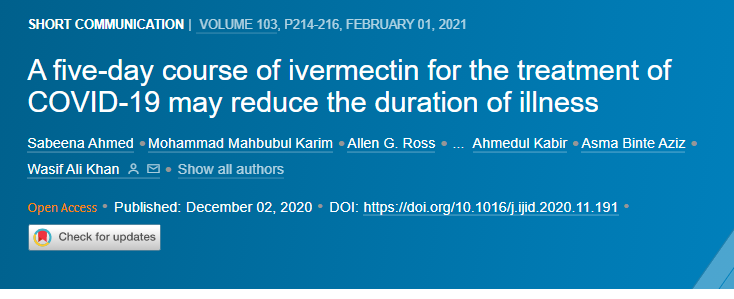

This study - Ahmed et al - found no benefit for ivermectin on duration of hospitalization, cough, or sore throat. So why is it presented as massively positive?

44/n Well, there were two ivermectin groups. In one of them, everyone who had a fever at the start of treatment recovered. In the placebo group, 3 people still had a fever at day 5

45/n Now, obviously this contradicts ivmmeta's stated methodology - they should use hospitalization length, which is more severe than reported symptoms - but it's also quite funny because they've even excluded ONE OF THE IVERMECTIN GROUPS

46/n In reality, this trial should have a point estimate above 1, because in this trial people treated with ivermectin stayed (non-significantly) longer in hospital than the placebo group, but the website is only interested in promoting ivermectin, not facts

47/n You can even see just how dishonest this website is when looking at cough. It's debatable whether cough is more severe than fever, because there's no assessment in the paper, but the placebo group did better than ivermectin in this trial on cough (p=0.15)
