I was going to write about how Apple's new detector for child sexual abuse material (CSAM), promoted under the umbrella of child protection and privacy, is a firm step towards pervasive surveillance and control, but many others have already written such good stuff. Some of it 👇

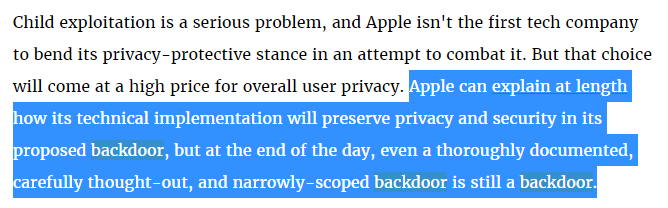

First, don't be fooled by the fancy crypto and the flashy privacy claims. This is a backdoor to encryption. Yes, folks, a privacy-preserving backdoor is no less of a backdoor. We are back to the crypto wars. @EFF goes into this at length in their analysis eff.org/deeplinks/2021…

It can be said louder, but not more clearly

https://twitter.com/Snowden/status/1423469854347169798

Why do we care about backdoors? They essentially break encryption: once there is a backdoor, there is no more "secure storage", "secure messaging", "secure anything". It just breaks all guarantees.

https://twitter.com/matthew_d_green/status/1423072910630080523

Second, backdoors create an expandable infrastructure that can't be monitored or technically limited

https://twitter.com/sjmurdoch/status/1423397219491852291

I don't want to re-explain what others have already said so eloquently. For instance: mitpress.mit.edu/blog/keys-unde…

But the problem with this system is that, compared to traditional backdoors, it opens a bigger Pandora's box. Automated scanning is far from perfect, and that can result in illegitimate accusations or enable targeting

https://twitter.com/matthew_d_green/status/1423079847803428866

Matt reiterates here that the problem is not privacy, but the lack of accountability, the lack of technical barriers to expansion, and the lack of analysis (barely any acknowledgment) of the potential errors

https://twitter.com/matthew_d_green/status/1423378285468209153

Another hidden gem in this story is Apple deciding it is OK to have artificial intelligence mediating the relationship between children and their parents. What could go wrong if AI decides which messages from a kid should be revealed to their parents? Some examples:

https://twitter.com/KendraSerra/status/1423365222841135114

The above from @KendraSerra is just a taste of how little thought has been given to how this technology may affect minorities and the damage it can bring to social relationships.

https://twitter.com/evacide/status/1423402771236102147

I have not seen anything about misuse yet, but like any other child-monitoring technology, this has great potential to become yet another tool for abusive partners. See the work from @TomRistenpart and team

ipvtechresearch.org/research


More problems. Apple is the first, but it won't be the last. If we accept such a solution, these controls will soon be all over the Internet, and privacy-preserving surveillance and control will become the norm and expand in scope until we all live in 1984

https://twitter.com/SarahJamieLewis/status/1423403656733290496

Bottom line: Apple's deployment must not happen. Everyone should raise their voice against it.

What is at stake is all the privacy and freedom we have gained over the last decades.

Let's not lose them now, in the name of privacy.

