I would like to see a thread on the problem of CSAM that doesn’t use automated CSAM reporting tools as the metric to show that there’s a problem.

https://twitter.com/pwnallthethings/status/1423773684272144384

I’m not denying that there’s a CSAM problem in the sense that there is a certain small population of users who promote this terrible stuff, and that there is awful abuse that drives it. But when we say there’s a “problem”, we’re implying it’s getting rapidly worse.

The actual truth here is that we have no idea how bad the underlying problem is. What we have are increasingly powerful automated tools that detect the stuff. As those tools get better, they generate overwhelming numbers of reports.

The overwhelming number of reports is great for tech companies because it lets them say they’re doing something (and close a few easily replaced accounts), but it overwhelms law enforcement’s very limited capacity to deal with the problem.

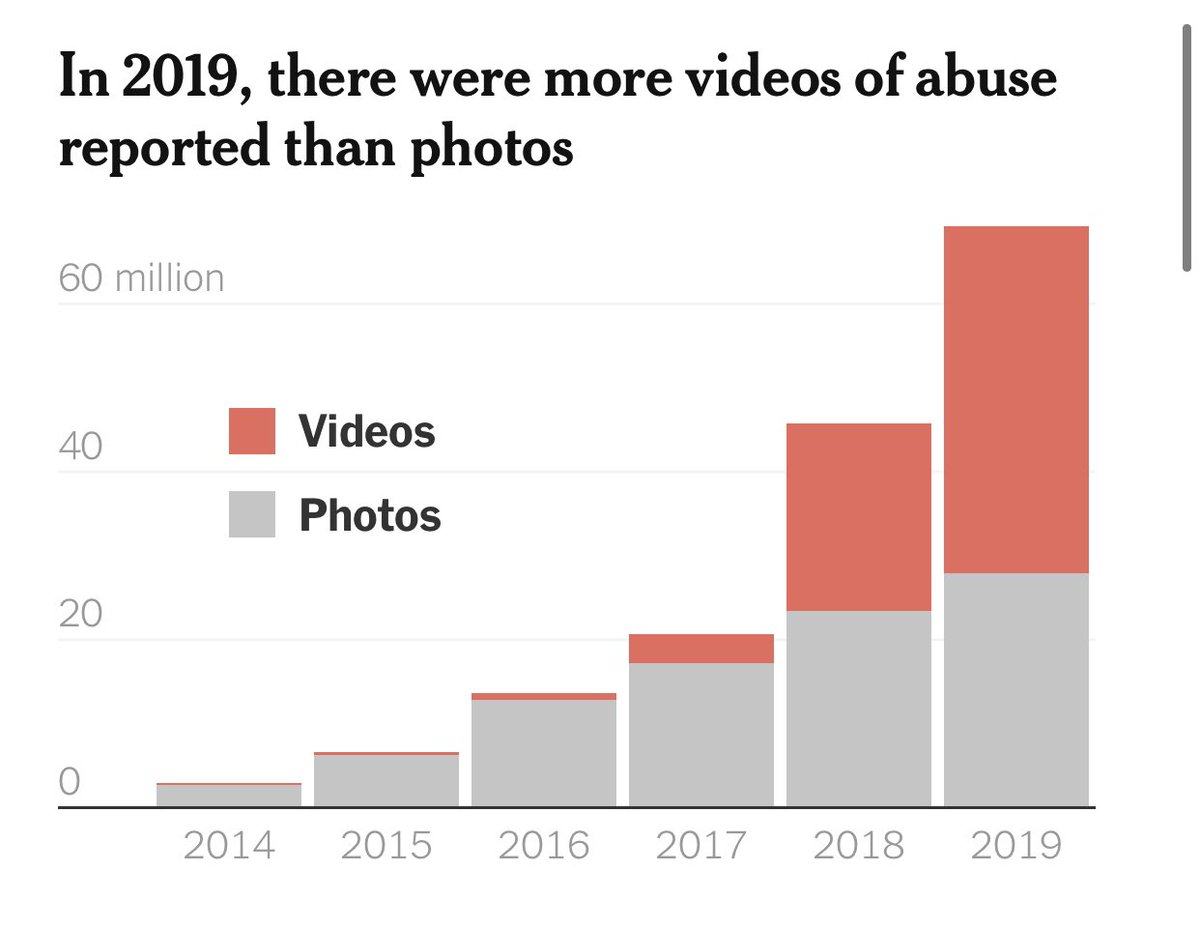

It also creates a dynamic where people can say “look at these millions of automated reports, the problem must be out of control and getting worse.” And then tech companies build more automated scanning systems.

At the root of every story on the worsening problem of CSAM, you’ll find someone pointing to increasing numbers of automated detection reports. There is never, ever any data-driven discussion of whether these are cause or effect — or if these reports really help.

After Apple turns on its photo scanning for a billion devices, the number of CSAM reports is going to explode. To staggering numbers. Just based on projecting results on previous platforms to Apple’s customer base.

The CSAM “problem” won’t be any worse than it was the day before Apple ships this update. But it will be apparently worse, in the same way that these graphs from the NYT show it getting apparently worse.

So I would ask people to think hard about whether they have any hard evidence of a worsening problem, before they handwave towards high reporting numbers and move on to the *important* point they want to make.

And moreover, I would ask whether dumping another few tens of millions of potentially noisy reports into the system (Facebook estimates 75% are “non-malicious”) is going to make law enforcement more effective or less.

And as a note, I want to be clear that “X million reports” usually means “X million photos or videos”. It does not mean X million people. The number of people doing this stuff is as small as you would think it is; it isn’t like COVID, where it spreads through the population.

The fact that a small number of criminals continue to operate and spread abusive material in increasing amounts is an *indictment* of the effectiveness of CSAM surveillance systems, not an argument for building more of them.
