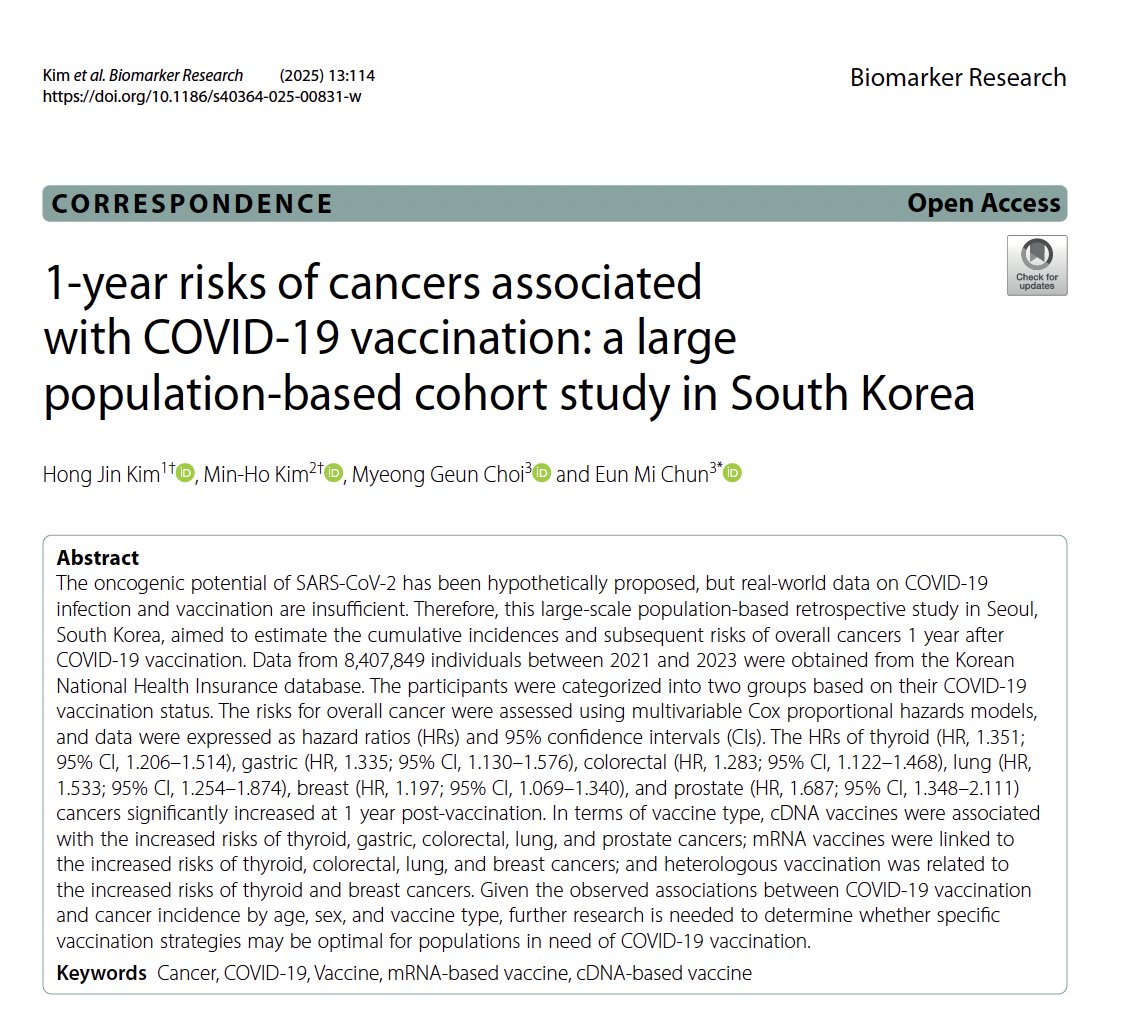

New bioRxiv preprint from the UPenn group led by John Wherry looking at immune markers 6 months after mRNA vaccination.

Partially explains waning vaccine efficacy vs. infection, more durable vaccine efficacy vs. severe disease, and is relevant to current booster policy.

biorxiv.org/content/10.110…

The paper measured immune markers (antibodies, T cells, B cells) in 61 individuals vaccinated with Pfizer or Moderna, at 6 time points from pre-vaccination to 6 months post-vaccination.

16 had been previously infected with SARS-CoV-2 and 45 were SARS-CoV-2-naïve, and the analysis was stratified by prior infection.

The key results were:

1. Neutralizing antibodies (NAbs) decreased over time
2. Memory B cells increased over time and did not wane
3. The dynamics of helper T cells (CD4) and killer T cells (CD8) were described

Antibody levels (against the spike protein and its receptor-binding domain) surged after vaccination and declined ~10x over 6 months, but remained above the baseline levels of previously infected individuals.

This reduction in circulating antibodies might explain the waning efficacy against asymptomatic and mildly symptomatic infection.

Here are the levels for all individuals. We see heterogeneity, with some having much higher levels than others.

When we say "immunity is waning" we must remember it is waning for SOME, not all.

But antibodies always wane. Long-term protection comes from memory B and T cells.

Memory B cells rapidly generate new antibodies when later exposed to the virus.

These continued to increase, not decline, over the 6 months after vaccination (blue), and at 6 months reached levels similar to those in previously infected individuals (red).

People worry about immune escape by Alpha (B.1.1.7), Beta (B.1.351), and Delta (B.1.617.2).

They measured B-cell binding as % of wild type (D614G).

B-cells bound well for all variants, with little immune escape for Alpha, the most for Beta, and intermediate levels for Delta.

Looking at individual values at 6 months, most people had strong binding (~90% for Alpha, ~75% for Delta, ~60% for Beta), but a subset clearly had less protection. Heterogeneity again.

Also note that at 6 months, vaccinated naïve individuals and previously infected individuals (vaccinated or not) had similar levels of B-cell binding.

They have other interesting results on T cells and dig deeper into various aspects of the immune response. This paper is well worth reading.

In summary, mRNA vaccines induce a strong multi-modal immune response, including high NAbs as well as memory B and T cells.

At 6 months, antibodies and T cells show the largest declines, which might help explain the rise in breakthrough infections, while memory B cells remain strong.

It is possible that "waning immunity" is not a total loss of immune protection but rather a delayed immune response: with fewer circulating NAbs, memory B cells must first generate new ones. This may also affect only a subset of people.

Here is a detailed 🧵 from one of the authors:

https://twitter.com/rishirajgoel/status/1430148915064676352