@claudiorlandi The claim that the protocol is “auditable.” This is a strong claim that is being made to consumers and politicians. What does it mean? I think it means “the pdata [first protocol message from server]” is a secure commitment to the scanning database X. 1/

@claudiorlandi In other words, even under the assumption of *a malicious server*, clients can be assured (provided they check that their pdata is what Apple intended to publish) that Apple cannot scan for items outside of a committed database. And this is (at least privately) verifiable. 2/

@claudiorlandi My first observation is that while this “auditability” property exists in Apple’s public claims, no corresponding “dishonest server” properties exist anywhere in the formal description of the protocol. Check for yourself. 3/

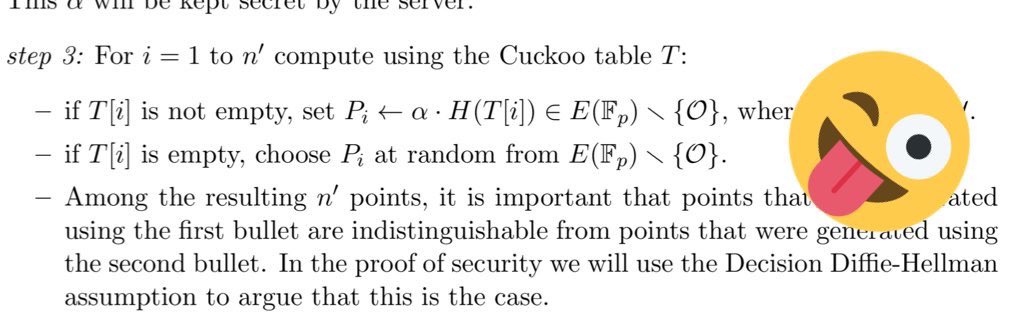

@claudiorlandi Moreover, based on the protocol as described in the paper I can’t see how it *could* apply. For example, when a slot in the Cuckoo hash table is empty the server picks a “random element.” But what if it doesn’t choose that element honestly at random? 4/

@claudiorlandi For example: what if Apple wants to include other meaningful elements into those so-called “random elements”? Under DDH one can construct structured elements that are indistinguishable from random. 5/

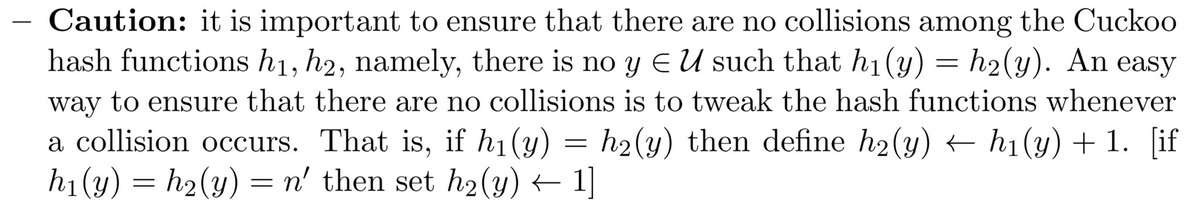
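
To make the worry about “random elements” concrete, here is a toy sketch. It is not Apple’s construction: the tiny group, the `hash_to_group` helper, and the secret exponent `alpha` are all assumptions chosen for illustration. It shows one way a server could fill an “empty” slot with a structured element that, to anyone without `alpha`, is just another group element.

```python
import hashlib
import secrets

# Toy parameters (assumption, far too small for real use): P = 2Q + 1, both prime.
# G = 4 generates the order-Q subgroup of squares modulo P.
P, Q, G = 2039, 1019, 4

def hash_to_group(item: bytes) -> int:
    """Hypothetical hash-to-group: hash to a nonzero residue, then square it."""
    h = int.from_bytes(hashlib.sha256(item).digest(), "big") % (P - 1) + 1
    return pow(h, 2, P)

def in_group(e: int) -> bool:
    """The only check an outside observer can run: subgroup membership."""
    return 0 < e < P and pow(e, Q, P) == 1

def honest_filler() -> int:
    """What the protocol asks for: a uniformly random group element."""
    return pow(G, secrets.randbelow(Q - 1) + 1, P)

def covert_filler(item: bytes, alpha: int) -> int:
    """What a malicious server could place instead: H(item)^alpha."""
    return pow(hash_to_group(item), alpha, P)

def server_recognizes(slot: int, item: bytes, alpha: int) -> bool:
    """With alpha, the server can tell that a slot encodes a specific item."""
    return slot == covert_filler(item, alpha)

alpha = secrets.randbelow(Q - 1) + 1  # server's secret exponent
slots = [honest_filler(), covert_filler(b"undisclosed item", alpha)]
print([in_group(s) for s in slots])
```

Both slots pass the membership check. In a group of cryptographic size, DDH says that telling the second slot apart from the first without `alpha` is infeasible, which is exactly why “the server picks a random element” cannot be taken on faith in a malicious-server model.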

@claudiorlandi Similarly, if pdata is to be “auditable” (meaning a secure commitment to X) then this treatment of the hash functions seems extremely casual. 6/

@claudiorlandi An actual auditability property would assume that the server is malicious and that there is (at minimum) some trusted Verifier that can take (X, pdata, W) where W is a secret witness, and verify that pdata is constructed correctly. There’s more required but that’s the basics. 7/

@claudiorlandi It goes without saying that you could probably tighten this protocol to have that property, for example by generating every random coin from a PRG on a known seed and carefully specifying how verifiably “random points” are sampled. But it’s not specified. 8/
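
A minimal sketch of what that tightening could look like, under heavy assumptions: a toy group, linear probing in place of real Cuckoo hashing, and hypothetical names (`prf`, `build_pdata`, `verify`) that appear nowhere in Apple’s paper. Every coin, including the secret exponent and each filler element, is derived from a seed that plays the role of the witness W, so a trusted Verifier holding (X, pdata, W) can simply recompute pdata and compare.

```python
import hashlib
import hmac

# Toy parameters (assumption, not secure): P = 2Q + 1, G generates the order-Q subgroup.
P, Q, G = 2039, 1019, 4

def prf(seed: bytes, label: bytes) -> int:
    """Derive one exponent in [1, Q-1] from the seed and a domain-separating label."""
    digest = hmac.new(seed, label, hashlib.sha256).digest()
    return int.from_bytes(digest, "big") % (Q - 1) + 1

def hash_to_group(item: bytes) -> int:
    """Hypothetical hash-to-group: hash to a nonzero residue, then square it."""
    h = int.from_bytes(hashlib.sha256(item).digest(), "big") % (P - 1) + 1
    return pow(h, 2, P)

def slot_of(item: bytes, n_slots: int) -> int:
    """Publicly specified slot for an item."""
    return int.from_bytes(hashlib.sha256(b"slot" + item).digest(), "big") % n_slots

def build_pdata(X: set, seed: bytes, n_slots: int) -> list:
    """Deterministically build the table from X and the seed (the witness W)."""
    if len(X) > n_slots:
        raise ValueError("table too small for the database")
    alpha = prf(seed, b"alpha")
    table = [None] * n_slots
    for item in sorted(X):  # fixed order so the result is reproducible
        i = slot_of(item, n_slots)
        while table[i] is not None:  # linear probing stands in for Cuckoo hashing
            i = (i + 1) % n_slots
        table[i] = pow(hash_to_group(item), alpha, P)
    for i in range(n_slots):
        if table[i] is None:  # filler coins come from the seed, not from the server's whim
            table[i] = pow(G, prf(seed, b"filler" + i.to_bytes(4, "big")), P)
    return table

def verify(X: set, pdata: list, seed: bytes) -> bool:
    """Trusted Verifier: recompute pdata from (X, W) and compare."""
    return build_pdata(X, seed, len(pdata)) == pdata
```

Note that the Verifier learns the seed, and with it the secret exponent, so this only gives private verifiability by a trusted party. And as tweet 7 says, there’s more required than this; the sketch only covers the “every coin is accounted for” part.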

@claudiorlandi And all of this *might* be ok if we were discussing some random academic preprint. But this is a real system that’s being deployed to a billion devices, one with very specific cryptographic failure modes. If Apple is going to make the claim, they can’t omit the proofs. //
