The biggest problem with async/await is the “colored functions” problem, brilliantly explained in this article: journal.stuffwithstuff.com/2015/02/01/wha…. It’s a never-ending problem because not everything can be async, and async is viral: once a function is async, its callers tend to become async too. It’s not a new problem, though; it has always been this way.
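
Go has no async/await, so here is a rough sketch that models the coloring with channels. It assumes a “red” (async) function is one that returns a channel delivering its result later, a stand-in for a future or `Task`. All function names are hypothetical. Note what happens to the callers: to stay non-blocking they must return a channel too, and the only way out is to block on the result.

```go
package main

import "fmt"

// fetchUserAsync is a hypothetical "red" (async) function: it returns a
// channel that will deliver the value later, like a future or Task.
func fetchUserAsync(id int) <-chan string {
	ch := make(chan string, 1)
	go func() {
		// Pretend this is slow I/O.
		ch <- fmt.Sprintf("user-%d", id)
	}()
	return ch
}

// greetAsync calls a red function. To avoid blocking its own caller it must
// also return a channel, so it becomes red too: the color spreads upward.
func greetAsync(id int) <-chan string {
	out := make(chan string, 1)
	go func() {
		name := <-fetchUserAsync(id)
		out <- "hello, " + name
	}()
	return out
}

// greetBlocking is a "blue" (sync) function calling a red one. It can only
// do so by blocking until the result arrives (sync-over-async).
func greetBlocking(id int) string {
	return "hello, " + <-fetchUserAsync(id)
}

func main() {
	fmt.Println(<-greetAsync(1))  // hello, user-1
	fmt.Println(greetBlocking(2)) // hello, user-2
}
```

The analogy is imperfect: blocking on a channel is cheap in Go because goroutines are green threads, whereas sync-over-async in an async/await runtime ties up a real thread. The sketch only shows the shape of the virality.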

JavaScript has an easier time because blocking always meant you’d freeze the browser’s UI thread. That model carried over naturally, making server-side JavaScript non-blocking by default.

Then Go chose a different direction: goroutines. They aren’t conceptually different, but the big thing they solve is the “virality” problem. Java’s Project Loom is headed in the same direction. It’s easy to say that .NET should follow, but it’s never easy…

One of the fundamental tradeoffs is interop performance. .NET is one of the platforms with excellent support for interop with the underlying OS (P/Invoke), aka FFI (foreign function interface). It has one of the best FFI systems on the market.

The moment you need to call into the underlying platform, you have to switch from your current “green” thread to one compatible with what the underlying platform expects. This is one of the big costs, and it’s why Go had to rewrite so much in Go and Go assembly rather than call into native code.

The other difficulty for .NET is that it allows pinning memory. Maybe you pinned some object to get its address or to pass it to another function. That’s a problem when you want to grow the stack dynamically in your user-mode thread implementation: if the stack moves, any pinned address into it becomes invalid.

The inability to copy the stack means you need a linked list of stack segments instead, which is a complex and inefficient implementation. Java and Go can both copy stacks because ordinary code can’t hold raw addresses the runtime doesn’t know about (without really unsafe code), so the runtime is free to move the stack and fix up pointers.

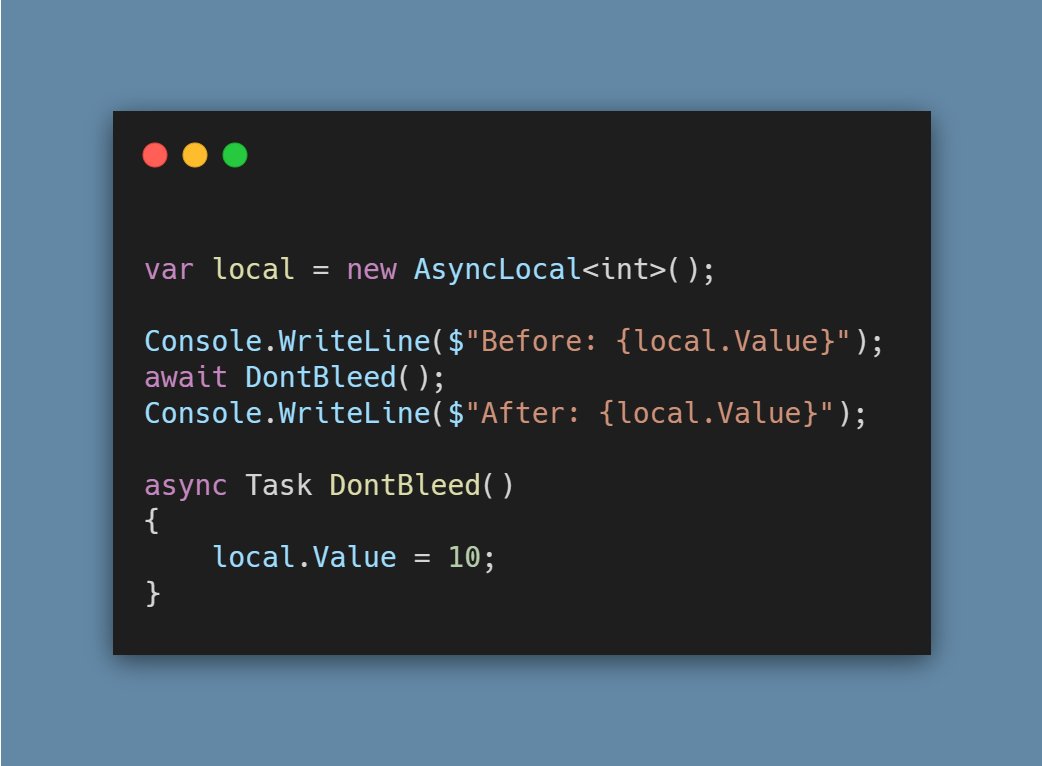
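
A toy model of the linked-list alternative, to make the tradeoff concrete. It is a hypothetical data structure, not how any real runtime lays out stack memory: frames are modeled as `int` slots, and `segmentSize` is an arbitrary assumption. When a segment fills up, a new one is linked on top instead of copying the old one, so a pointer taken earlier (the “pinned” address) stays valid.

```go
package main

import "fmt"

// segmentSize is a hypothetical number of frame slots per segment.
const segmentSize = 4

// segment is one fixed-size chunk of a toy segmented stack. A segment is
// never moved or resized, so pointers into it stay valid.
type segment struct {
	slots [segmentSize]int // frame data; a real runtime stores raw frames
	used  int
	prev  *segment // link to the older segment below this one
}

// SegmentedStack grows by linking new segments rather than copying.
type SegmentedStack struct {
	top *segment
}

// Push stores v and returns a pointer to its slot. Growth allocates a new
// segment instead of relocating old data, so the pointer remains valid.
func (s *SegmentedStack) Push(v int) *int {
	if s.top == nil || s.top.used == segmentSize {
		s.top = &segment{prev: s.top} // link, don't copy
	}
	slot := &s.top.slots[s.top.used]
	*slot = v
	s.top.used++
	return slot
}

// Pop removes and returns the most recent value, unlinking empty segments.
func (s *SegmentedStack) Pop() (int, bool) {
	if s.top == nil {
		return 0, false
	}
	s.top.used--
	v := s.top.slots[s.top.used]
	if s.top.used == 0 {
		s.top = s.top.prev
	}
	return v, true
}

// Segments counts how many segments are currently linked.
func (s *SegmentedStack) Segments() int {
	n := 0
	for seg := s.top; seg != nil; seg = seg.prev {
		n++
	}
	return n
}

func main() {
	var st SegmentedStack
	pinned := st.Push(42) // like taking the address of a pinned local
	for i := 0; i < 10; i++ {
		st.Push(i)
	}
	fmt.Println(st.Segments(), *pinned) // 3 42
}
```

The inefficiency shows up at segment boundaries: a call pattern that repeatedly pushes and pops across a boundary allocates and unlinks a segment each time, and every frame access has to go through the right segment rather than one contiguous block.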

Interestingly, async state machines in .NET form a linked list. If you squint, the state for a single async frame lives in fields on the async state machine, and continuations point to the “return address”.
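
A hand-written Go sketch of that “if you squint” view. This is a hypothetical model, not the code the C# compiler generates: each `asyncFrame` keeps the locals that survive an await as fields, and `caller` plays the role of the return address. Walking the `caller` links gives an async “stack trace”, and completing the innermost frame resumes each frame up the list in turn.

```go
package main

import "fmt"

// asyncFrame is a toy model of an async state machine. Locals that must
// survive an await become fields (here just `local`), and `caller` is the
// continuation: the frame to resume when this one finishes.
type asyncFrame struct {
	name   string
	local  int
	caller *asyncFrame
	onDone func(int) // completion callback for the outermost frame
}

// complete delivers this frame's result to its continuation. In this toy,
// each caller's code after the await adds its own local to the result.
func (f *asyncFrame) complete(result int) {
	if f.caller == nil {
		f.onDone(result)
		return
	}
	f.caller.complete(f.caller.local + result)
}

// trace walks the continuation links from the innermost frame outward,
// producing an "async stack trace" from the linked list.
func trace(f *asyncFrame) []string {
	var names []string
	for ; f != nil; f = f.caller {
		names = append(names, f.name)
	}
	return names
}

func main() {
	var result int
	outer := &asyncFrame{name: "Outer", local: 1, onDone: func(r int) { result = r }}
	middle := &asyncFrame{name: "Middle", local: 10, caller: outer}
	inner := &asyncFrame{name: "Inner", local: 100, caller: middle}

	fmt.Println(trace(inner)) // [Inner Middle Outer]

	// When Inner's awaited I/O finishes, it completes with its own value and
	// each continuation resumes the next frame up the list.
	inner.complete(inner.local)
	fmt.Println(result) // 111
}
```

Unlike a segmented stack, nothing here ever needs to be copied or grown: each frame is its own heap object, which is exactly why pinned addresses are not a problem for this model.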

Execution-wise, most of these systems work in a similar way: there’s a thread pool with a queue of work and work stealing. Java’s Loom uses one of Java’s thread pool implementations (ForkJoinPool by default), and Go has a scheduler that does similar things.

The biggest differences are in the ergonomics of using it and the “virality”. Sure, the devil is in the details, but don’t let anyone tell you that green threads fundamentally perform better than the alternative. They don’t.

Or maybe, if we wait long enough, operating system threads will become super cheap and we can remove these language-runtime-specific thread implementations 🙃

I missed the other big problem with user-mode threads! They reset the tooling ecosystem. All of the tools that understand OS threads don’t work with your threads.

Watch @pressron’s talk on this in the context of Java’s Loom. It’s good.
