1) Still having trouble logging in to Facebook, but for mundane reasons. See, apps with 2FA send an email or a text message when you ask for a password reset. But unlike machines, people are impatient, and mash that "request reset code" button multiple times.

2) As a consequence, several reset codes get sent. Because of email latency, who knows when the most recent request has been fulfilled? The most recent code in the inbox might not be the most recent one sent, and things get out of sync.
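A minimal sketch of how the codes can get out of sync. It assumes that issuing a new code invalidates all earlier ones; that is an assumption for illustration, not a claim about how Facebook actually works. The names, codes, and latencies are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResetEmail:
    sequence: int        # order in which the server issued the code (1 = first)
    code: str            # the code contained in the email
    requested_at: float  # seconds since the first button press
    latency: float       # hypothetical delivery delay in seconds

    @property
    def arrives_at(self) -> float:
        return self.requested_at + self.latency


def server_accepts(emails: list[ResetEmail], entered: str) -> bool:
    # Assumption: only the most recently issued code is valid.
    newest = max(emails, key=lambda e: e.sequence)
    return entered == newest.code


def newest_in_inbox(emails: list[ResetEmail]) -> ResetEmail:
    # The impatient user types whichever code arrived most recently.
    return max(emails, key=lambda e: e.arrives_at)


if __name__ == "__main__":
    # Three presses of "request reset code", two seconds apart; latencies are invented.
    emails = [
        ResetEmail(1, "111111", requested_at=0.0, latency=5.0),
        ResetEmail(2, "222222", requested_at=2.0, latency=9.0),
        ResetEmail(3, "333333", requested_at=4.0, latency=1.0),
    ]
    typed = newest_in_inbox(emails)
    print(typed.code, server_accepts(emails, typed.code))
```

With these numbers the second email arrives last, so the user types a code the server has already retired, and the script prints `222222 False`.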

3) This gets a little richer when Messenger notices trouble. I get an email from Facebook: "We noticed you're having trouble logging into your account. If you need help, click the button below and we'll log you in." Then there’s a one-click button that will allow me to log in to Facebook.

4) EXCEPT that button uses the protocol from *Messenger* (which uses a six-digit authorisation code), so the login to Facebook fails, because Facebook wants an eight-digit auth code! I can pretty much guarantee that the automated checks for login don’t consider such scenarios.

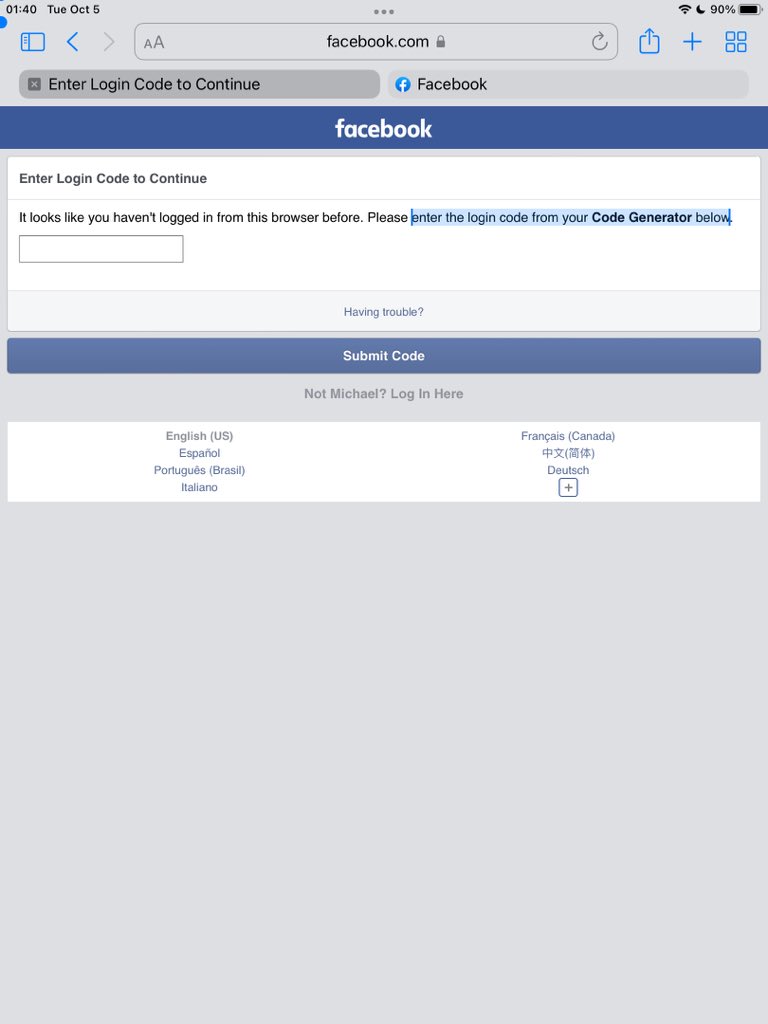

5) Well, somehow this all gets sorted, and I get a usable code FOR Facebook, FROM Facebook, and I change my password. But then, logging in via my browser (which is the user journey here), I get asked "Please enter the login code from your Code Generator below". Code Generator?!

6) (While trying to select the quoted text from the previous tweet, I find that someone has made the text on that page uncopyable. But I digress.) I can pretty much guarantee you that this scenario is not covered by automated checks. I agree that it would be silly to do that.

7) Such checks would be super brittle, annoying to develop, and worse to maintain. But I can also pretty much guarantee that this scenario (and many variations on it) is not covered by testing either, even though Facebook and Messenger are owned by the same company.

8) I would offer good odds that there's a log-in-to-Facebook group, and a log-in-to-Messenger group, and they're on different floors in different buildings, maybe even in different countries, and they almost never talk. Plus there are equivalent monitoring groups, also separated.

9) No matter what, monitoring groups are overwhelmed with data. If this affects one person in a thousand, Messenger’s user base means 1.3 million instances of the issue, with dozens of log file entries for each instance. Without knowing the scenario, try making sense of it all!

10) Testers will miss problems affecting real users when testers are steered towards confirming that everything's okay. Yes, Dear Software Folk, that’s true for your organisation too. And it’s especially true when that confirmation is obtained in instrumented and unattended ways.

11) This is NOT to say that automated checking is a Bad Thing. Automated checking, especially at low, machine-friendly levels, can be a Very Good Thing. Especially when it’s a routine part of the developers' diligent discipline—just as the original eXtreme Programmers advocated.

12) And it’s not to say that experiential and attended testing will find all the problems that elude even a highly disciplined development process. No one knows how to find all the bugs in non-trivial systems. That’s a given; it’s epistemically impossible, to say it fancy-like.

13) But here’s the thing (and it’s a software industry pandemic at the moment, so it seems): there is this myth that automated checking "saves time for exploratory (experiential, attended) testing". It's a very seductive myth, because of an observational and time-accounting bias.

14) When a buhzillion automated checks run, it’s tempting to say "Look at all that testing! And look how FAST it’s going!" And those checks are indeed blindingly fast, but that’s not all that’s happened. We're seeing a ship in a bottle, but NOT what it took to get it in.

15) To get those automated GUI checks to run *at all* can take enormous amounts of time. To adjust them when they get out of sync (because the product changes) can take just as much time. To deal with inconsistent behaviour (because of inconsistent environments)—even more time.

16) After a while, some inconsistent behaviours get written off as "flaky checks", but at significant sensemaking and interpretation costs. Then everyone gets frustrated because they realise that the tools or frameworks are problematic, so now there’s migration cost.

17) Now, it’s true that in the course of automating checks at the GUI level, some bugs will get found. Any interaction with the product, by any means, affords the chance to stumble over *some* bugs.

18) Of course, if the task is to automate the GUI, a large proportion of those bugs will pertain to problems with automating the GUI. Those are testability problems without being usability problems; no end user on the planet cares about element ids in the DOM. Well, very few.

19) A handful of the bugs found while developing or maintaining automated GUI checks will be genuine bugs. These will get fixed, whereupon the automated GUI checks are relatively unlikely to detect that bug again (especially if the developers implement lower-level checks for it).

20) Other bugs will be ignored and swept back under the rug, and then something a little more insidious will happen: the checks will be changed so that they run green. This becomes a dirty secret, but everyone's in on it, because dealing with bugs is a pain in the ass.

21) Even the most diligent developers heave a sigh when a bug is noticed and reported. Managers don’t want to face complicated decisions about how to handle bugs. Often there’s subtle yet significant social pressure for testers not to report "too many" bugs.

22) Considering the political and social pressure, it’s unsurprising that many testers will keep their heads down and keep plugging away at the programming, debugging, maintenance, interpretation and occasional migration work around automated GUI checks. Plus it’s mandated.

23) Here's the kicker, though: all the work that I described above largely disappears into the Secret Life of Testers and the Secret Life of Automated GUI Checks. It’s largely invisible; taken for granted; unobserved and unquestioned except in a handful of development shops.

24) There are very few incentives for raising these questions:

A. How much of our time are we spending on the whole effort—not just the execution time when we run the automated GUI checks, but all of the effort around the care and feeding of them?

25) B. What *important* bugs are we finding with our automated GUI checks? C. What important bugs might we miss? D. What important bugs have we missed already, and what have we learned from that? E. What kinds of testing work are we NOT doing; what is the *opportunity cost*?

26) Opportunity cost is crucial. Every moment a tester spends on programming or fixing another GUI check is a moment that she can’t spend on learning about the customers' needs and desires; developing tools to generate rich test data…

27) …collaborating with customer support people for test ideas; reviewing relevant and important regulations or standards documents; visualising and manipulating output data for consistencies and inconsistencies; following up on mysteries; investigating new tools…

28) (Tool vendors in particular are eager to sell stuff to management that "will save 80% of the testing time"—which is always a bogus claim, since they don’t know a damned thing about how *your* organisation is spending *its* testing time.)

29) (They apply the 20/80 rule, touting a tool that addresses 20% of your actual testing work, and you spend 80% of your time dealing with the tool, leaving you with 20% of your time to do the other 80% of your work. Credit to @jamesmarcusbach; we call it the Oterap Principle.)

30) But in all this, the opportunity cost really lands on *attended* *experiential* testing—that is, testing wherein the tester is present and engaged, and in which the tester’s encounter with the product is practically indistinguishable from that of the contemplated user.

31) The prevailing ideas behind automated GUI checking are that it be *unattended* and *instrumented*—some medium gets in between the product and a human being's naturalistic experience of it. That can have some value, but getting there reliably is difficult and expensive.

32) And all that, Dear Testers, is why customers encounter problems that automated GUI checks won’t reveal. It’s not that automated GUI checks *replace* testers; they *misplace* testers' focus, and they *displace* experiential testing.

33) Now, of course, I could be wrong about all of this. Maybe in your shop, there is regular scrutiny of where testing time is going. Perhaps your organisation has done a diligent job of examining the value of your automated GUI checking, and contrasted it with other approaches.

34) Maybe you do diligent scenario testing, where testers have the freedom and responsibility to explore the product with maximum agency and without a prescribed set of steps to follow. Maybe you have time to surf the customer forums and let user experiences flavour your testing.

35) Maybe you're a toolsmith with freedom and responsibility to craft all kinds of useful tools for yourself and other testers, instead of automating the GUI to reproduce steps that never found a bug in the past and won’t in the future, either.

36) Maybe you're collaborating with your developers, and they are taking on the responsibility of output checking at the unit and integration level. That’s not only reasonable but also important if they’re mandated to produce reliable code that they themselves can warrant.

37) Maybe, because of all that, you’re doing really minimal GUI checking because you don’t need much of it. But maybe you’re up to your eyeballs in it, because (again credit to @jamesmarcusbach) when it comes to technical debt, automated GUI checking is a vicious loan shark.

38) And finally, a word from our sponsor: Rapid Software Testing Explored (timing friendly for Europe, the UK, and points east) runs November 22-25. Learn about RST here rapid-software-testing.com; about RSTE here rapid-software-testing.com/rst-explored/; and register here: eventbrite.com/e/rapid-softwa…

39) Epilogue: I eventually got into Messenger first, and then into Facebook after changing my password, without ever, to my knowledge, successfully supplying the second authentication factor. That’s an investigation for another day.