Whatever the state props up rises in price. Whatever technology disrupts falls in price.

https://twitter.com/zackkanter/status/1450254433288347651

Baumol's cost disease doesn't happen by accident. Labor productivity can't rise if the state bans innovation, as it does in healthcare, education, and housing.

Try using AI to automate, say, medical imaging — and see how much the state interferes. statnews.com/2020/02/28/ai-…

The cost of medical diagnosis is not simply the cost in dollars, but also the cost in time and convenience. In many studies, AI outperforms all but the very best doctors, and does so inexpensively and quickly.

Why isn't it on every phone, then? FDA. auntminnie.com/index.aspx?sec…

Only a fraction of biomedical founders who've been obstructed by the rat's nest of red tape ever come forward, out of fear of retaliation, so multiply every story like this by 100. massdevice.com/mobile-mims-lo…

Why is the price of housing so high? Because the Fed printed $1T+ to prop up the price of mostly worthless mortgage-backed securities, and because city governments like SF heavily restrict new construction.

From 2010. A single trillion is quaint now! npr.org/sections/money…

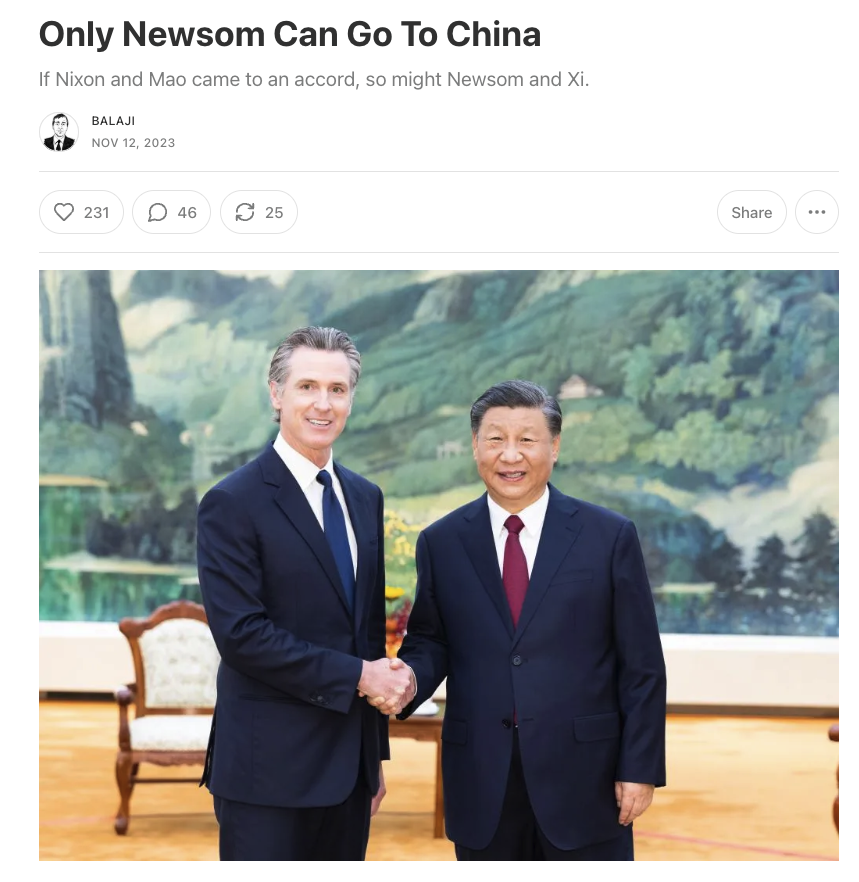

Reducing the cost of housing is not impossible. China can build infrastructure 100-1000X faster than the US because they allow innovation in construction.

Compare hours to years. Then do a financial model. That kind of improvement in time slashes rent.

https://twitter.com/balajis/status/1143621827186454528
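
To make the "do a financial model" step concrete, here is a minimal sketch of one way to set it up. It is not a model from this thread: the function names, the assumption that all capital is drawn on day one, and every number in the usage example are hypothetical. It only captures one effect, the financing cost that accrues while a building is under construction and earning no rent.

```python
def total_cost_at_completion(build_cost: float, annual_rate: float, build_years: float) -> float:
    """Build cost plus financing interest compounded over the construction period.

    Simplifying assumption: all capital is borrowed on day one, so interest
    accrues on the full build cost for the whole construction period.
    """
    return build_cost * (1 + annual_rate) ** build_years


def break_even_monthly_rent(
    build_cost: float,
    annual_rate: float,
    build_years: float,
    target_yield: float,
    units: int,
) -> float:
    """Monthly rent per unit needed to earn target_yield on the all-in cost."""
    all_in_cost = total_cost_at_completion(build_cost, annual_rate, build_years)
    return all_in_cost * target_yield / 12 / units


if __name__ == "__main__":
    # Hypothetical inputs: same building, same financing, different build times.
    common = dict(build_cost=10_000_000, annual_rate=0.08, target_yield=0.06, units=50)
    slow = break_even_monthly_rent(build_years=5.0, **common)
    fast = break_even_monthly_rent(build_years=1 / 365, **common)  # about one day
    print(f"5-year build: {slow:,.0f} per unit per month")
    print(f"1-day build:  {fast:,.0f} per unit per month")
```

In this sketch the required rent scales with `(1 + annual_rate) ** build_years`, so shortening the build lowers the break-even rent even if labor and material costs stay the same. A fuller model would also account for staged capital draws, permitting delays, and the rent forgone while the building sits unfinished.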

How about education? Loan subsidies, state-gated accreditation, inhibition of charter schools...from K-12 to higher ed, that's why kids are *still* getting on yellow school buses and attending in-person lectures.

@ATabarrok's prescient comment from 2003: marginalrevolution.com/marginalrevolu…

That was the pre-2019 status quo. But these institutions failed so hard during COVID that they've subsidized the tech alternatives. We're finally unlocking innovation in online ed, healthcare (eg mRNA vaccines), even housing (via remote work).

Automate faster than they inflate.

Love this thread? Want dozens more specific examples of how regulation holds back innovation?

Well, knock yourself out. Here's a lecture I gave in 2013 on the subject...I think it holds up reasonably well today.

github.com/ladamalina/cou…
