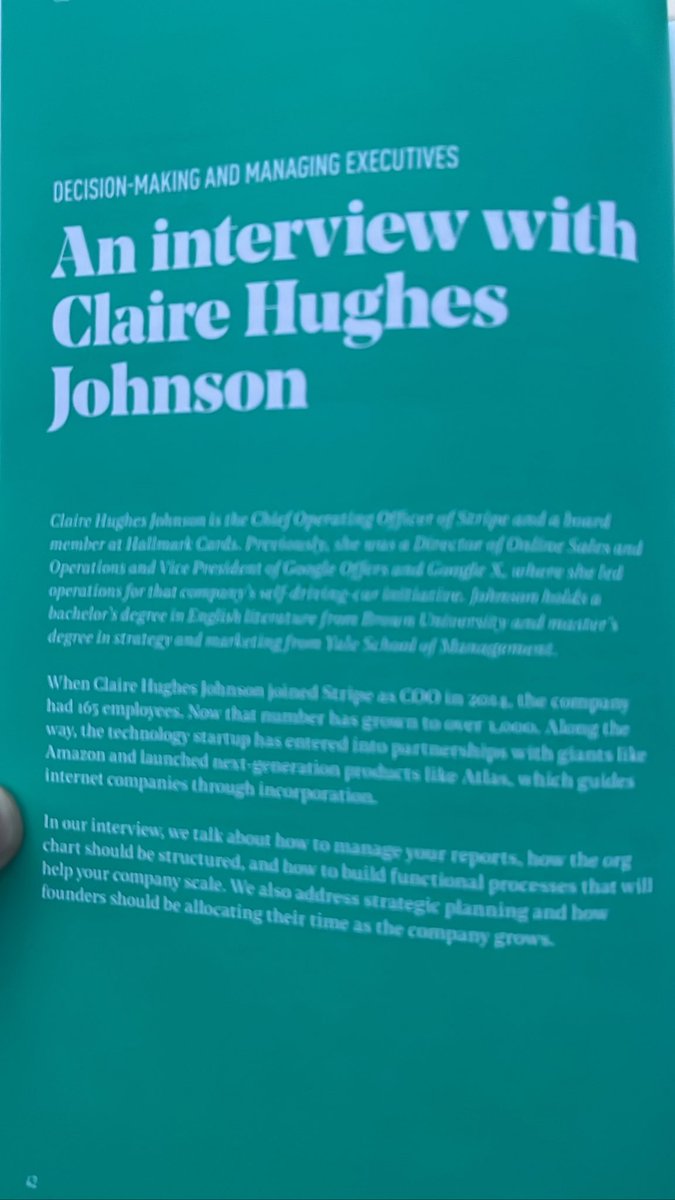

Cool to see @chughesjohnson in there!

Didn’t know that @chughesjohnson started a “working with Claire” at @stripe. Brilliant! Makes me want to update the one I did a few years ago and share it more broadly:

Interesting point here! Can’t the structure, team, and culture be built so that team members (not just leadership) are incentivized, rewarded, and empowered to stop things? Sounds healthier and more scalable! cc @julien_c @Thom_Wolf

Yes! cc @Julie0livieri

Good to see a fellow Florida convert @rabois

That’s enough for today. I’m on page 168 #digitalbookmark
