In something of a hilarious development, the ivmmeta authors have added a section titled "with GMK exclusions" to their website

Unfortunately, this still includes endless awful studies. Let's take a look at some of the papers that remain on this website

https://twitter.com/GidMK/status/1422044335076306947

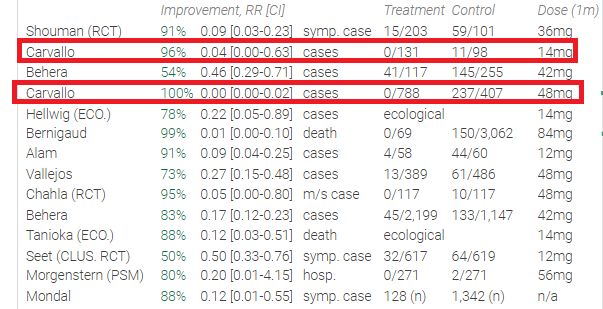

2/n Firstly, we've got studies that probably or definitely did not take place as described. I'd not include these in any analysis, certainly not an aggregate model

3/n We've got a few case series that are just a bit of a waste of time - without even controlling for age, these provide no useful evidence even as part of an aggregate model

4/n Similarly, we've got these awful analyses of mass drug administration programs in Africa, where both studies did an almost identical analysis that also ignored that these programs were halted during COVID-19. Definitely not useful as evidence

5/n (There are, of course, way more issues with both the ecological trials and case series, but let's just look at the most obvious stuff here)

6/n Then, we've got some more studies that should probably be excluded due to their low quality. We've got this study, in which there were only 34 people taking ivermectin, and thus little hope of controlling for confounding

7/n We've got quite a few observational studies that didn't control for really key confounding variables, for example this paper where the control group was clearly much sicker at baseline. Also, mistakes in the tables

8/n We've got randomized trials that have really serious errors, such as Babalola, with numerous numeric mistakes, Shouman, where the authors admitted to breaking randomization in the methods, and Okumus, which 'randomized' by alternating

9/n We've also got stuff in here that doesn't give us nearly enough information to include in a meta-analysis. In one memorable instance this includes a slide tweeted by a single, anonymous account

https://twitter.com/Covid19Crusher/status/1365420061859717124?s=20

10/n If you were to ask which trials I would definitely not include in any meta-analysis due to extremely low quality, serious issues with bias, or potential fabrication, you'd end up with this

11/n But even beyond this, there's so much weirdness in this website. For example, the authors take studies where there's a tiny relative risk difference between the groups for the reported outcome, but use the inverse outcome to show a tiny point estimate (i.e. a huge apparent benefit)

12/n For instance, in this study 108/110 people in the ivermectin group were discharged compared to 124/144 in the control, or a 14% increase in the relative likelihood of being discharged if you took ivermectin

13/n Instead, based on a pretty dubious logistic regression in the initial paper, the authors have taken the ratio of NOT being discharged, which has the impact of massively exaggerating the effect, from a 14% to an 87% benefit
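To make the arithmetic concrete, here's a quick sketch of both framings using only the raw counts quoted above (108/110 vs 124/144). This is not the logistic regression from the paper or whatever ivmmeta actually ran; the `risk_ratio` helper and variable names are just illustrative.

```python
def risk_ratio(events_a, total_a, events_b, total_b):
    """Ratio of the event proportion in group A to that in group B."""
    return (events_a / total_a) / (events_b / total_b)

# Raw counts quoted in the thread
ivm_discharged, ivm_total = 108, 110
ctl_discharged, ctl_total = 124, 144

# Framing 1: relative likelihood of being discharged
rr_discharged = risk_ratio(ivm_discharged, ivm_total,
                           ctl_discharged, ctl_total)

# Framing 2: relative likelihood of NOT being discharged (same data, flipped)
rr_not_discharged = risk_ratio(ivm_total - ivm_discharged, ivm_total,
                               ctl_total - ctl_discharged, ctl_total)

print(f"RR of discharge:     {rr_discharged:.2f} "
      f"({(rr_discharged - 1) * 100:.0f}% increase)")
print(f"RR of non-discharge: {rr_not_discharged:.2f} "
      f"({(1 - rr_not_discharged) * 100:.0f}% reduction)")
# RR of discharge:     1.14 (14% increase)
# RR of non-discharge: 0.13 (87% reduction)
```

Same 254 patients, same 22 non-discharges, but flipping which outcome you count turns a 14% relative difference into an 87% one.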

14/n And then, the numerous examples where the authors contradict their own methodology (always in favour of ivermectin, of course)

15/n The authors say that they take "most serious" events. But here's an example where they took the risk of not having a fever at day 7 instead of length of hospitalization. Why? Well, the placebo group did better on hospitalization than ivermectin

16/n So firstly, if we wanted to make ivmmeta non-useless we'd have to exclude A LOT more of these garbage 'studies'. We'd also have to run the calculations in an honest way, and apply the criteria evenly

17/n I doubt that any of this will be done, because the purpose of the website is not to look at the evidence reasonably, but to prove that ivermectin works regardless of what the evidence shows 🤷‍♂️
