Can you detect COVID-19 using Machine Learning? 🤔

You have an X-ray or CT scan and the task is to detect if the patient has COVID-19 or not. Sounds doable, right?

None of the 415 ML papers published on the subject in 2020 produced a model usable in clinical practice. Not a single one!

Let's see why 👇

Researchers from Cambridge reviewed all papers on the topic published between January and October 2020:

▪️ 2212 papers

▪️ 415 after initial screening

▪️ 62 chosen for detailed analysis

▪️ 0 with potential for clinical use

healthcare-in-europe.com/en/news/machin…

There are important lessons here 👇

Small datasets 🐁

Getting medical data is hard because of privacy concerns, and at the beginning of the pandemic there simply wasn't much data available.

Many papers used very small datasets, often collected at a single hospital: not enough for a meaningful evaluation.
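One way to avoid the single-hospital trap is to test on hospitals the model has never seen. Below is a minimal sketch of leave-one-hospital-out evaluation, assuming a hypothetical record format of `(image_id, hospital, label)` tuples. The review does not prescribe this exact scheme; it is just one illustration of external validation.

```python
from collections import defaultdict


def leave_one_hospital_out(records):
    """Yield (held_out_hospital, train, test) for each hospital, where the
    test set contains only images from a hospital unseen during training.
    records: list of hypothetical (image_id, hospital, label) tuples."""
    by_hospital = defaultdict(list)
    for record in records:
        by_hospital[record[1]].append(record)
    for held_out in sorted(by_hospital):
        test = by_hospital[held_out]
        # Train on every image that comes from any *other* hospital
        train = [r for h, recs in by_hospital.items() if h != held_out for r in recs]
        yield held_out, train, test


# Hypothetical data: 4 scans from 3 hospitals
records = [("a1", "A", 1), ("a2", "A", 0), ("b1", "B", 1), ("c1", "C", 0)]
for hospital, train, test in leave_one_hospital_out(records):
    print(hospital, len(train), len(test))  # A 2 2, then B 3 1, then C 3 1
```

Each fold trains on all other hospitals and tests on the held-out one, so a model that only learned one site's scanners or patient mix shows it in the scores.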

👇

Biased datasets 🧒🧑‍🦲

Some papers used a dataset that contained non-COVID images from children and COVID images from adults. These methods probably learned to distinguish children from adults... 🤷‍♂️
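A cheap sanity check for this kind of shortcut is to ask whether a single metadata field already predicts the label on its own. A minimal sketch, assuming each record is a dict with a hypothetical `label` key (1 = COVID, 0 = non-COVID) plus metadata fields such as a hypothetical `age_group`:

```python
from collections import Counter


def label_rates_by_attribute(records, attribute):
    """Fraction of COVID-positive images for each value of a metadata attribute."""
    totals = Counter(r[attribute] for r in records)
    positives = Counter(r[attribute] for r in records if r["label"] == 1)
    return {value: positives[value] / n for value, n in totals.items()}


def is_perfect_shortcut(records, attribute):
    """True if the attribute alone separates the classes: every group is
    either all COVID or all non-COVID, and both kinds of group exist."""
    rates = label_rates_by_attribute(records, attribute)
    return set(rates.values()) == {0.0, 1.0}


# Hypothetical dataset mirroring the problem above:
# all children are non-COVID, all adults are COVID
records = [
    {"age_group": "child", "label": 0},
    {"age_group": "child", "label": 0},
    {"age_group": "adult", "label": 1},
    {"age_group": "adult", "label": 1},
]
print(label_rates_by_attribute(records, "age_group"))  # {'child': 0.0, 'adult': 1.0}
print(is_perfect_shortcut(records, "age_group"))  # True
```

A perfect split is the extreme case; a strongly skewed rate per group deserves the same suspicion before any model is trained.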

👇

Training and testing on the same data ❌

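OK, you just never do that! Never! A model tested on images it has already seen can simply memorize them, so its score says nothing about how it will do on new patients.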
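With medical images, keeping the sets apart is subtler than it sounds: one patient often has several scans, so a split by image can still put the same patient in both sets. Below is a minimal sketch of a patient-level split, assuming a hypothetical record format of `(image_id, patient_id, label)` tuples.

```python
import random


def split_by_patient(records, test_fraction=0.2, seed=0):
    """Split hypothetical (image_id, patient_id, label) records so that no
    patient contributes images to both the training and the test set."""
    patients = sorted({patient_id for _, patient_id, _ in records})
    random.Random(seed).shuffle(patients)  # seeded so the split is reproducible
    n_test = max(1, round(len(patients) * test_fraction))
    test_patients = set(patients[:n_test])
    train = [r for r in records if r[1] not in test_patients]
    test = [r for r in records if r[1] in test_patients]
    # Sanity check: the two sets share no patients
    assert not {r[1] for r in train} & test_patients
    return train, test


# Hypothetical data: 20 scans from 5 patients (4 scans each)
records = [(f"img{i}", f"p{i % 5}", i % 2) for i in range(20)]
train, test = split_by_patient(records)
print(len(train), len(test))  # 16 4
```

Seeding the shuffle keeps the split reproducible, so anyone rerunning the experiment evaluates on exactly the same held-out patients.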

👇

Unbalanced datasets ⚖️

There are many more non-COVID scans than actual COVID cases, but not all papers managed to balance their datasets adequately to account for that.

Check out this thread for more details on how to deal with imbalanced data:

https://twitter.com/haltakov/status/1359643910688157696
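One common option (alongside resampling) is to weight the loss by class. The sketch below computes weights as `n_samples / (n_classes * class_count)`, the same heuristic scikit-learn uses for `class_weight="balanced"`. The label encoding (0 = non-COVID, 1 = COVID) and the counts are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count), so every
    class contributes the same total weight to a weighted loss."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical dataset: 90 non-COVID (0) and 10 COVID (1) scans
labels = [0] * 90 + [1] * 10
weights = balanced_class_weights(labels)
print(weights[0], weights[1])  # 0.5555555555555556 5.0
```

Both classes now carry the same total weight (90 × 0.556 ≈ 50 = 10 × 5.0), so the rare COVID cases are no longer drowned out; the weights would then be passed to whatever weighted loss the training framework provides.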

👇

Unclear evaluation methodology ⁉️

Many papers failed to disclose how much data their models were tested on, or important aspects of how the models work, which led to poor reproducibility and biased results.
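Part of the fix is simply reporting more. Below is a minimal sketch of an evaluation summary that always states the test set size and class counts next to the metrics. The label encoding (1 = COVID, 0 = non-COVID) and the choice of sensitivity and specificity are assumptions, not a checklist taken from the review.

```python
def evaluation_report(y_true, y_pred):
    """Binary results (1 = COVID, 0 = non-COVID) reported together with the
    size and class balance of the test set."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    n_covid, n_non_covid = tp + fn, tn + fp
    return {
        "n_test": len(pairs),
        "n_covid": n_covid,
        "n_non_covid": n_non_covid,
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / n_covid if n_covid else float("nan"),
        "specificity": tn / n_non_covid if n_non_covid else float("nan"),
    }


# A hypothetical model that always answers "non-COVID" on 90 negative / 10 positive scans
print(evaluation_report([0] * 90 + [1] * 10, [0] * 100))
```

The 90% accuracy looks respectable until the report shows that the model never detects a single COVID case; without the test set size and class counts, a reader cannot tell the difference.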

👇

The problem is in the data 💽

The biggest problem for most methods was the lack of high-quality data and of a deep understanding of the problem: many papers didn't even consult radiologists.

A high-quality and diverse dataset is more important than your fancy model!

👇

References 🗒️

Full article in Nature Machine Intelligence: nature.com/articles/s4225…

More detailed coverage: statnews.com/2021/06/02/mac…

Source for X-Ray image: bmj.com/content/370/bm…

This week I'm reposting some of my best threads from the past months, so I can focus on creating my machine learning course.

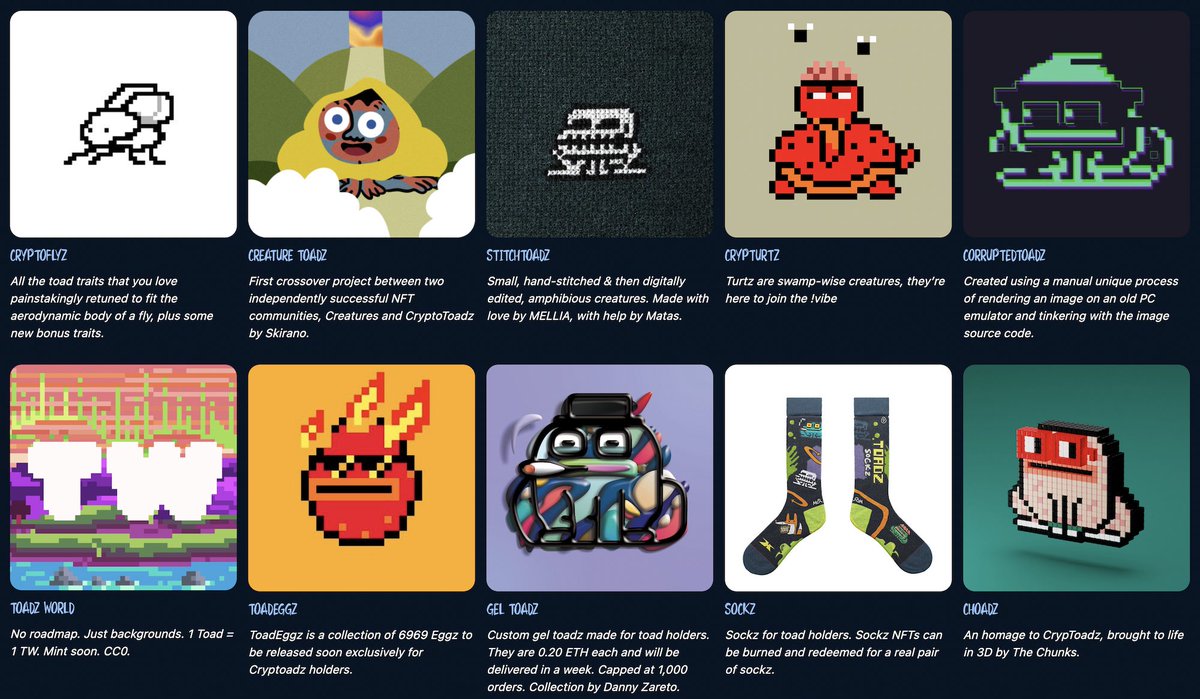

Next week I'm back with some new content on machine learning and web3, so make sure you follow me @haltakov.
