Building products to make your life simpler. Ex VP of Eng, AI, BMW self-driving.

🏞 https://t.co/9Hc8cSroul

💻 https://t.co/OROI1NAwth

🐰 https://t.co/0E3Nmvi3B5

Dimensionality Reduction

The Basis ◻️

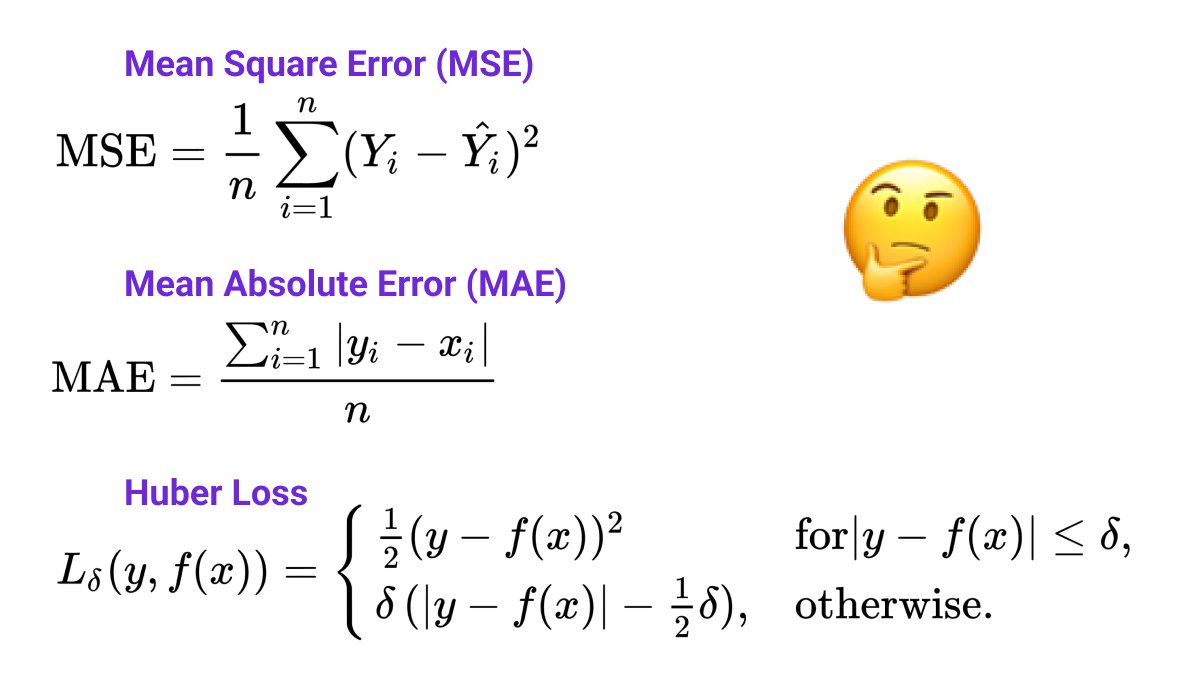

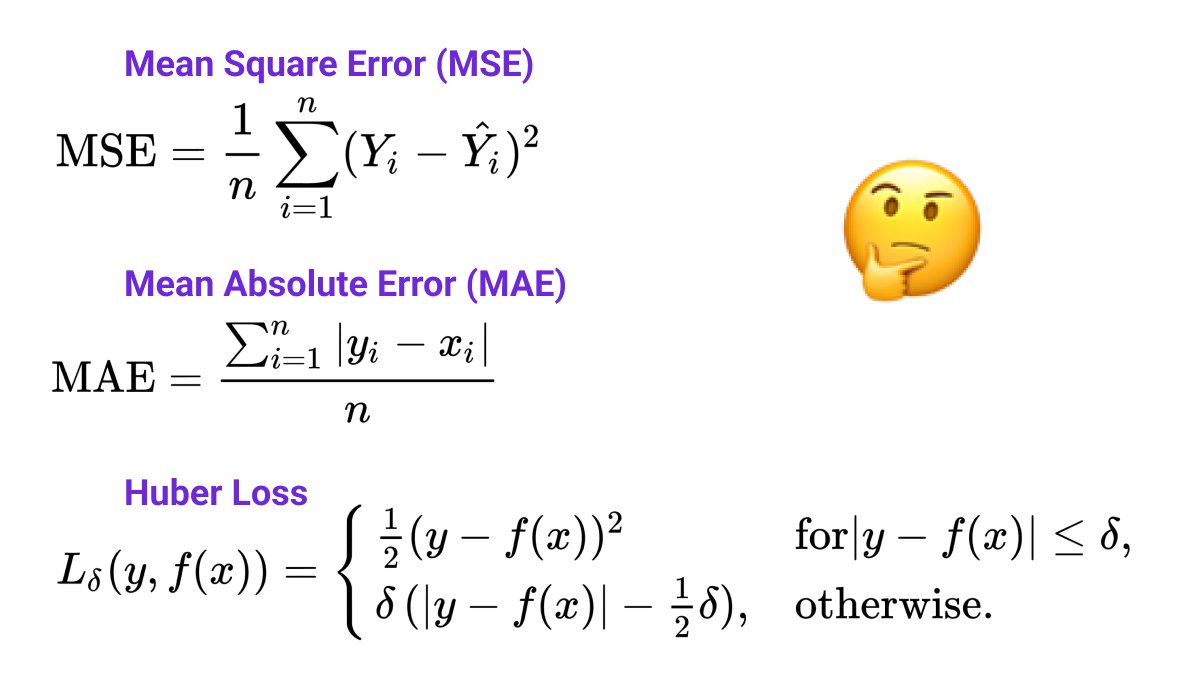

Let's first quickly recap what each of the loss functions does. After that, we can compare them and see the differences based on some examples.

Collect Data 💽

Background

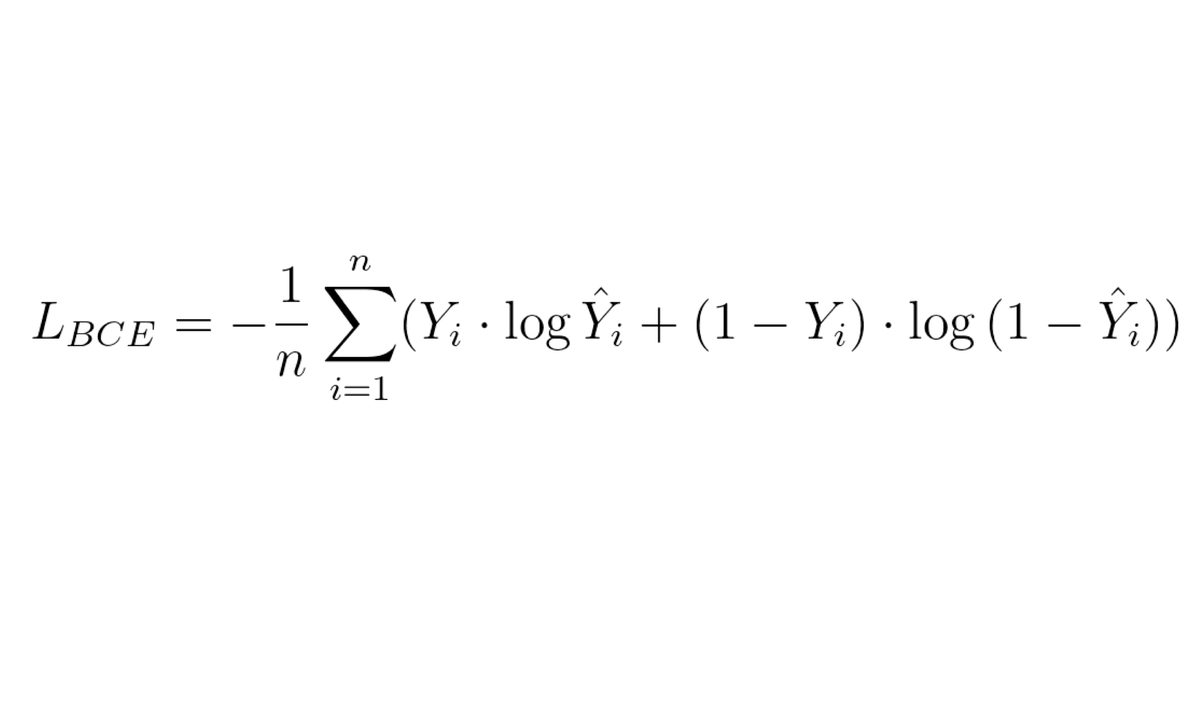

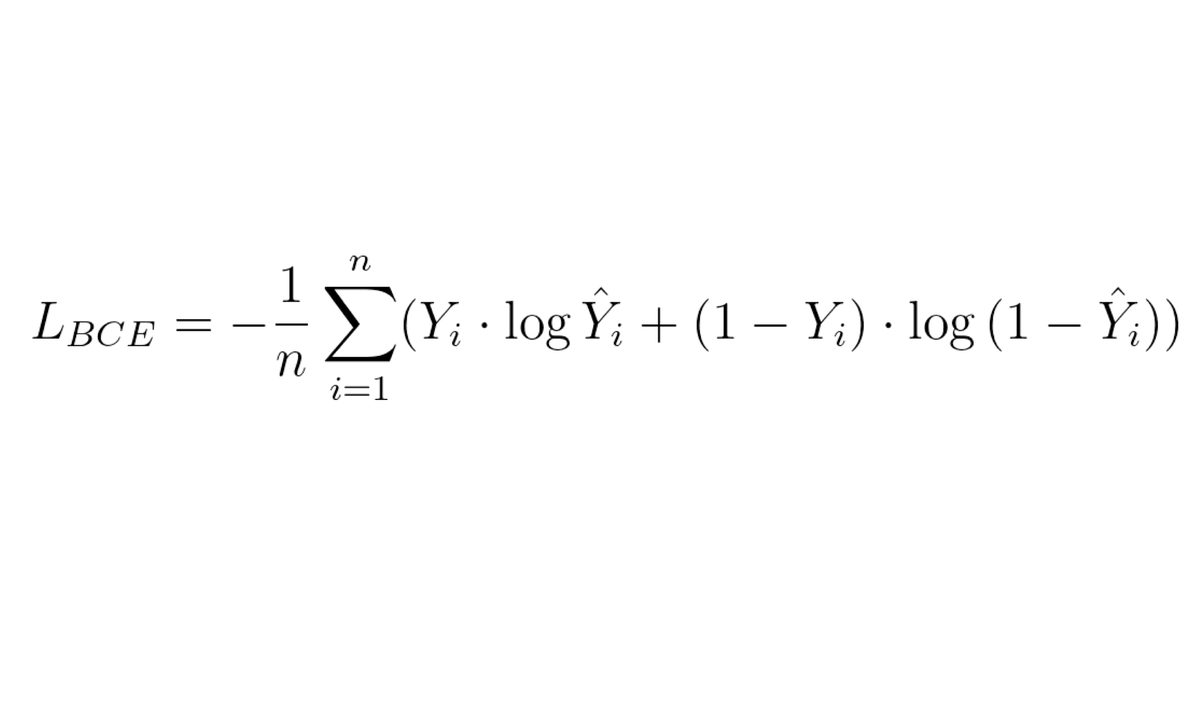

The Cross-Entropy Loss function is one of the most used losses for classification problems. It tells us how well a machine learning model classifies a dataset compared to the ground truth labels.
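
As a rough illustration of that idea, here is a minimal sketch of cross-entropy in plain Python. It assumes one-hot ground truth labels and predicted class probabilities that sum to 1; the function name `cross_entropy` and the `eps` clipping value are choices made for this example, not something taken from the thread.

```python
import math


def cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean cross-entropy between one-hot labels and predicted probabilities.

    y_true: list of one-hot label vectors (assumed format for this sketch)
    y_pred: list of predicted probability vectors, same shape as y_true
    eps:    lower bound on probabilities to avoid log(0)
    """
    total = 0.0
    for truth, pred in zip(y_true, y_pred):
        # Only the probability assigned to the true class contributes,
        # because every other entry of the one-hot vector is 0.
        total -= sum(t * math.log(max(p, eps)) for t, p in zip(truth, pred))
    return total / len(y_true)


# A confident correct prediction is penalized less than a hesitant wrong one.
labels = [[0, 1]]
good = cross_entropy(labels, [[0.1, 0.9]])
bad = cross_entropy(labels, [[0.6, 0.4]])
```

The lower the value, the closer the predicted probabilities are to the ground truth labels; a perfect prediction gives a loss of 0.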

Botto uses a popular technique to create images - VQGAN+CLIP

Last week I wrote another detailed thread on ROC curves. I recommend that you read it first if you don't know what they are. https://twitter.com/haltakov/status/1438206936680386560

SNNs use an activation function called Scaled Exponential Linear Unit (SELU) that is pretty simple to define.
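
To show how short that definition is, here is a minimal sketch of SELU in plain Python. The two constants are the commonly published values of alpha and the scale factor from the self-normalizing networks paper; the names `SELU_ALPHA`, `SELU_SCALE`, and `selu` are chosen for this example.

```python
import math

# Commonly published SELU constants (rounded to double precision).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(x):
    """Scaled Exponential Linear Unit for a single float input."""
    if x > 0:
        # Positive inputs are scaled linearly.
        return SELU_SCALE * x
    # Non-positive inputs follow a scaled exponential curve that
    # saturates at -SELU_SCALE * SELU_ALPHA for very negative x.
    return SELU_SCALE * SELU_ALPHA * (math.exp(x) - 1.0)


values = [selu(x) for x in (-2.0, 0.0, 2.0)]
```

For positive inputs the function is a line with slope slightly above 1, and for negative inputs it flattens out towards roughly -1.758.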

Artificially scarce

What does ROC mean?

@OpenAI GLIDE can also perform inpainting, which allows for some interesting uses.