Ever felt your favorite gene has *more than one function* after perturbing it in many cell contexts? Today at #MLCB2021, I'll discuss automatic genotype-phenotype inference from high-dimensional gene perturbation data using sparse approximation: arxiv.org/abs/2111.06247 [a 🧵]

This work was performed with my mentors @marinkazitnik (@HarvardDBMI), James McFarland (@CancerDepMap), and William Hahn (@broadinstitute @DanaFarber)

along with @JKwonBio, Jessica Talamas, @AshirBorah, @aviadt, @paquita, @boehmjesse

Words and genes share a correspondence: their *meanings* are resolved via context. Just as polysemic words like "apple" can refer to fruits or computers depending on the sentence, pleiotropic genes can perform function_1 or function_2 depending on what's going on in the cell.

It's widely known that word embeddings trained on large text corpora recover linear semantics (king - man + woman = queen) that model polysemy: arxiv.org/abs/1905.01896. Could we discover gene "semantics" from biological data? If so, what data and what approach would we use?

We propose using gene perturbation effect measurements for this task. By comparing phenotypes of normal cells vs. cells harboring gene knockouts (#CRISPR), we get close to the causal effect of that gene on cell function. Fitness screens across many cell contexts are now common.

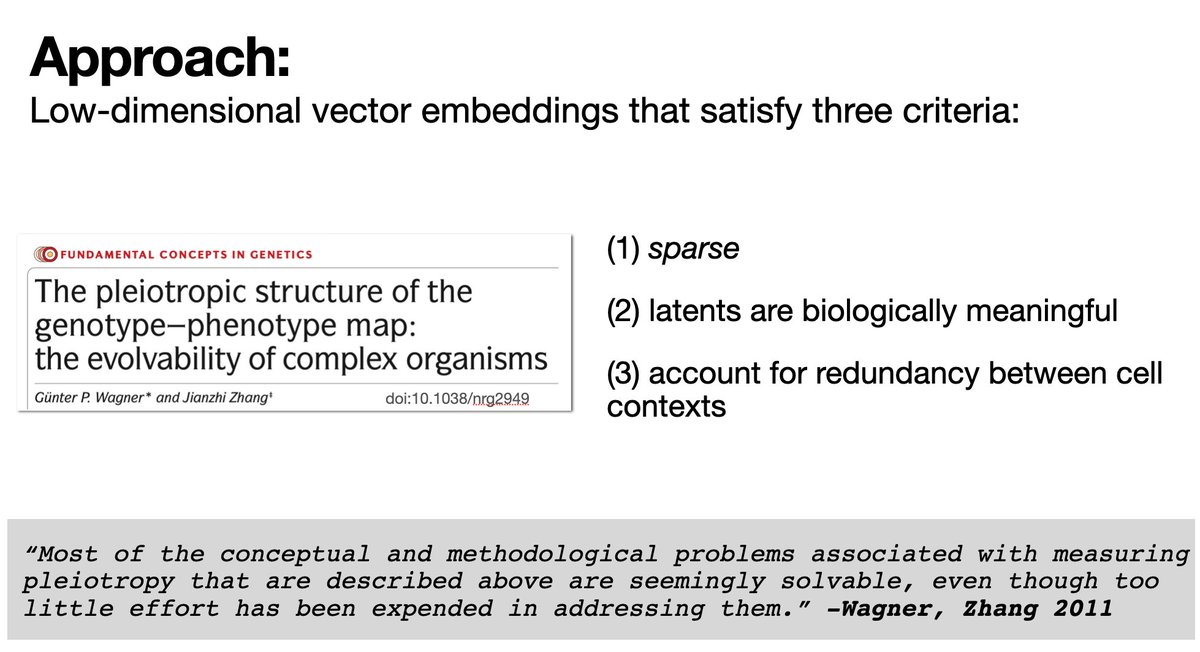

For our model, we want some vector representation for genes in terms of their effects across cell contexts. In 2011, Wagner and Zhang outlined clear criteria for measuring pleiotropy in high-d data: (1) sparse, (2) interpretable, (3) non-redundant latents doi.org/10.1038/nrg2949

These criteria led us to graph-regularized dictionary learning, blending sparse matrix factorization w/ manifold learning on a gene co-fitness graph (a la @TraverHart @anshulkundaje @MCBassik @MarcMendillo @KivancBirsoy) and cell similarity graph @evaubo @jkpritch @WJGreenleaf

The full method involves: (1) preprocessing and batch correction, (2) dictionary initialization using k-medoids, and (3) DGRDL (doi.org/gdq9vx). Numeric fitness data is the sole input, with graph priors derived empirically (cosine nearest-neighbors over rows / cols).
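To make the "graph priors derived empirically" part concrete, here's a minimal sketch of building cosine nearest-neighbor graphs over the rows (genes) and columns (cell contexts) of a fitness matrix. The function name `cosine_knn_laplacian`, the choices of `k`, and the random toy matrix are all assumptions for illustration, not the pipeline's actual code or settings.

```python
import numpy as np

def cosine_knn_laplacian(X, k=5):
    """Symmetric cosine k-NN adjacency over the rows of X, plus its graph Laplacian.

    Hypothetical helper for illustration only.
    """
    # Scale rows to unit length so dot products equal cosine similarities.
    norms = np.linalg.norm(X, axis=1, keepdims=True)
    Xn = X / np.clip(norms, 1e-12, None)
    S = Xn @ Xn.T
    np.fill_diagonal(S, -np.inf)  # a row is never its own neighbor

    n = X.shape[0]
    nbrs = np.argsort(-S, axis=1)[:, :k]  # k most similar rows per row
    A = np.zeros((n, n))
    A[np.repeat(np.arange(n), k), nbrs.ravel()] = 1.0
    A = np.maximum(A, A.T)  # keep an edge if either endpoint chose it

    L = np.diag(A.sum(axis=1)) - A  # unnormalized Laplacian
    return A, L

# Toy stand-in for a genes x cell-contexts fitness matrix (random numbers).
rng = np.random.default_rng(0)
fitness = rng.normal(size=(30, 12))

A_genes, L_genes = cosine_knn_laplacian(fitness, k=5)    # gene co-fitness graph
A_cells, L_cells = cosine_knn_laplacian(fitness.T, k=3)  # cell similarity graph
```

The two Laplacians are the kind of object a graph-regularized factorization would take as its row and column priors; how they enter the objective is left to the DGRDL reference above.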

Our approach works on real-world data. From @Molivieri_ @durocher1's genotoxic fitness screens (doi.org/gg73kt), we recovered latent biological functions reflecting gene pleiotropy. Linear "semantics" emerge from this (H2AFX - End Joining + Fanconi Anemia ≈ RAD51B!)

We also recovered interpretable latent functions from @CancerDepMap data. Gene effects are modeled as mixtures of context-specific fns (see @TraverHart w/ supervised learning! doi.org/g6r3). Joint embeddings of genes & fns enable exploration, akin to SAFE (@abarysh)

Finally, we used biological functions inferred from gene knockouts to model compounds. By projecting compound sensitivity profiles into our latent space derived from CRISPR data, we recover drug-function-gene interactions (a la @emanuelvgo @Garnettlab doi.org/gg7825)
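One plausible way to do this kind of projection is sparse coding against a fixed dictionary: hold the CRISPR-derived functions constant and ask which few of them best explain a compound's sensitivity profile. The sketch below uses a plain orthogonal matching pursuit as a stand-in for whatever solver the method actually uses, and the dictionary is a made-up orthonormal toy (a real learned dictionary would not be orthonormal). `omp`, `D`, and `compound` are hypothetical names.

```python
import numpy as np

def omp(D, y, n_nonzero):
    """Greedy orthogonal matching pursuit: explain y with at most n_nonzero columns of D."""
    residual = y.copy()
    support = []
    coef = np.zeros(D.shape[1])
    for _ in range(n_nonzero):
        # Pick the dictionary column most correlated with what is still unexplained.
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j in support:
            break
        support.append(j)
        # Refit all selected columns jointly by least squares.
        sol, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ sol
    coef[support] = sol
    return coef

# Toy dictionary: 40 cell contexts x 10 latent functions, orthonormal columns
# (a simplifying assumption so the toy recovery is exact).
rng = np.random.default_rng(0)
D, _ = np.linalg.qr(rng.normal(size=(40, 10)))

# Toy compound profile built from two functions; real profiles are measured, not constructed.
compound = 2.0 * D[:, 3] - 1.0 * D[:, 7]

loadings = omp(D, compound, n_nonzero=2)
active = np.flatnonzero(loadings)  # indices of the functions the compound loads on
```

Because genes and compounds end up as sparse loadings over the same functions, they can be compared directly in that shared space.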

Our method ('Webster' 📖) relies only on fitness data as input -- genesets are used to annotate latent variables, but not to derive them. We hope such approaches might be useful for learning representations of gene fn! (see doi.org/fbdp78 @edward_marcotte @andy_utoronto)
