1/ Can we (theoretically) see vaccination deaths in all-cause mortality? Difficult...

Let's take NL as an example.

Even with a vaxx mortality of 1:10k (which is very high), we would expect it to be buried in background mortality.

👉 Maybe visible for <30y @OS51388957 @connolly_s

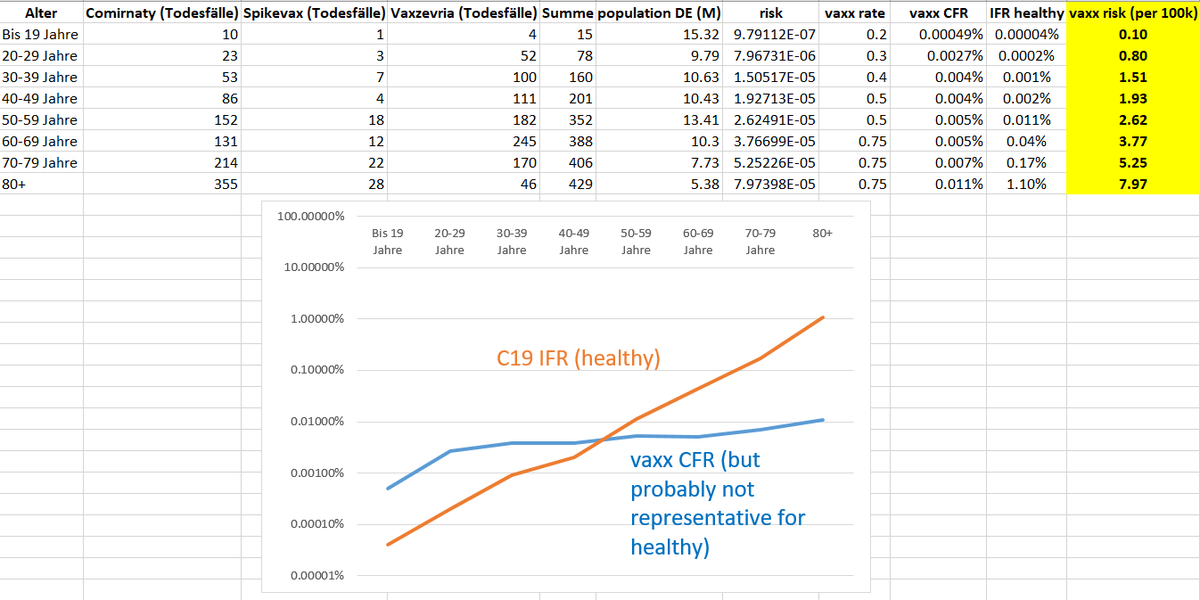

2/ The problem is that vaccinations are spread out over many weeks, which dilutes the signal.

I assumed, e.g., that 10% of an age group gets vaccinated per week. This gives the expected weekly vaxx death background (red: 1:10k vaxx CFR --> 1:100k per week; blue: 1:50k CFR --> 1:500k per week).
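The arithmetic behind these lines, written out as a minimal Python sketch: expected weekly vaxx deaths = weekly uptake × CFR. The function and parameter names are hypothetical, and it assumes uptake is spread evenly and each death is counted in the week of the shot.

```python
def weekly_vaxx_deaths_per_100k(weekly_uptake: float, cfr_denominator: float) -> float:
    """Expected vaccine-attributable deaths per 100k population per week.

    weekly_uptake: fraction of the age group vaccinated in one week (e.g. 0.10)
    cfr_denominator: N in a hypothetical vaxx CFR of 1:N (e.g. 10_000)
    """
    # Assumption: every death is attributed to the week the shot was given.
    deaths_per_person_per_week = weekly_uptake / cfr_denominator
    return deaths_per_person_per_week * 100_000


for n in (10_000, 50_000):
    rate = weekly_vaxx_deaths_per_100k(0.10, n)
    print(f"CFR 1:{n:,} at 10%/week -> {rate:.1f} per 100k per week")
```

This prints 1.0 per 100k (= 1:100k) for a 1:10k CFR and 0.2 per 100k (= 1:500k) for a 1:50k CFR.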

3/ So we could maybe see it for the under-30s. But in this age group the vaxx CFR is also likely much rarer than 1:100k, so it's difficult. Maybe in cases where a lot of people were vaccinated in the same week.

That's what @OS51388957 is hunting. He knows what he is doing. 😎🤙💪
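One rough way to make "buried in the noise" concrete (my assumption, not a method from this thread): treat baseline weekly deaths as Poisson, so their week-to-week SD is about the square root of the mean, and ask whether the expected vaxx excess exceeds ~2 SD. The population and baseline below are hypothetical placeholders, not NL figures; the Eurostat values would go there instead.

```python
import math


def excess_vs_noise(population: float, baseline_weekly_deaths: float,
                    weekly_uptake: float, cfr_denominator: float) -> float:
    """Expected vaxx excess deaths in one week, expressed in units of the
    Poisson standard deviation of the baseline weekly death count."""
    excess = population * weekly_uptake / cfr_denominator
    noise_sd = math.sqrt(baseline_weekly_deaths)  # Poisson: variance == mean
    return excess / noise_sd


# Hypothetical age group: 1,000,000 people, 10 baseline deaths per week.
POP, BASELINE = 1_000_000, 10.0
for uptake, n in [(0.10, 10_000), (0.10, 50_000), (0.50, 50_000)]:
    ratio = excess_vs_noise(POP, BASELINE, uptake, n)
    flag = "visible" if ratio > 2 else "buried in noise"
    print(f"uptake {uptake:.0%}/week, CFR 1:{n:,}: {ratio:.1f} sd -> {flag}")
```

With these made-up inputs, 1:10k at 10%/week gives about 3.2 SD, 1:50k at 10%/week only about 0.6 SD, and 1:50k becomes visible again (about 3.2 SD) only when 50% of the group is vaccinated in a single week.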

4/ Sources for creating the population-adjusted age graph for NL:

Mortality: ec.europa.eu/eurostat/datab…

Population: ec.europa.eu/eurostat/datab…

Derived 5-year bins for population:

public.tableau.com/app/profile/or…

Result:

public.tableau.com/app/profile/or…
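The binning and population adjustment could be sketched roughly like this in Python. It assumes the population table gives counts per single year of age (not verified against the Eurostat table); the helper names and the open-ended 90+ top bin are hypothetical choices, presumably made to line up with the age groups of the mortality data.

```python
from collections import defaultdict


def to_5y_bins(pop_by_age: dict[int, int], top: int = 90) -> dict[str, int]:
    """Aggregate single-year-of-age counts into 5-year bins ('0-4', '5-9', ...),
    lumping every age at or above `top` into one open-ended bin."""
    bins: dict[str, int] = defaultdict(int)
    for age, count in pop_by_age.items():
        if age >= top:
            label = f"{top}+"
        else:
            lo = (age // 5) * 5
            label = f"{lo}-{lo + 4}"
        bins[label] += count
    return dict(bins)


def rate_per_100k(deaths: int, population: int) -> float:
    """Population-adjusted mortality: deaths per 100k people in the bin."""
    return deaths / population * 100_000


toy_pop = {0: 100, 3: 200, 5: 50, 92: 10, 95: 5}  # made-up counts, not Eurostat data
print(to_5y_bins(toy_pop))  # -> {'0-4': 300, '5-9': 50, '90+': 15}
```

Dividing each bin's weekly deaths by its population puts all age groups on the same per-100k scale as the vaxx lines.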

@mr_Smith_Econ @jens_140081 @FrankfurtZack @DrSimonsSpirit @holmenkollin @freiheit_ruft @prof_freedom

5/ Correction for the 1:50k line (red): if 10% get vaxxed per week, this gives 0.2 per 100k per week, so it would be buried in noise.

That doesn't mean that 1:50k is acceptable for healthy children, who have a lower C19 IFR than this!! Let's be clear on this!! Even 1:50k --> not OK.

6/ To put things in perspective (for those not used to log scales): here is a linear y-axis version of the plot.

But again: even a vaxx CFR of 1/500k would not be OK. This is not a small number for children!!

7/ Disclaimer: the CFR examples are theoretical(!!). I'm not saying that this is what we have for a healthy person. This value remains UNKNOWN. We have no data that would allow us to infer it. In my view, the deaths reported to VAERS, PEI, Lareb and EMA mostly involve co-morbidities.

8/ Just as C19 pushes the "weak" over the edge, the Spike vaxx may do the same.

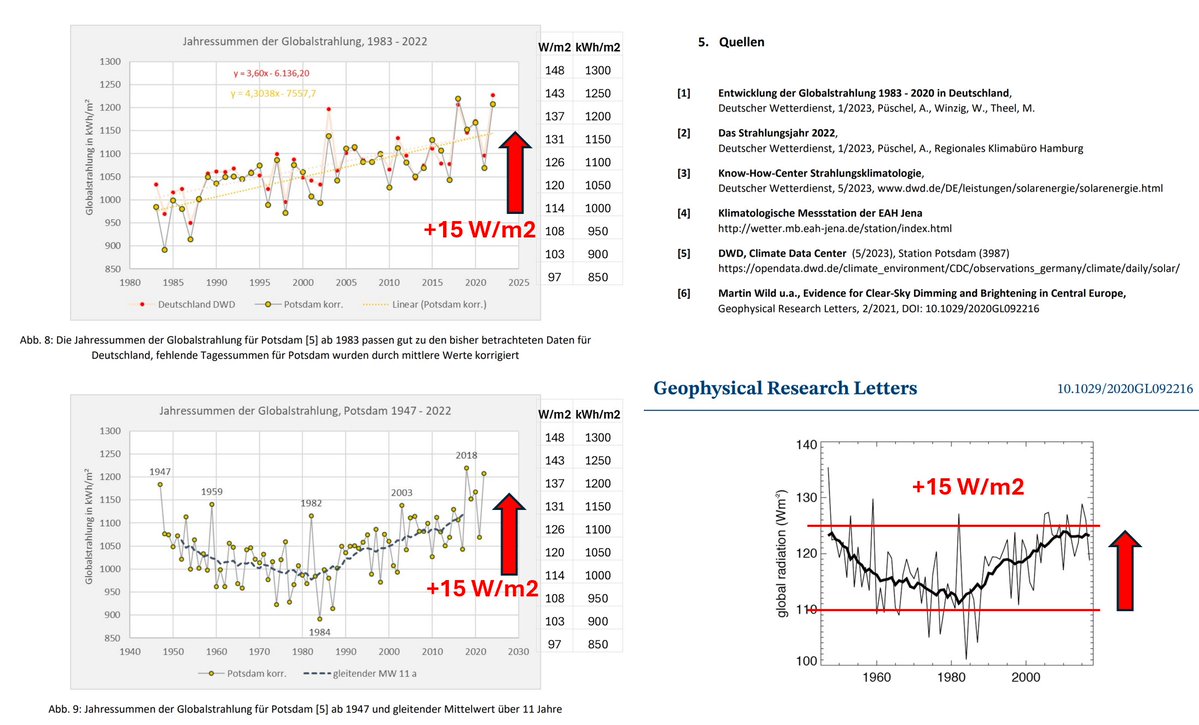

This is the vaxx CFR by age, as extracted from the PEI report of July 2021. Most likely, the vaxx CFR curve for healthy people is far below this level, but I have no data to estimate what it is.