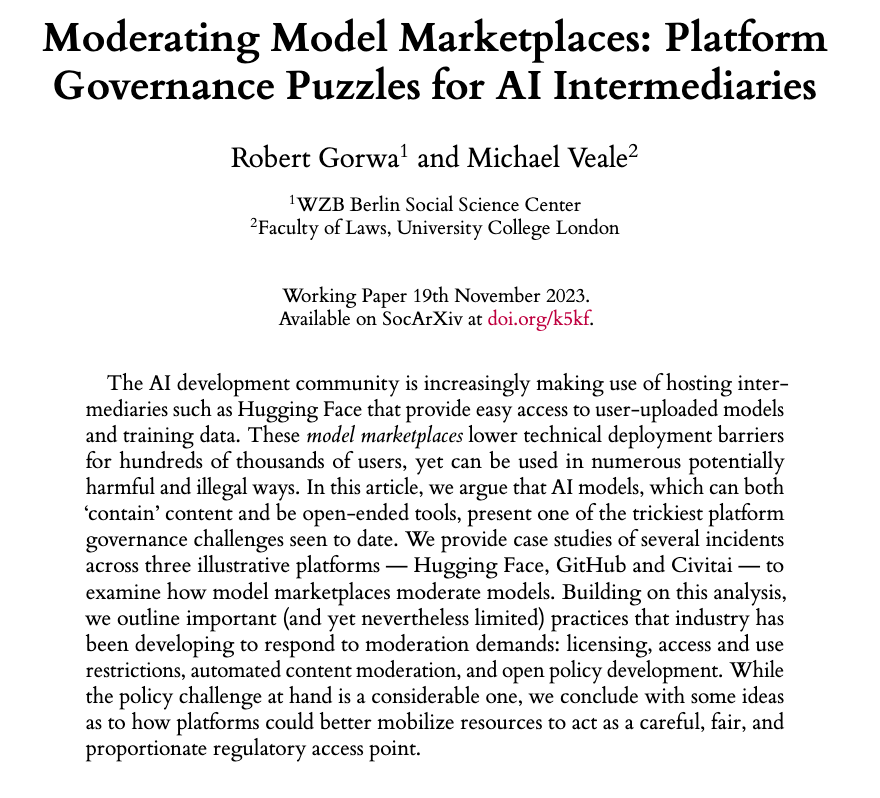

The Council presidency compromise text on the draft EU AI Act has some improvements, some big steps back, ignores some huge residual problems and gives a *giant* handout to Google, Amazon, IBM, Microsoft and similar. Thread follows. 🧵

The Good:

G1. The manipulation provisions are slightly strengthened by a weakening of intent and a consideration of reasonable likelihood. The recital also has several changes which actually seem like they have read our AIA paper, on sociotechnical systems and accumulated harms…

G3. Was unsure whether to add this into ‘good’, but I don’t really mind the changes to the scope of the act, I think ‘modelling’ is still broad and includes complex spreadsheets and things (cc @mireillemoret), w/o including ones simply automating obvious actions. Jury still out.

G4. Some changes to high risk in Annex III to include certain critical infrastructures, insurance premium setting, and to clarify that contractors and their ilk are included in law enforcement obligations.

The Bad:

B1. While the EU regulating national security *users* would be controversial, the text exempts systems developed solely for national security. NSO-equivalent firms just… fall out of the Act and its requirements, as long as they don’t sell more broadly. Even when selling abroad!
