Today, our letter was published responding to a paper in Scientific Reports that claimed to find no evidence that staying at home reduced Covid-19 deaths

This is a depressing example of how scientific error-correction fails 1/n nature.com/articles/s4159…

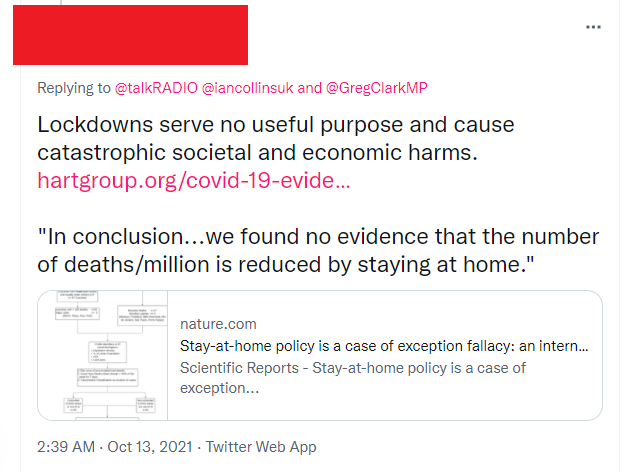

2/n The original paper came out in March, amid huge epidemics around the world, and was immediately a massive hit. Nine months on, it has been accessed nearly 400k times and has one of the highest Altmetric scores of any paper ever

3/n The paper has also been, I think it's fair to say, one of the more impactful pieces of work during the pandemic. It is still regularly cited everywhere to support the idea that government restrictions against Covid-19 don't work

4/n However, there are clear issues with this paper. When it first came out, we wrote a series of Twitter threads about it, as well as an OSF preprint

https://twitter.com/GidMK/status/1371914323061182466?s=20

https://twitter.com/RaphaelWimmer/status/1371930576450678784?s=20

5/n In addition, @goescarlos wrote a detailed mathematical critique of the paper as a preprint, which has now also been published nature.com/articles/s4159…

6/n @goescarlos proved that the model the authors use will always produce what is essentially noise. @RaphaelWimmer then simulated this and showed that, no matter how closely Covid-19 deaths were linked to staying-at-home behaviour, the model finds no correlation

7/n Indeed, even if you create a dataset where staying at home increases Covid-19 deaths in a 1:1 ratio, the authors' model spits out non-significant results

8/n There are also numerous other methodological concerns with the paper. For example, the authors uncritically use Belarusian death data, which is notoriously fake and fails the simplest of sense-checks

9/n Basically, the paper is entirely useless. It proves absolutely nothing about Covid-19 or staying at home

And yet, remember, HUGELY impactful. Used in decision-making in countries across the globe

10/n The thing is, the editors of Scientific Reports did everything right here, according to the usual academic norms. Shortly after we went public with our concerns via twitter, they posted a notice of concern on the paper

11/n The editors have been very responsive to us throughout. They have listened to the problems, had them peer-reviewed to make sure these are real issues, and generally done everything that we expect editors to do in this situation

12/n And yet, the entire process is a monumental failure

Why?

Because it all takes FAR TOO LONG

13/n The study went viral overnight. It was read by hundreds of thousands of people within a month. While the notice of concern went up quickly, it said very little and did not really dispute the main arguments of the paper

14/n This paper, which was always useless as evidence, has been used as exactly that for nearly a year while we slowly confirmed that the serious, fundamental criticisms of it were accurate

That is simply not fast enough

15/n In a pandemic, decisions are made overnight. A paper which goes viral today may end up in the hands of the Albanian head of state the next day. A formal response that takes 9+ months to arrive is simply inadequate to mitigate any harms

16/n I don't have any good solutions for this. Peer-review, flawed as it is, will always allow some terrible research through. With the new online world, that research will sometimes go viral and have severe negative impacts before we can correct it

17/n I can say, however, that if it takes the better part of a year to make any major comment on a paper that mathematically cannot provide any evidence on the subject it examines, then our system for error-checking has serious issues