Why is matrix multiplication defined the way it is?

When I first learned about it, the formula seemed too complicated and counter-intuitive! I wondered, why not just multiply elements at the same position together?

Let me explain why!

↓ A thread. ↓

1/11

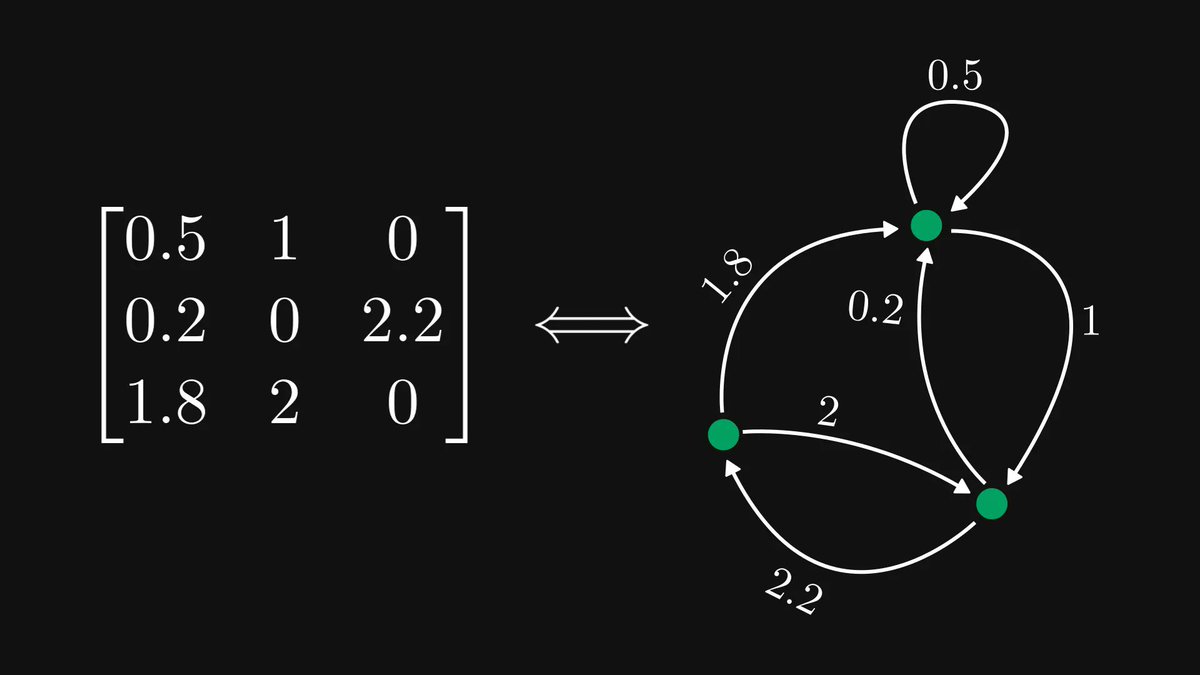

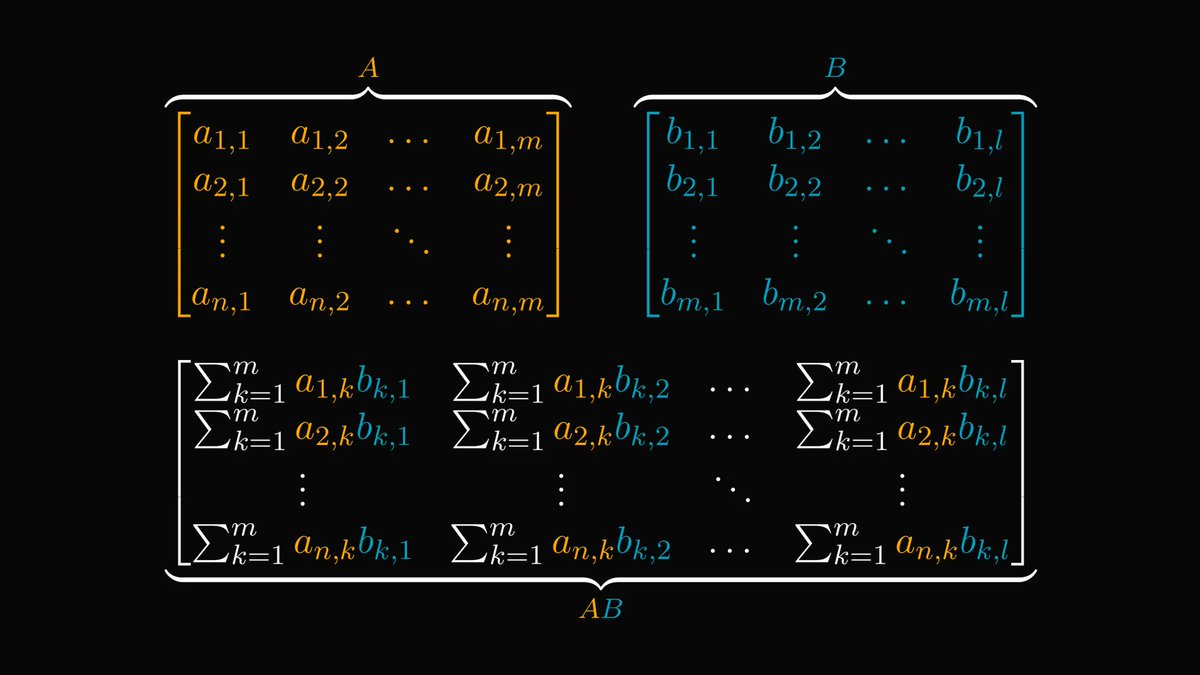

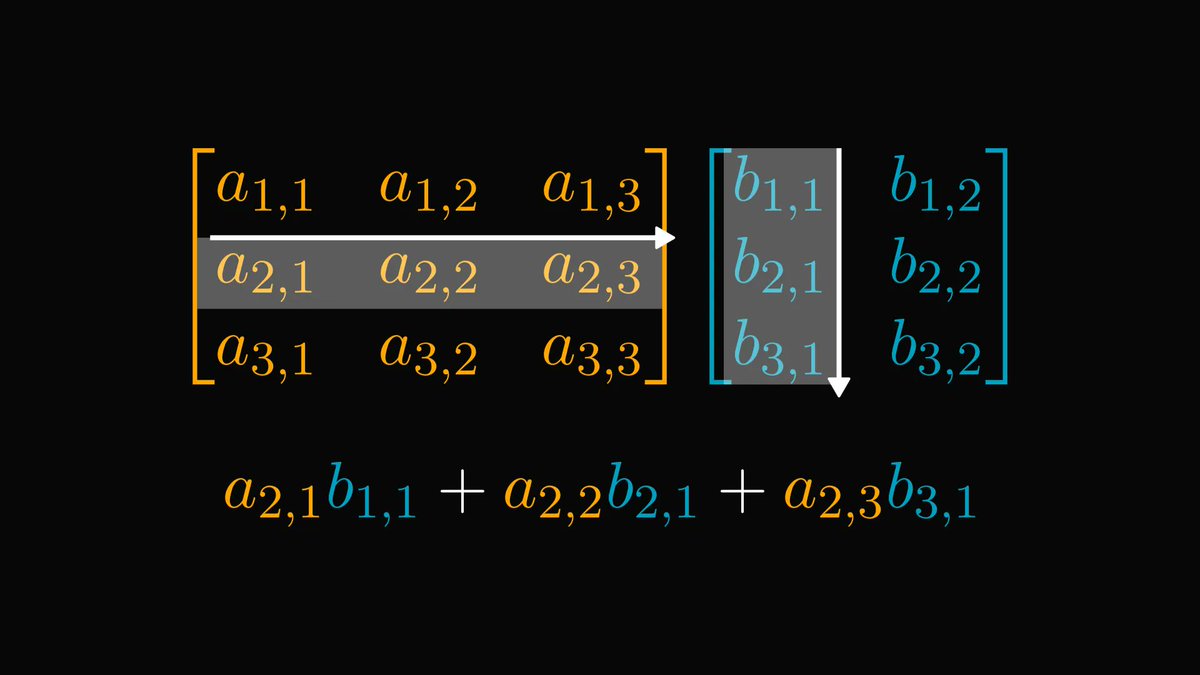

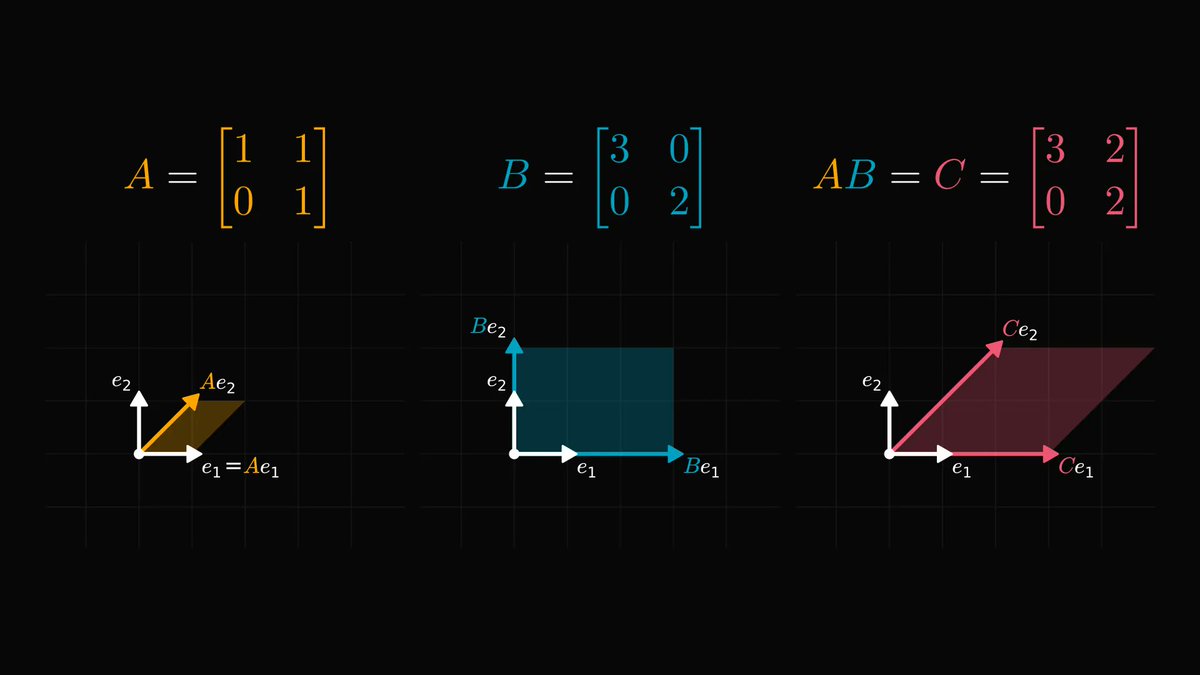

First, let's see how to make sense of matrix multiplication!

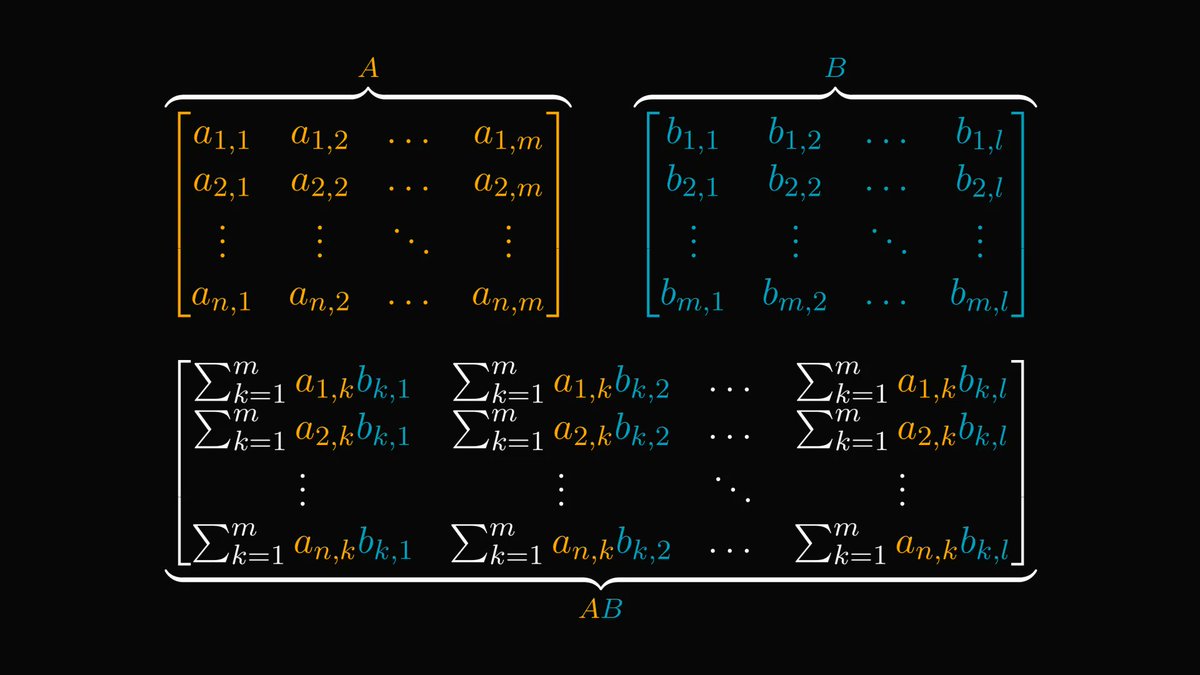

Each element of the product is calculated by multiplying a row of 𝐴 with a column of 𝐵: multiply the matching entries and add them up.

It is not obvious at all why it is done this way. 🤔

To understand, let's talk about what matrices really are!

2/11

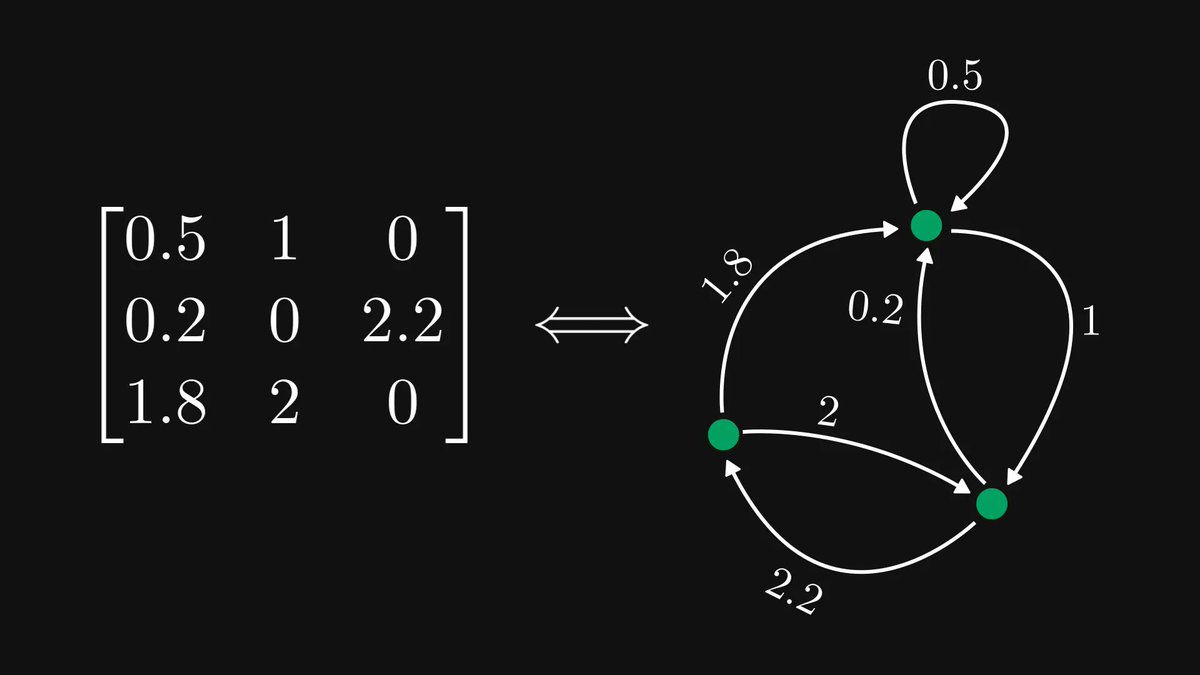

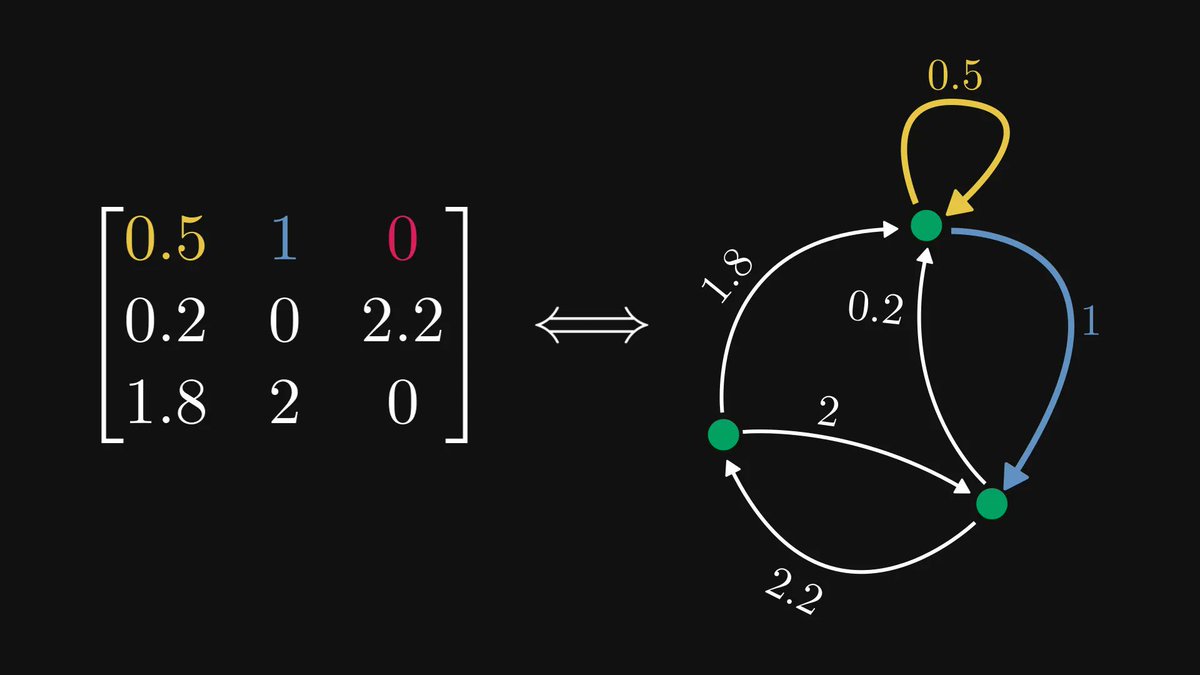

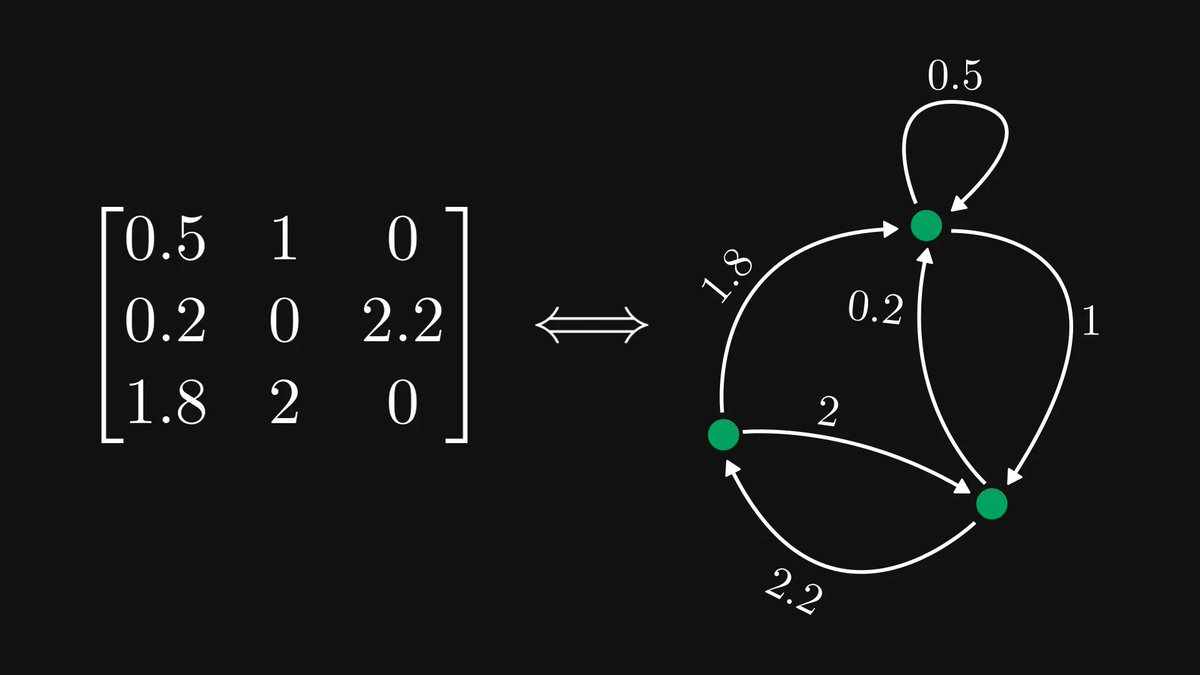

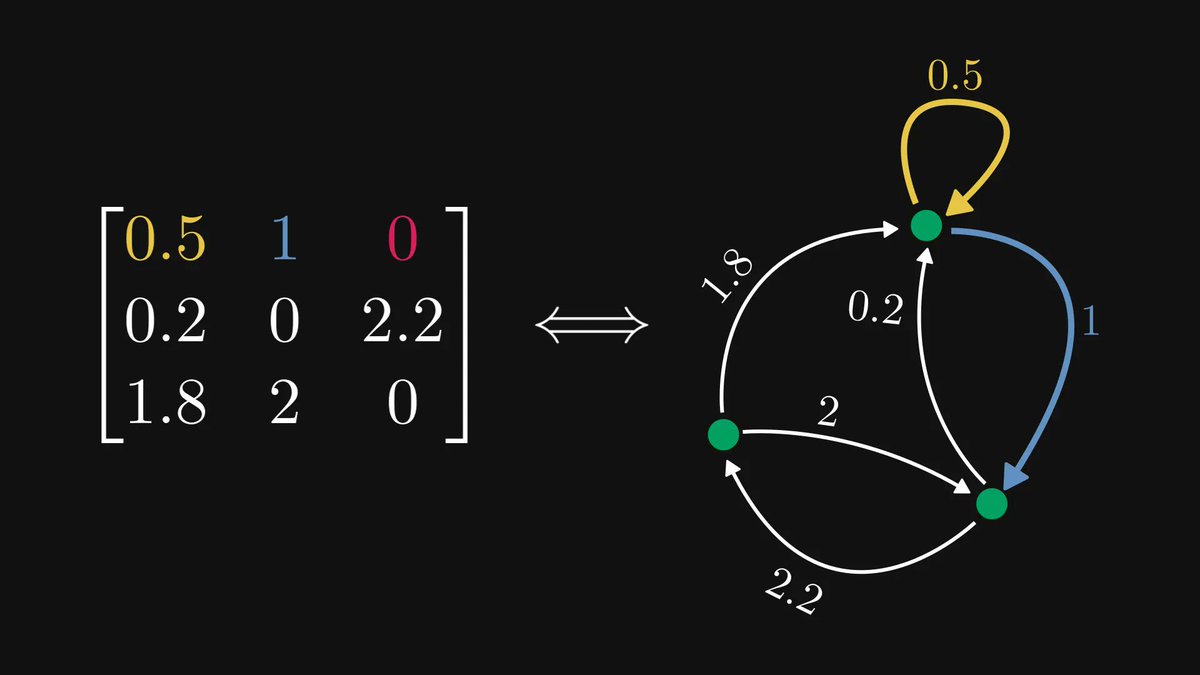
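To make the row-times-column rule concrete, here is a minimal sketch in plain Python. The helper names `matmul` and `elementwise` and the two small matrices are my own choices for illustration, not anything from the images above. It puts the real product next to the "multiply elements at the same position" idea so you can see they give different results.

```python
def matmul(A, B):
    """Matrix product of A (n x m) and B (m x p), both given as lists of rows."""
    n, m, p = len(A), len(B), len(B[0])
    assert all(len(row) == m for row in A), "columns of A must match rows of B"
    # Entry (i, j) is row i of A multiplied with column j of B, summed up.
    return [[sum(A[i][k] * B[k][j] for k in range(m)) for j in range(p)]
            for i in range(n)]


def elementwise(A, B):
    """The 'naive' product: multiply entries at the same position."""
    return [[a * b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(A, B)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]

product = matmul(A, B)     # [[19, 22], [43, 50]]
naive = elementwise(A, B)  # [[5, 12], [21, 32]]
```
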

Matrices are just representations of linear transformations: mappings between vector spaces that preserve addition and scalar multiplication.

Let's dig a bit deeper to see why matrices and linear transformations are (almost) the same!

3/11

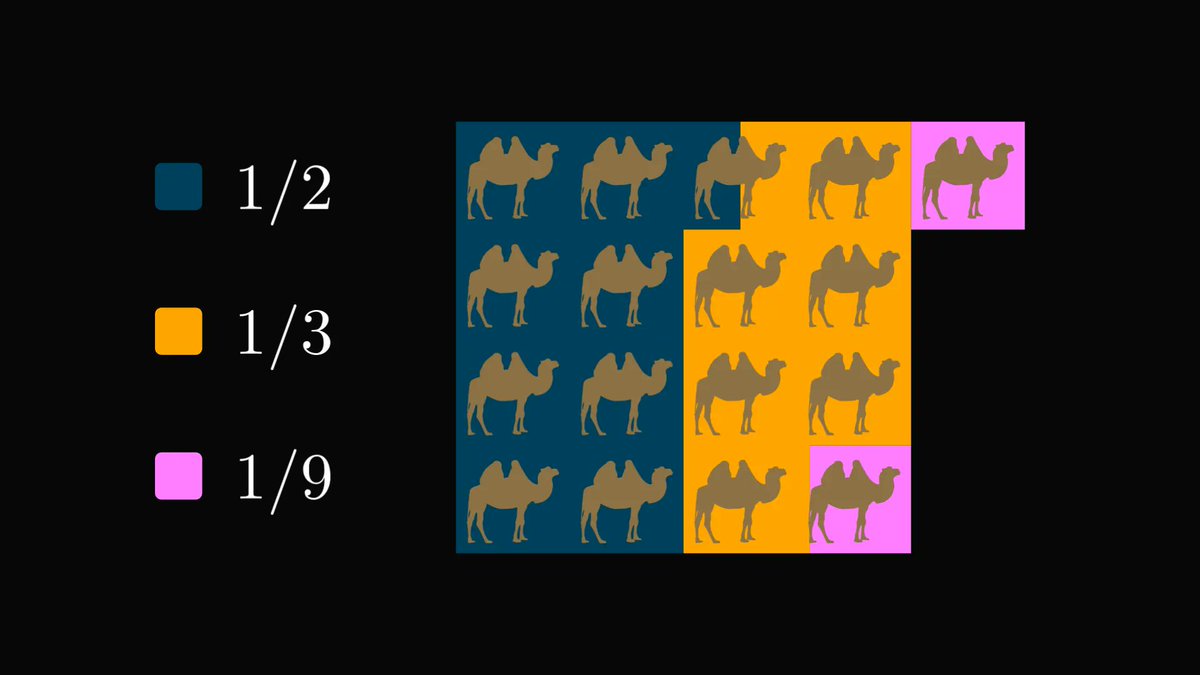
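If "preserving addition and scalar multiplication" feels abstract, a quick check in code might help. This is only a sketch: the map `f` below is a hypothetical example I picked, and testing a couple of vectors illustrates the two properties without proving them.

```python
def f(v):
    """A hypothetical linear map from R^2 to R^2, chosen for illustration."""
    x, y = v
    return (2 * x + y, x - 3 * y)


def add(u, v):
    """Add two vectors component by component."""
    return tuple(a + b for a, b in zip(u, v))


def scale(c, v):
    """Multiply a vector by the scalar c."""
    return tuple(c * a for a in v)


u, v, c = (1, 2), (3, -1), 5

# f(u + v) should equal f(u) + f(v)
additive = f(add(u, v)) == add(f(u), f(v))
# f(c * u) should equal c * f(u)
homogeneous = f(scale(c, u)) == scale(c, f(u))
```
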

The first thing to note is that every vector space has a basis: a set of vectors such that every vector can be expressed uniquely as a linear combination of them.

4/11

The simplest example is probably the standard basis in the 𝑛-dimensional real Euclidean space.

(Or, with less fancy words, in 𝐑ⁿ, where 𝐑 denotes the set of real numbers.)

5/11
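Here is a small sketch of what "expressing a vector in the standard basis" means in 𝐑³. The helpers `standard_basis` and `combine` are names I made up for this example. The point is that the coordinates of a vector are exactly its coefficients in the standard basis.

```python
def standard_basis(n):
    """Return e_1, ..., e_n: e_j has a 1 at position j and 0 elsewhere."""
    return [tuple(1 if i == j else 0 for i in range(n)) for j in range(n)]


def combine(coeffs, vectors):
    """Linear combination: coeffs[0] * vectors[0] + coeffs[1] * vectors[1] + ..."""
    dim = len(vectors[0])
    return tuple(sum(c * vec[i] for c, vec in zip(coeffs, vectors))
                 for i in range(dim))


x = (3, -1, 4)
e = standard_basis(3)  # [(1, 0, 0), (0, 1, 0), (0, 0, 1)]

# x = 3*e_1 + (-1)*e_2 + 4*e_3, so using x's own entries as coefficients rebuilds x.
rebuilt = combine(x, e)
```
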

Why is this good for us? 🤔

💡 Because a linear transformation is determined by its behavior on basis vectors! 💡

If we know the image of the basis vectors, we can calculate the image of every vector, as I show below.

6/11

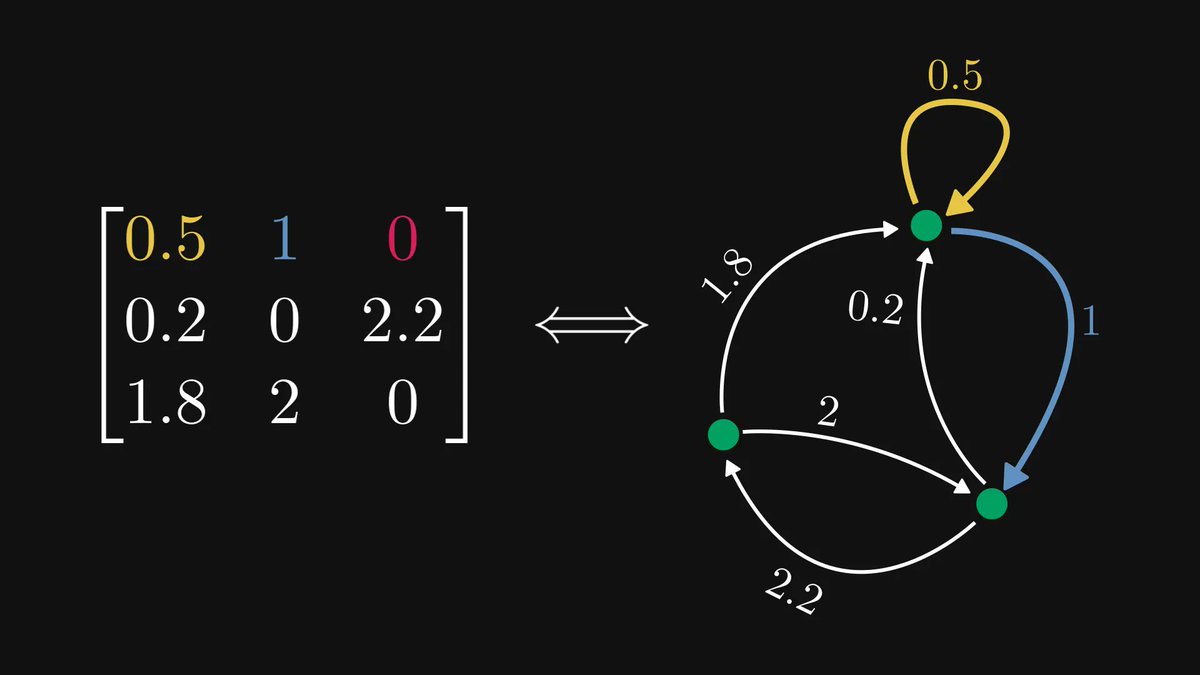
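The same idea as a sketch in code, assuming we work in 𝐑² with the standard basis. The two image vectors are hypothetical values I chose. Knowing only where the basis vectors go is enough to compute the image of any other vector.

```python
def combine(coeffs, vectors):
    """Linear combination: coeffs[0] * vectors[0] + coeffs[1] * vectors[1] + ..."""
    dim = len(vectors[0])
    return tuple(sum(c * vec[i] for c, vec in zip(coeffs, vectors))
                 for i in range(dim))


# Hypothetical images of the standard basis vectors under some linear map f:
# f(e_1) = (2, 1) and f(e_2) = (1, -3). This is all we know about f.
images = [(2, 1), (1, -3)]


def f(x):
    """Since x = x_1*e_1 + x_2*e_2, linearity gives f(x) = x_1*f(e_1) + x_2*f(e_2)."""
    return combine(x, images)


y = f((4, 1))  # 4*(2, 1) + 1*(1, -3) = (9, 1)
```
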

Because the image of a basis vector is just another vector in our vector space, it can also be expressed as a linear combination of the basis vectors.

💡 These coefficients are the elements of the transformation's matrix! 💡

(The image of the 𝑗-th basis vector gives the 𝑗-th column.)

7/11
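A sketch of how you could read off the matrix from a transformation, assuming the standard basis of 𝐑². The map `f` and the helpers `matrix_of` and `matvec` are hypothetical names for this illustration. Column 𝑗 is simply the image of the 𝑗-th basis vector.

```python
def f(v):
    """A hypothetical linear map from R^2 to R^2, chosen for illustration."""
    x, y = v
    return (2 * x + y, x - 3 * y)


def standard_basis(n):
    """Return e_1, ..., e_n as tuples."""
    return [tuple(1 if i == j else 0 for i in range(n)) for j in range(n)]


def matrix_of(f, n):
    """Matrix of f in the standard basis, as a list of rows."""
    columns = [f(e) for e in standard_basis(n)]  # column j is f(e_j)
    return [list(row) for row in zip(*columns)]  # turn the columns into rows


def matvec(M, x):
    """Multiply the matrix M (list of rows) with the vector x."""
    return tuple(sum(m * xi for m, xi in zip(row, x)) for row in M)


M = matrix_of(f, 2)          # [[2, 1], [1, -3]]
same = matvec(M, (4, 1)) == f((4, 1))
```

Multiplying by `M` gives the same result as applying `f` directly, at least for the vector tried here.
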

So, let's recap!

For any linear transformation, there is a matrix such that applying the transformation corresponds to multiplication by that matrix.

What is the equivalent of matrix multiplication in the language of linear transformations?

8/11

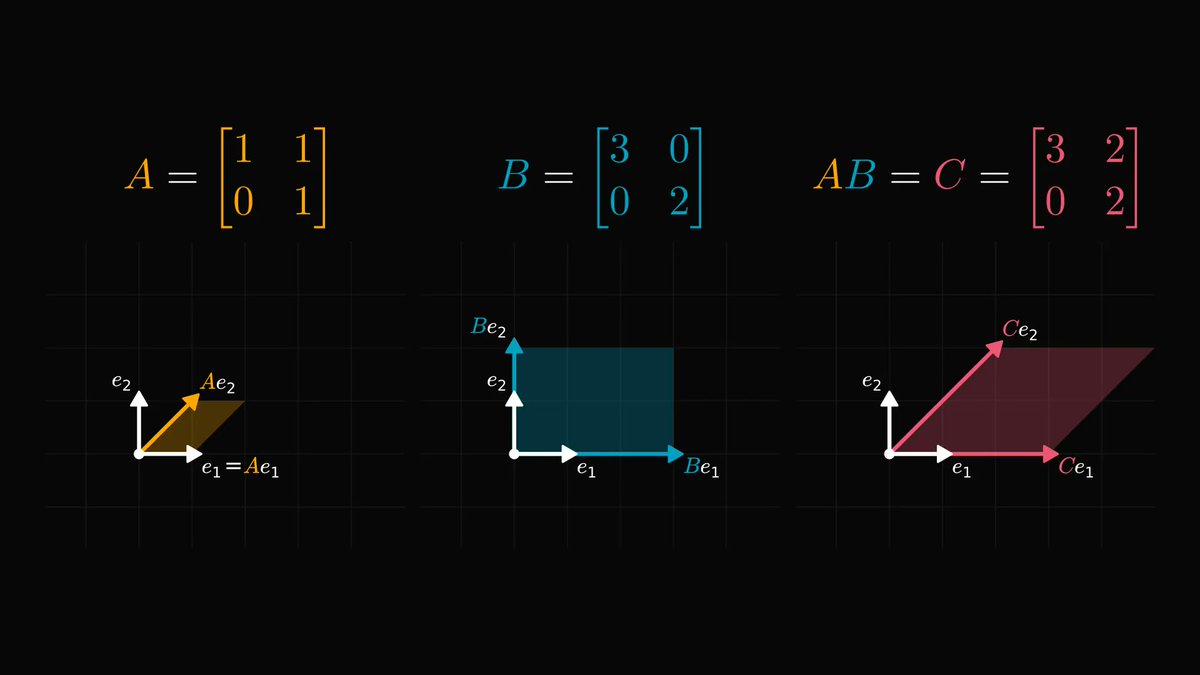

Function composition!

(Keep in mind that a linear transformation is a function, just mapping vectors to vectors.)

9/11

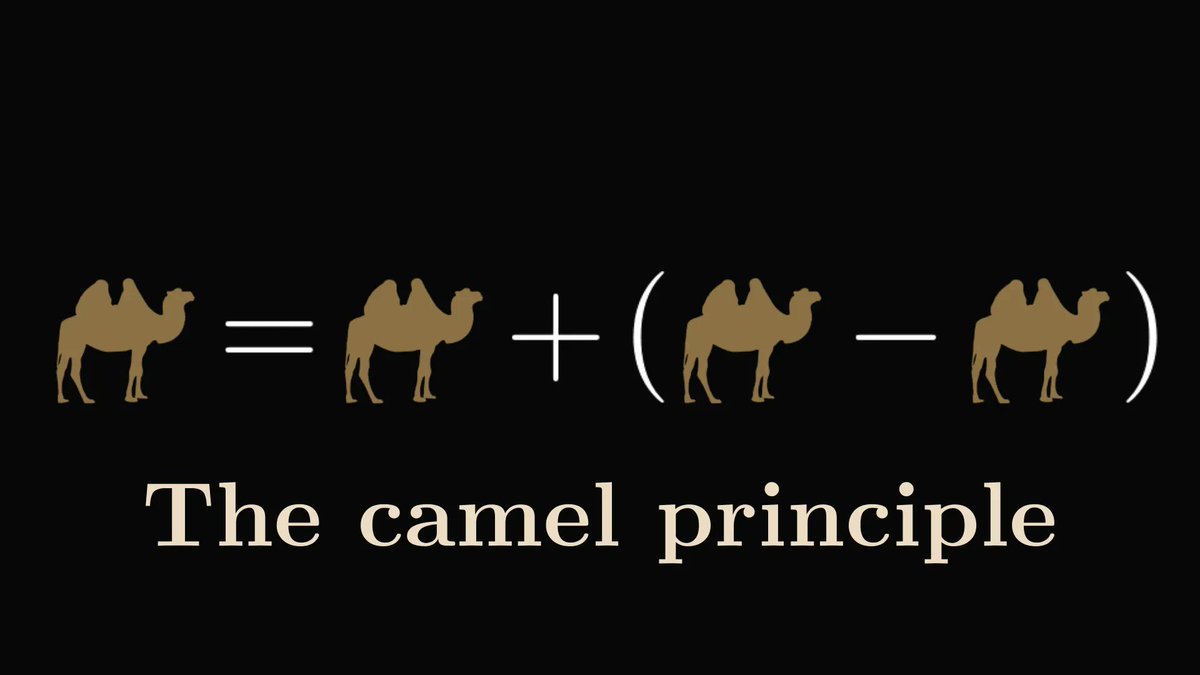

💡 Multiplication of matrices is just the composition of the corresponding linear transformations! 💡

This is why matrix multiplication is defined the way it is.

10/11
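You can check this yourself with a short sketch. The matrices `A` and `B` and all helper names are my own hypothetical choices. The code builds the matrix of the composed map "first 𝐵, then 𝐴" column by column and compares it with the row-times-column product.

```python
def matvec(M, x):
    """Multiply the matrix M (list of rows) with the vector x."""
    return tuple(sum(m * xi for m, xi in zip(row, x)) for row in M)


def matmul(A, B):
    """Row-times-column product of A and B (lists of rows)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def matrix_of(f, n):
    """Matrix of f in the standard basis: column j is f(e_j)."""
    basis = [tuple(1 if i == j else 0 for i in range(n)) for j in range(n)]
    columns = [f(e) for e in basis]
    return [list(row) for row in zip(*columns)]


A = [[2, 1], [1, -3]]
B = [[0, 1], [1, 1]]


def composed(x):
    """Apply the map of B first, then the map of A."""
    return matvec(A, matvec(B, x))


from_composition = matrix_of(composed, 2)  # [[1, 3], [-3, -2]]
from_formula = matmul(A, B)                # [[1, 3], [-3, -2]]
```

Both routes give the same matrix for this pair, which is exactly what the row-times-column formula is designed to guarantee.
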

Having a deep understanding of math will make you a better engineer. I want to help you with this, so I am writing a comprehensive book about the subject.

If you are interested in the details and beauties of math, check out the early access!

11/11

tivadardanka.com/book/
