1/n. Will there be any more profound, fundamental discoveries like Newtonian physics, Darwinism, Turing computation, QM, molecular genetics, deep learning?

Maybe -- and here are some wild guesses about what they'll be...

2/n.

Guess (1): New crypto-economic foundations of society. We might move to a society based on precise computational mechanisms:

a) smart contracts with ML oracles

b) ML algorithms that learn + aggregate our preferences/beliefs and make societal decisions/allocations based on them

3/n. We see small specialized instances today (crypto/DeFi, AI-enabled ad auctions, prediction markets, recommender systems) but the space of possibilities is large and today's Bitcoin may not be very representative.

4/n. There can’t be a concise textbook for our current economic/political/legal system like one for QM or CS theory. But in a crypto-economic future, society would be founded on the math of computational game theory, CS theory, and machine learning.

5/n.

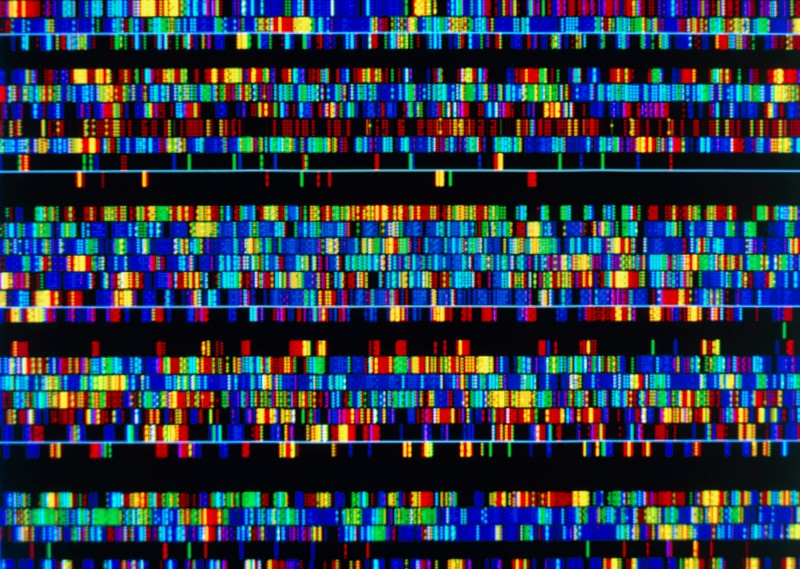

Guess (2): New Fundamentals in Bio, Neuro, and study of big, messy systems.

We’re currently using deep learning to produce predictive models of entangled, high-dimensional systems in biology, neuroscience/human behavior, climate/weather, stat-mech, economics, etc.

6/n. In the next 10 years, these models will get much better. They’ll make predictions relevant to aging and health (bio), disease (bio), consciousness/self-awareness (neuro), drives/desires and values (neuro), etc.

7/n. DL models are mostly black boxes: better prediction doesn’t mean an understandable, explanatory, elegant theory like Newtonian physics.

8/n. However, it’s plausible to me that better predictive DL models will sometimes yield elegant theories.

9/n. Either (a) we make *interpretable* DL models that yield the elegant theory directly, or (b) better predictions help humans create the new theories, or (c) we produce strong evidence that *no elegant theory* is possible in the domain.

10/n. (For example, we already have evidence from machine learning that there’s no elegant theory of ImageNet, i.e. of recognizing common objects like cats, chairs, and houses from 2D photos).

11/n.

Guess (3): New Fundamental Metaphysics of the Universe.

Some basic questions: Why is there something rather than nothing? Why the laws of QM and not some other laws? Are we living in a simulation and if so what kind? ...

12/n. How do values/ethics interact with the fundamental nature of the universe? Is there other intelligent life in the (multi)verse?

I think we might build on some existing ideas and make a profound advance on these questions. I have in mind these ideas:

13/n. ...The universal/Solomonoff distribution, MWI, timeless decision theories, logical induction, superintelligence safety, anthropics.

Postscript:

Will there be a new Newton/Einstein/Darwin figure, a single person who discovers a fundamental theory?

For Guess 1 (Crypto-economic Foundations), it’s possible that figures like Satoshi/Vitalik will get lots of credit (if the future is built on blockchain/ETH) but...

I expect there'll be many dispersed contributors.

For (2) New Fundamentals in Bio/Neuro, I think it will be a large group effort either like AlphaFold2 (one lab) or like the HGP (many labs).

The last guess, New Fundamental Metaphysics, is much more theoretical/philosophical...

and so one person could be a Newton/Einstein style figure. If you like working solo and want to be the next Einstein, try spending 10 years on a New Fundamental Metaphysics (deeply grounded in math/physics/computation)!

HT @peligrietzer for raising this and for discussion. @ArtirKel and @seanmcarroll have written on whether there'll be fundamental new discoveries in basic physics (read them for much more nuance). nintil.com/is-useful-phys…

arxiv.org/abs/2101.07884
