The militarization of US tech may be inevitable. But before we jump into privatized cyberwar, let's think through possible consequences.

- Damage to millions of innocent Russians

- Targeted retaliatory cyberattack

- Unpredictable escalation into global cyber WW3

A thread. 🧵

https://twitter.com/mwseibel/status/1496889263085920287

First, collateral damage.

This proposal is essentially privatized cyberwar on millions of innocent Russians. In my view, it's better to do targeted positive acts (offering asylum, helping dissidents) or targeted negative acts than untargeted broad attacks.

https://twitter.com/sandeep_sr/status/1496893860160544787

Second, retaliatory cyberattacks may ensue.

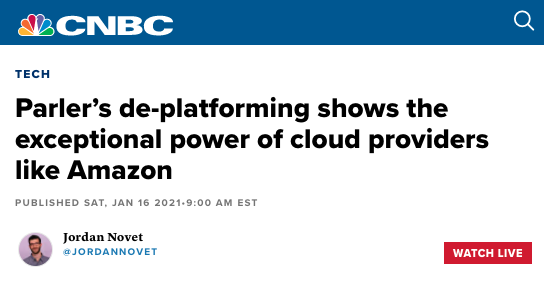

Using US tech power against millions of Russians in this way isn’t like a typical deplatforming, which is a consequence-free act by a huge company against a powerless individual.

This is Russia. They may hit back, in nasty ways.

Third, retaliation may also not stop at cyberwar.

We have not yet seen ideologically motivated attacks on tech CEOs, but Russia has signaled its willingness to track, poison, and murder its enemies. Even in the middle of London.

Again, if you do this, go in with eyes open.

Fourth, talk to your team.

I don't quite want to say that throwing your firm into the global cyberwar is like picking up a rifle and standing post.

But it does expose your team & customers to targeted lifelong retaliation by nasty people. They should take that risk knowingly.

Fifth, the US military can't protect you against cyberattack.

After SolarWinds & OPM, it's clear the US is a sitting duck for cyberattacks. The government can't protect itself, so it can't protect you. Thus any entity that decides to engage in privatized cyberwar does so at its own risk.

Sixth, the US military won't defray your costs.

If you decide to enter a privatized cyberwar, the US government is not going to pay for any damages you, your employees, and customers may suffer as a result.

And this kind of war can get extremely expensive.

Seventh, spiraling may ensue.

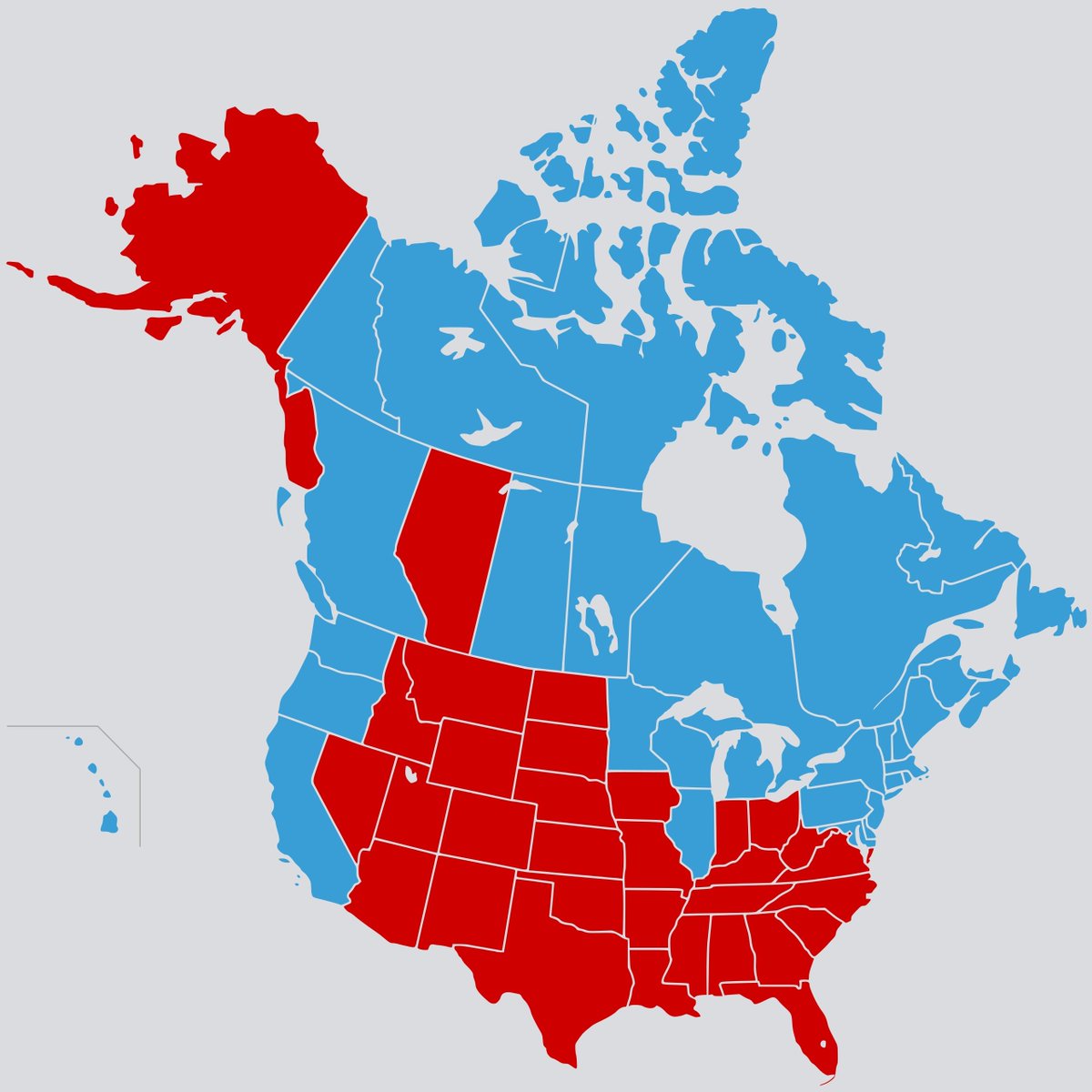

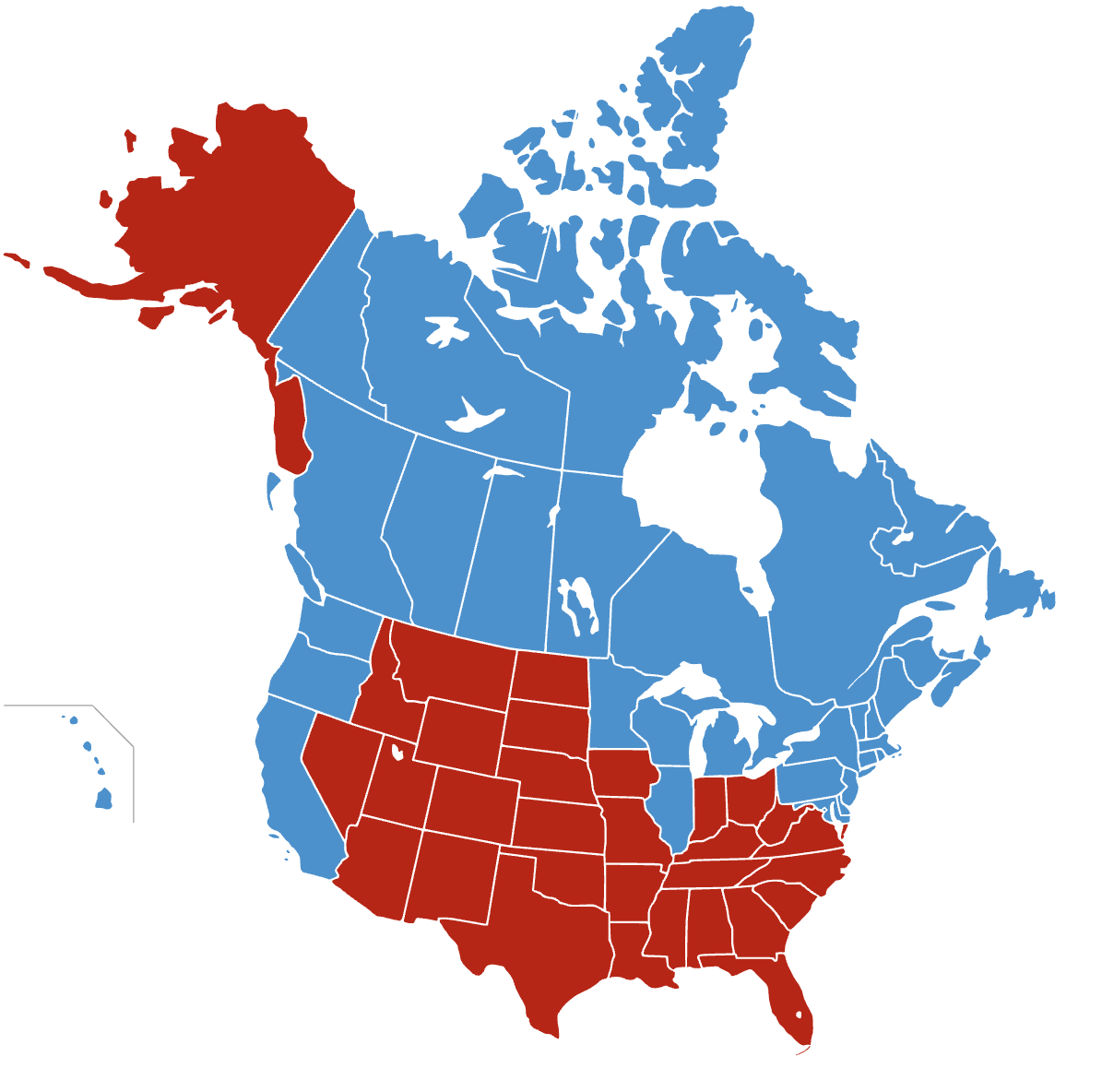

At the beginning of WW1, people didn't think about how things could escalate unpredictably. And many US tech cos are themselves vulnerable to cutoffs from China, a Russian ally.

This game has more than one move, and the enemy also gets a say.

The age of total cyberwar

I've been apprehensive about this for some time. The involvement of global firms can make a conflict spiral. The potential for this has been clear, but perhaps we can come back from the precipice.

Or at least be aware of it.

https://twitter.com/balajis/status/1429005987600506880

Why do I see great potential for miscalculation?

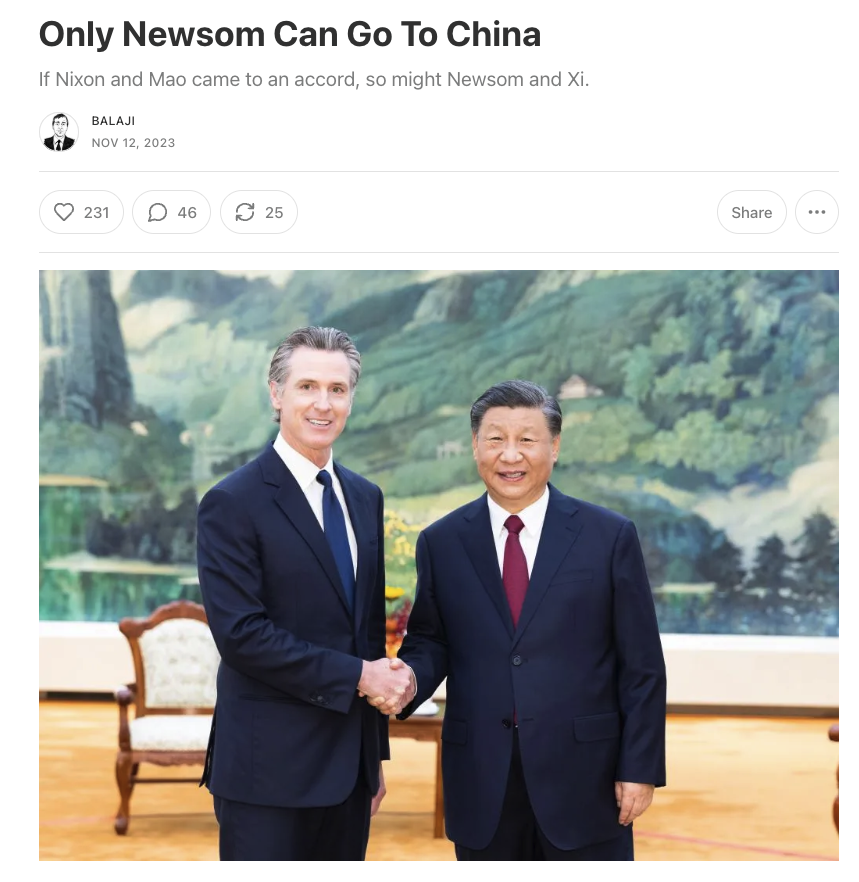

Tech companies have grown accustomed to taking consequence-free actions against individuals. Arbitrary corporate deplatforming of folks across the political spectrum is common.

A state like Russia is a totally different beast.

No one thought WW1 would spiral as it did.

A great way to internationalize the conflict is for transnational tech companies to get involved in a global, privatized cyberwar. This may not play out in a feel-good way.

At a minimum, we should game out the possible consequences.

Broad attacks may be counterproductive.

Mass cyberwar like what is proposed below may actually make Russians rally around the regime, as no distinction is being made between civilians & combatants.

See e.g.:

icrc.org/en/doc/assets/…

https://twitter.com/sandeep_sr/status/1496893860160544787
