🚨Paper alert!🚨Birds of a Feather Don't Fact-check Each Other #CHI2022

On Twitter's crowdsourced fact-check program @birdwatch, partisanship is king: users mostly flag counterpartisans' tweets as misleading & rate their notes as unhelpful

psyarxiv.com/57e3q

1/

Some background: in Jan 2021, Twitter announced a new crowdsourced fact-checking project, @birdwatch, and invited users to apply to become ~official~ keyboard warriors and fight misinfo on the platform

2/

https://twitter.com/twittersupport/status/1353766523664531459

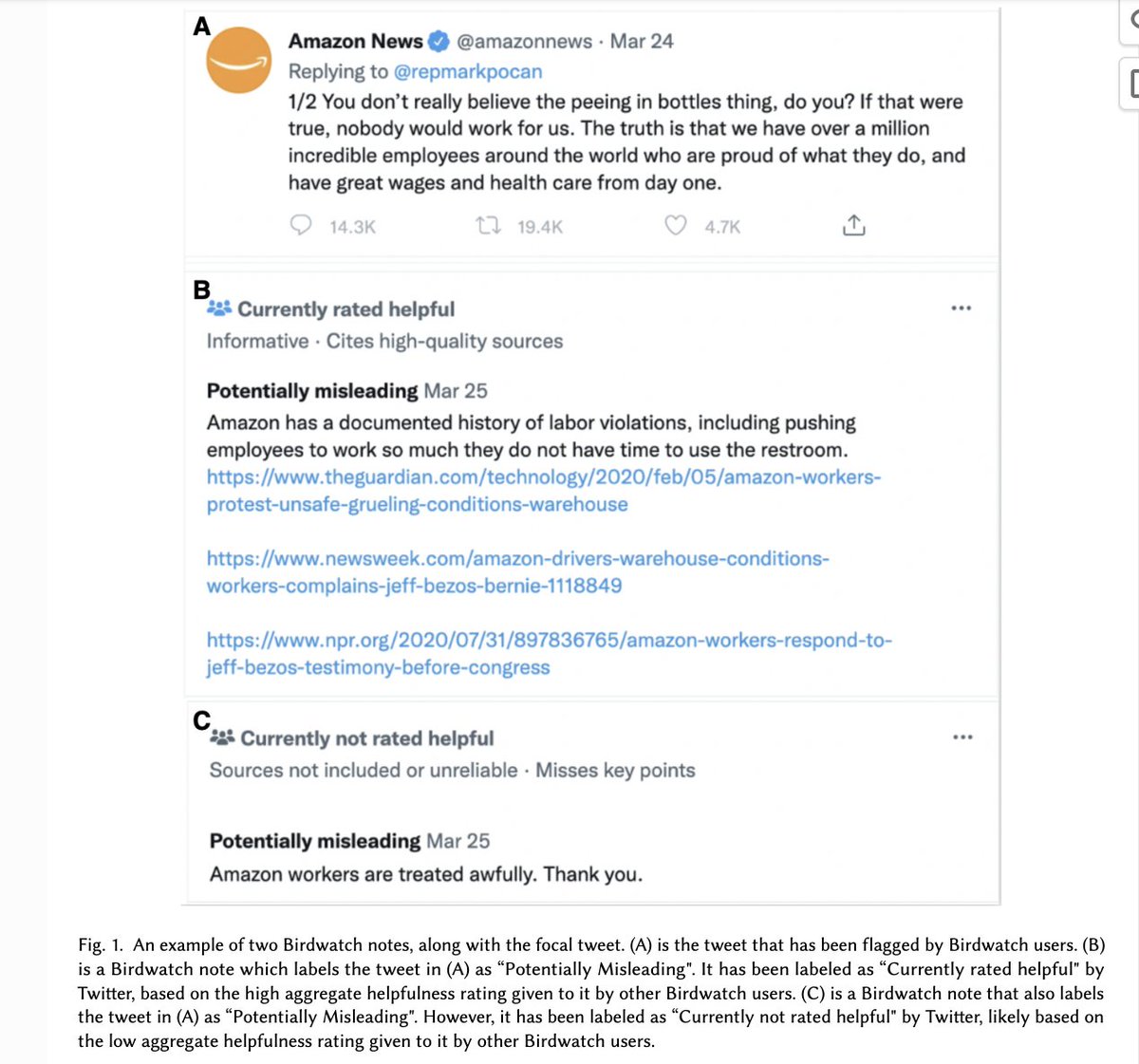

Accepted users could participate in 2 ways:

1. Notes - users flag tweets as misleading or not and write a summary explaining why

2. Ratings - users upvote or downvote other birdwatchers' notes as helpful or not

More ex. found here: twitter.com/i/birdwatch

3/

Responses to the announcement were, uh, mixed (see prototypical example below).

Can Birdwatch separate the truth from the trolling?

4/

https://twitter.com/Adsinjapan/status/1353772352174080000

We also had questions. Past work we've done shows that crowds can do a good job flagging misinfo when platforms control what content ppl rate:

But what happens when users choose which content to evaluate? Does partisan cheerleading take over?

5/

https://twitter.com/DG_Rand/status/1314212731826794499?s=20&t=8V1jqhIpfEr3-2t2bHK8WQ

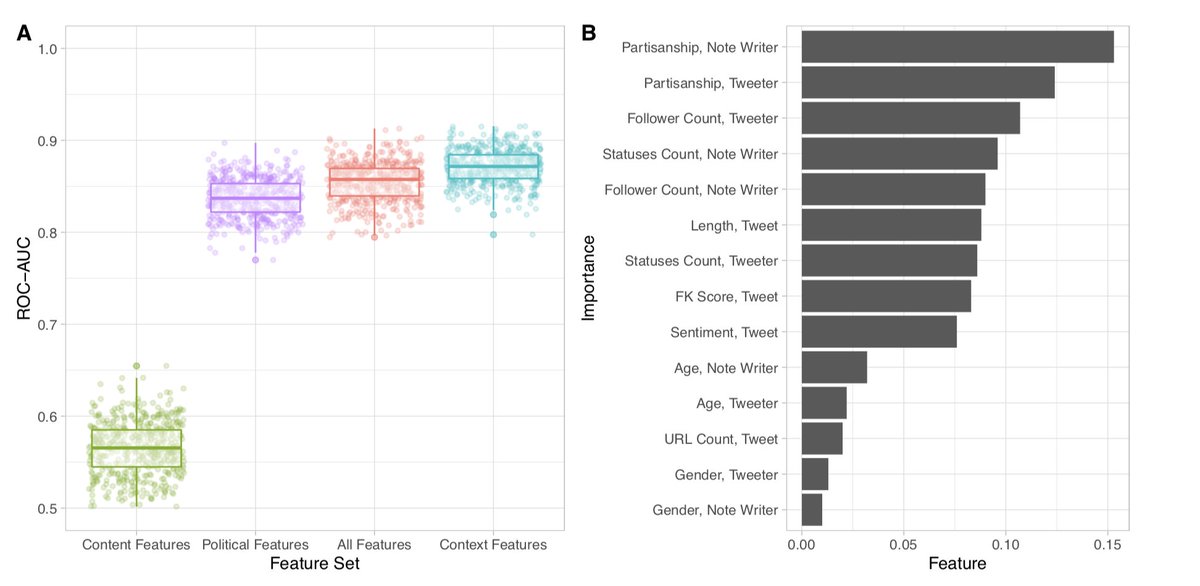

To answer, we got tweets, notes, and ratings from the first 6 mo of @birdwatch. We examined predictive power of

i) content features, related to the content of tweet or note

vs ii) context features, related to user-level attributes of the bwatcher or tweeter (eg partisanship)

6/

We ran a random forest model predicting tweet misleadingness and found models that used just content features did barely better than chance. But models w just political features of the ppl involved had an AUC of almost .85, w little add'l benefit of more features

7/

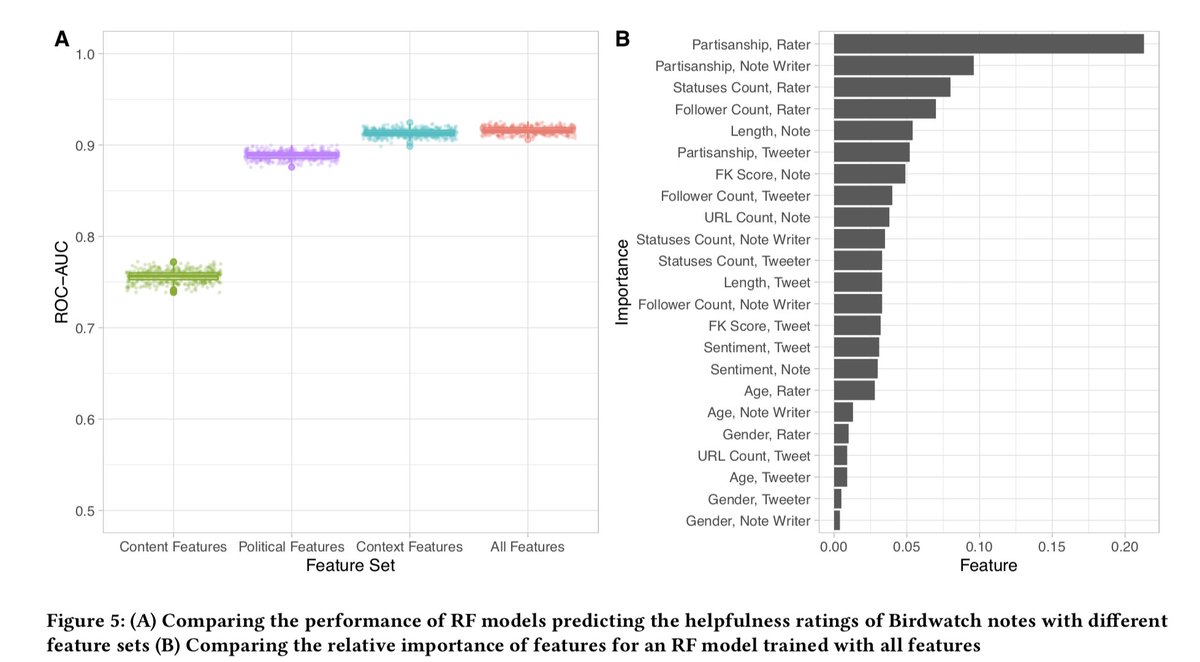
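
For anyone unsure how to read those AUC numbers, here is a minimal sketch of what the metric measures. It is not the paper's pipeline: the labels and scores are made up for illustration, and `auc` is a hypothetical helper written for this example. AUC is the chance that a randomly picked positive case (here, a tweet flagged misleading) gets a higher model score than a randomly picked negative one, so .5 means the model is guessing.

```python
from itertools import product


def auc(scores, labels):
    """Probability that a random positive outranks a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p, n in product(pos, neg)
    )
    return wins / (len(pos) * len(neg))


# Toy data, invented for illustration: 1 = flagged misleading, 0 = not misleading.
labels = [1, 1, 1, 0, 0, 0]
uninformative = [0.5, 0.5, 0.5, 0.5, 0.5, 0.5]  # cannot tell the classes apart
informative = [0.9, 0.8, 0.4, 0.6, 0.2, 0.1]    # ranks most positives above negatives

print(auc(uninformative, labels))  # 0.5, i.e. chance
print(auc(informative, labels))    # 8 of 9 pairs ranked correctly, about 0.89
```

On this scale, "barely better than chance" sits just above .5, while ~.85 means the model ranks a flagged tweet above an unflagged one in roughly 85% of such pairs.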

Same was true for a model predicting note helpfulness. Here, using the content features alone produced a decent model w AUC ~ .75. But just political features get to an AUC of ~.9!

8/

Clearly, we needed to take a closer look at this relationship b/t partisanship & participation in bwatch

9/

Results were stark!

Ds were 2X, and Rs were 3X, more likely to flag a tweet by a counterpartisan. Even though the majority of tweets (90%!) were classified misleading, bwatchers labeled many more co-partisan tweets as NOT misleading than counterpartisan ones

10/

Ratings data were even more striking

Ds rated 83% of notes by fellow Ds helpful, vs. 43% by Rs

Rs rated 87% of notes by Rs helpful, vs. 26% by Ds

11/
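
To put the two gaps side by side, here is a small sketch that uses only the four percentages quoted above. The dictionary layout and the `partisan_gap` helper are assumptions made for this illustration, not anything from the paper or the Birdwatch data files.

```python
# Share of notes rated helpful, keyed by (rater party, note-author party).
# The four values are the percentages quoted in the thread.
helpful_pct = {
    ("D", "D"): 83,
    ("D", "R"): 43,
    ("R", "R"): 87,
    ("R", "D"): 26,
}


def partisan_gap(rater):
    """Percentage-point gap between co-partisan and counterpartisan helpful ratings."""
    other = "R" if rater == "D" else "D"
    return helpful_pct[(rater, rater)] - helpful_pct[(rater, other)]


for rater in ("D", "R"):
    print(rater, partisan_gap(rater))  # prints "D 40" then "R 61"
```

So the co-partisan advantage works out to 40 percentage points for D raters and 61 for R raters.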

So is BWatch doomed? Maybe not!

We had 2 fact-checkers vet a subset of the tweets, and found that among the 57 tweets flagged as misleading by a majority of bwatchers, 86% were also rated as misleading by fact-checkers.

12/
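
For a sense of scale, the sketch below backs out how many of the 57 tweets that percentage implies. The count is inferred from the two figures quoted above and is not a number reported in the thread; the variable names are made up for this example.

```python
flagged_by_crowd = 57   # tweets a majority of bwatchers flagged (from the thread)
reported_pct = 86       # percent also rated misleading by fact-checkers (from the thread)

# Every integer count whose rounded percentage matches the reported figure.
consistent = [
    k
    for k in range(flagged_by_crowd + 1)
    if round(100 * k / flagged_by_crowd) == reported_pct
]
print(consistent)  # [49]
```

Only one count fits, so roughly 49 of the 57 crowd-flagged tweets were also judged misleading by the fact-checkers, assuming the 86% is a rounded share of those 57.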

Data is very prelim., but suggestive that desire to call out *actually bad* content by the opposing party could be an important motivator driving people to participate in Birdwatch in the first place. It's not all trolling! 🥳

13/

Also impt- this research is just looking at v0 of bwatch. Twitter has been constantly iterating to better the program, and we are grateful to the team for sharing data and being open to collaboration from academics.

Check out their data here: twitter.github.io/birdwatch/

14/

We're thankful for receiving a Best Paper Honorable Mention from #CHI2022, and @_JenAllen & @Cameron_Martel_ will be at the conference - so plz reach out if you'll also be in attendance & would like to chat!

programs.sigchi.org/chi/2022/progr…

15/
