🚨JUST OUT🚨

Quarterly threat report from @Meta’s investigative teams.

Much to dig into:

State & non-state actors targeting Ukraine;

Cyber espionage from Iran and Azerbaijan;

Influence ops in Brazil and Costa Rica;

Spammy activity in the Philippines...

about.fb.com/news/2022/04/m…

I’ll focus this thread on Ukraine. For more on the rest, see the great @ngleicher and @DavidAgranovich.

We’ve seen state & non-state ops targeting Ukraine across the internet since the invasion, including attempts from:

🇧🇾 Belarus KGB

👹 A Russian “NGO” w/ some links to past IRA folks

👻 Ghostwriter

We caught these early, before they could build audience or be effective.

Typically, these efforts only pivoted to posting about Ukraine close to when the invasion happened.

For example, the Belarus op was posting about Poland and migrants right up to invasion day.

It doesn’t look like these influence ops were prepared for the war.

The timing and lack of prep are important.

We know from the 2018 Mueller indictment that the IRA gave itself 2.5 years to target the US 2016 election (Apr 2014 -> Nov 2016)

An op that only lasts a few days is going to struggle for impact cross-internet

justice.gov/file/1035477/d…

We also took down an attempt by people linked to past Internet Research Agency activity.

They posed as an NGO and were active almost entirely off platform: they did try to create FB accounts in January, but were detected.

Throughout January and February, their website focused on accusing the West of human rights abuse. After the invasion, they focused more on Ukraine.

Ghostwriter is an op that specialises in hacking people’s emails, then trying to take over their social media from there.

We saw a spike in Ghostwriter targeting of the Ukrainian military after the invasion.

In a few cases, Ghostwriter posted videos calling on the Army to surrender as if these posts were coming from the legitimate account owners.

We blocked these videos from being shared.

Non-state actors focused on Ukraine too. We took down an attempt to come back by an actor running two websites posing as news outlets. Those sites were linked to a network we disrupted in December 2020.

about.fb.com/wp-content/upl…

As is typical for critical world events, spammers focused on the invasion too. They used war footage to exploit people’s attention to this war and make money.

Automated and manual systems have caught thousands of those.

Finally, we also took down a group in Russia that tried to mass-report people in Ukraine and Russia, to silence them. Likely in an attempt to conceal their activity, they coordinated in a group that was ostensibly focused on cooking.

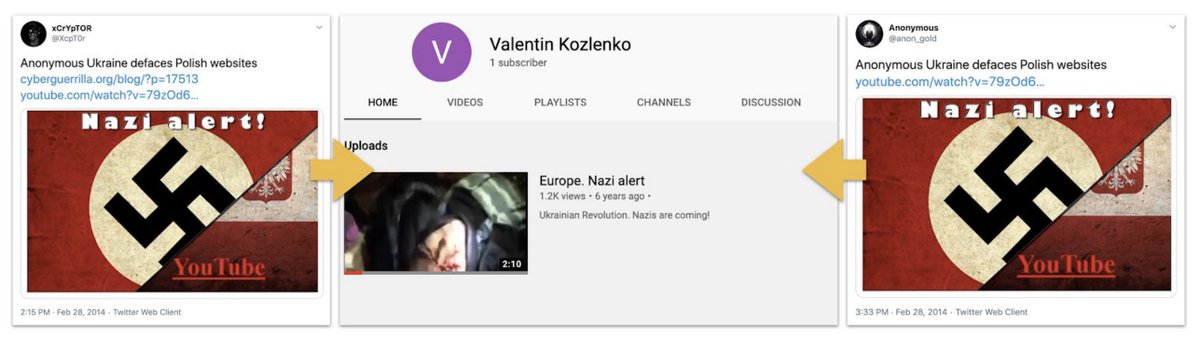

It’s worth making a bigger point here: we as a society have come a long way since 2014. The info ops research community is far more developed than it was back then, when there were just a handful of us doing this research.

On platform, we caught the recidivist ops early.

In the #OSINT community, there's more forensic expertise than ever.

Look at #BBCRealityCheck, @malachybrowne / @NYTimes visual investigations, @ElyseSamuels / @washingtonpost visual forensics, old friends like @bellingcat, @dfrlab, @graphika_nyc, @stanfordio, @EUdisinfolab...

That matters hugely. Threat actors will always try: it’s their job. But having a skilled community out there which is able to detect deception and influence ops fast makes for a much less conducive environment.
