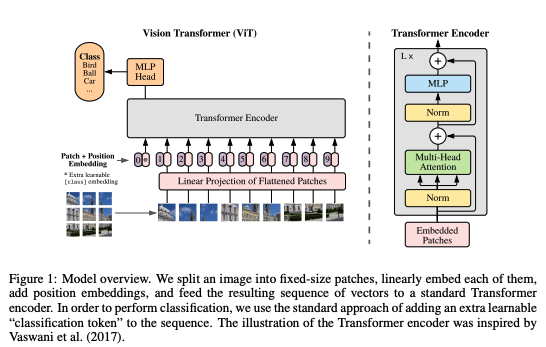

Are you working on federated learning over heterogeneous data? Use Vision Transformers as a backbone!

In our upcoming #CVPR2022 paper, we perform extensive experiments demonstrating the effectiveness of ViTs for FL:

paper: arxiv.org/abs/2106.06047

code: github.com/Liangqiong/ViT…

In our upcoming #CVPR2022 paper, we perform extensive experiments demonstrating the effectiveness of ViTs for FL:

paper: arxiv.org/abs/2106.06047

code: github.com/Liangqiong/ViT…

@vickyqu0 @yuyinzhou_cs @mldcmu @StanfordDBDS @StanfordAILab We find that ViTs are more robust to distribution shift, reduce catastrophic forgetting over devices, accelerate convergence, and reach better models.

Using ViTs, we are able to scale FL up to the edge-case of heterogeneity - 6000 & 45000 clients with only 1 sample per client!

Using ViTs, we are able to scale FL up to the edge-case of heterogeneity - 6000 & 45000 clients with only 1 sample per client!

@vickyqu0 @yuyinzhou_cs @mldcmu @StanfordDBDS @StanfordAILab By virtue of their robustness and generalization properties, ViTs also converge faster with fewer communicated parameters, which makes them appealing for efficient FL.

ViTs can be used with optimization FL methods (FedProx, FedAvg-Share) to further improve speed & performance.

ViTs can be used with optimization FL methods (FedProx, FedAvg-Share) to further improve speed & performance.

@vickyqu0 @yuyinzhou_cs @mldcmu @StanfordDBDS @StanfordAILab Try out our code on your FL tasks! With full comparisons between ResNets, ConvNets, & ViTs across many FL tasks: github.com/Liangqiong/ViT…

this was a fun collaboration led by @vickyqu0 and @yuyinzhou_cs with Yingda Feifei @eadeli @drfeifei @rubinqilab @StanfordDBDS @StanfordAILab

this was a fun collaboration led by @vickyqu0 and @yuyinzhou_cs with Yingda Feifei @eadeli @drfeifei @rubinqilab @StanfordDBDS @StanfordAILab

• • •

Missing some Tweet in this thread? You can try to

force a refresh