1/ FASCINATING new theory on DREAMING from observing a deep neural net

but 1st––some of the most cutting-edge + interesting research on how 🧠🧠 work is inspired by observing modern 💻💻 + algorithms

every era has a theory of the brain that runs parallel to that era's tech

2/ Go back to Descartes, who thought the 🧠 worked like hydraulic pumps ⛽️––the new tech available in his era

4/ More recent analogies have been to the brain as a computer––which notably inspired lots of AI research, specifically the early work on neural nets, which lost and regained favor over the decades

5/ Then came the analogy of the brain as an internet––islands of functional groups, all interconnected

6/ All models are wrong––some of them are useful.

Insights from trying to understand our internal human SENSES, PERCEPTION, SPEECH, VISION, HEARING, MEMORY have all led to embodied technologies

which in turn lead to new theories...

https://twitter.com/wolfejosh/status/1444717406090375168?s=20&t=GOVx8QKZRjxoFU6thq-f5w

7/ We already know we SEE what we BELIEVE

Illusions are excellent at humbling us.

Even if we know they are illusions.

https://twitter.com/wolfejosh/status/1297539830017069056?s=20&t=CD76U9JK8XImcVa4g4Dw9A

8/ (almost there...stick with me;)

Now our study + design of neural nets is leading to a 'consilience of inductions'––

many different researchers converging on common explanations that point to the same conclusion

https://twitter.com/wolfejosh/status/1465159241661132806?s=20&t=WM3TudoI5RncKMI5T1uasw

9/ Like the "memory-prediction" framework and the computational layer between the two

that ingests reality, makes models + predictions of patterns it later expects to see, then updates models based on 'reality' (just as robots/machine vision do)

https://twitter.com/wolfejosh/status/1450451437868175370?s=20&t=Nmm2ggFvG-liupE6LS1Rrg
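
The predict, compare, update loop in tweet 9 can be sketched in a few lines. This is a minimal illustration assuming the simplest possible "model" (a single running estimate) and a plain prediction-error (delta-rule) update; it is not the memory-prediction framework itself, and `predict_and_update` and `learning_rate` are hypothetical names.

```python
def predict_and_update(observations, learning_rate=0.3, initial_guess=0.0):
    """Toy predict-then-update loop (hypothetical helper using a simple delta rule)."""
    model = initial_guess
    errors = []
    for observed in observations:
        prediction = model              # expectation formed before seeing the input
        error = observed - prediction   # prediction error: how "surprised" the model is
        model += learning_rate * error  # nudge the model toward what reality delivered
        errors.append(abs(error))
    return model, errors


# A world that keeps presenting the same value: the surprise shrinks every step.
final_model, errors = predict_and_update([10.0] * 20)
print(round(final_model, 3), [round(e, 2) for e in errors[:4]])
```

Feeding it a sudden change (say, a run of 10s followed by a run of 20s) makes the error spike and then decay again, which is the "update models based on 'reality'" step.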

10/ now –– Erik Hoel has a COOL hypothesis

what if the REASON we DREAM––was similar to

the REASON programmers add noise to deep neural nets

to prevent overly narrow learning from experience

+ to generalize, anticipating weird new stuff––which would be evolutionarily adaptive...

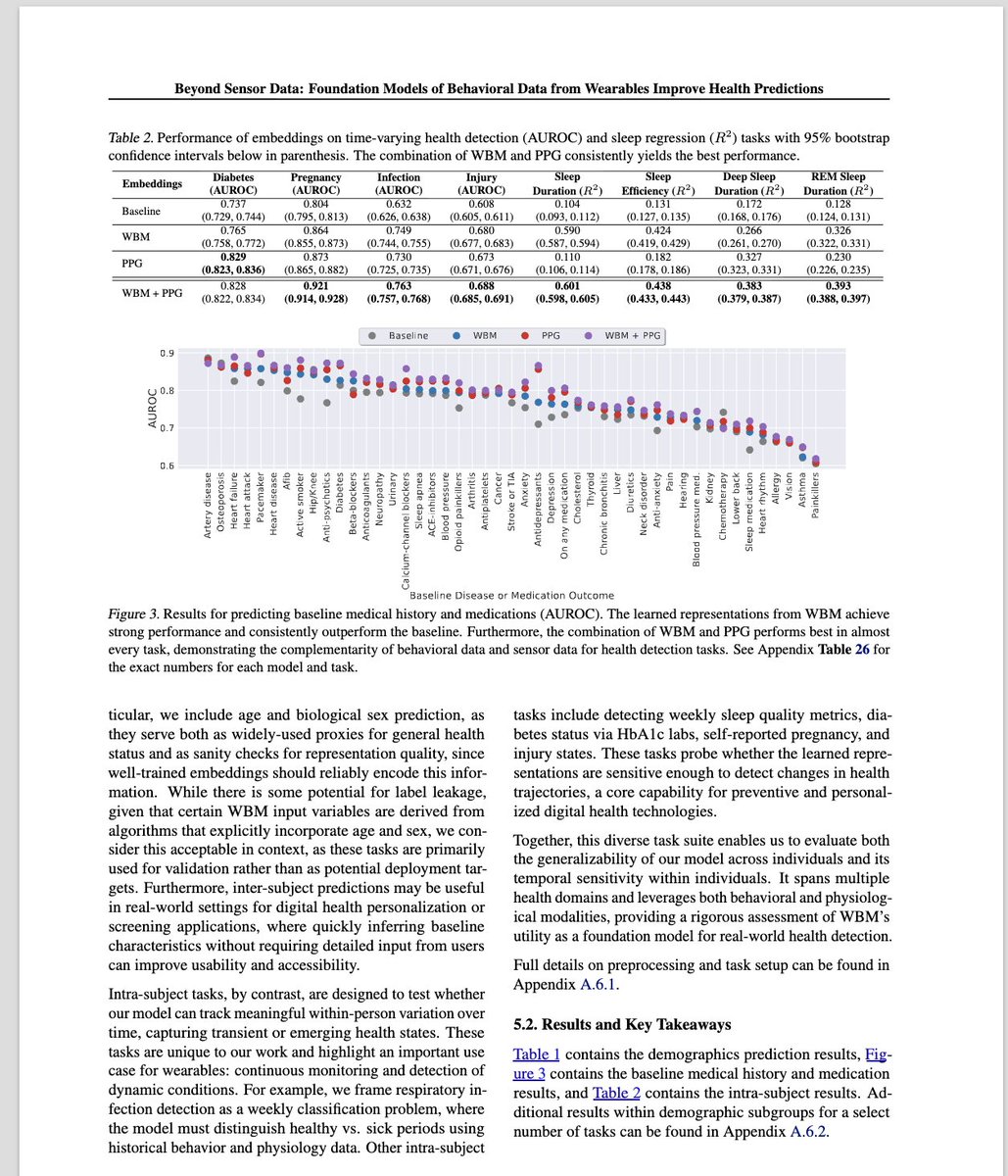

11/ Hoel calls it the Overfitting Brain Hypothesis

the problem of OVERFITTING in machine learning is best visualized by this
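
A stdlib-only sketch of overfitting, with made-up data: the true rule is y = 2x, but every training sample carries ±1 of noise. One model memorizes the training set exactly (a 1-nearest-neighbor lookup, standing in for an over-trained net); the other fits a straight line. All helper names here are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_memorizer(xs, ys):
    """'Overfit' model: recall the y of the closest training x (1-nearest neighbor)."""
    table = list(zip(xs, ys))
    return lambda x: min(table, key=lambda pair: abs(pair[0] - x))[1]


def mse(model, xs, ys):
    """Mean squared error of a model on (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical training data: true rule y = 2x, plus alternating +1/-1 noise.
train_x = list(range(8))
noise = [1, -1] * 4
train_y = [2 * x + e for x, e in zip(train_x, noise)]
# Fresh, noise-free points the models never saw during training.
test_x = [k + 0.4 for k in range(7)]
test_y = [2 * x for x in test_x]

for name, fit in [("memorizer", fit_memorizer), ("line", fit_line)]:
    model = fit(train_x, train_y)
    print(name, "train:", round(mse(model, train_x, train_y), 3),
          "test:", round(mse(model, test_x, test_y), 3))
```

The memorizer gets a perfect 0 training error but does worse on the unseen points, because it learned the noise along with the rule; the line accepts some training error and generalizes better.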

12/ One way researchers tackle the "overfitting" problem in Deep Neural Nets is by introducing "noise injections" in the form of corrupted inputs

Why? So the algorithms don't treat everything so narrowly SPECIFIC and precise––but instead can better GENERALIZE
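
A minimal sketch of input noise injection, assuming the simplest setting: a one-weight linear model y = w·x fit by least squares on hypothetical data. Each training example is replayed many times with Gaussian noise added to its input; `corrupt`, `copies`, and `sigma` are illustrative names, not from the thread.

```python
import random


def fit_slope(pairs):
    """Least-squares slope for y = w * x (no intercept)."""
    return sum(x * y for x, y in pairs) / sum(x * x for x, _ in pairs)


def corrupt(pairs, copies, sigma, rng):
    """Noise injection: replay each example `copies` times with Gaussian noise on the input."""
    return [(x + rng.gauss(0.0, sigma), y) for x, y in pairs for _ in range(copies)]


rng = random.Random(0)                       # fixed seed so the run is reproducible
clean = [(x, 2.0 * x) for x in range(1, 6)]  # hypothetical data following y = 2x
sigma = 1.0

w_clean = fit_slope(clean)
w_noisy = fit_slope(corrupt(clean, copies=2000, sigma=sigma, rng=rng))

# For this linear case, input noise acts roughly like an L2 (ridge) penalty:
# the noisy fit lands near sum(x*y) / (sum(x*x) + n * sigma**2), shrunk toward 0.
n = len(clean)
w_ridge_like = sum(x * y for x, y in clean) / (sum(x * x for x, _ in clean) + n * sigma**2)
print(round(w_clean, 3), round(w_noisy, 3), round(w_ridge_like, 3))
```

That shrinkage is the regularizing effect: the model can no longer count on inputs arriving exactly as they did in training, so it leans on them less precisely.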

13/ Now IF our brain processes + stores information from the stimuli it receives all day long––and learns from experience in a narrow way––THEN it too can "overfit" a model

& be less fit to handle wider variations from it

(like the real world)...

So the PROVOCATIVE theory...

14/...Is that the evolutionary PURPOSE of DREAMING is to deliberately corrupt data (memories or predictions) by injecting noise into the system

And to keep learning from becoming rote, routine memorization

Basically––

natural hallucination improves generalization

🤯

15/ Link to full paper PDF here––a quick and VERY provocative read from @erikphoel cell.com/action/showPdf…
