#GoogleAlloyDB. Let’s talk about why it’s a BIG deal for developers and DBAs, and why it might be the best way to do PostgreSQL in the cloud.

And how about a quick look at what the provisioning experience looks like? Quick 🧵 with a bunch of links at the end …

#GoogleIO

First, it's just PostgreSQL, but operationalized in the way that @googlecloud does so well. It's 100% compatible with PostgreSQL 14.
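What "just PostgreSQL" should mean in practice: your existing drivers and connection strings keep working. Here's a minimal sketch, assuming a standard libpq-style connection URI. The user, password, IP address, and database name are hypothetical placeholders, not values from a real cluster.

```python
from urllib.parse import quote, urlsplit


def postgres_uri(user, password, host, database, port=5432):
    """Build a standard PostgreSQL (libpq-style) connection URI.

    Credentials are percent-encoded so characters like '@' or '/'
    in a password do not break the URI.
    """
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(database, safe='')}"
    )


# Hypothetical values for illustration only.
uri = postgres_uri("postgres", "p@ss/word", "10.0.0.5", "appdb")
print(uri)
# → postgresql://postgres:p%40ss%2Fword@10.0.0.5:5432/appdb
```

Any PostgreSQL client that accepts a connection URI (psql, for example) could take a string like this, which is the point of wire-level compatibility.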

Performance is silly great. More than 4x faster than standard PostgreSQL for transactional workloads, and up to 2x faster than AWS Aurora. And you can use it for analytical queries, where it's up to 100x faster than standard PostgreSQL.

Other things I like? 99.99% SLA, automatic failover and recovery, automatic backups, and integration with Vertex AI to pull predictions into SQL queries. Oh, and pricing that's easy. Pay only for the storage you use, and you don't get saddled with IO charges.

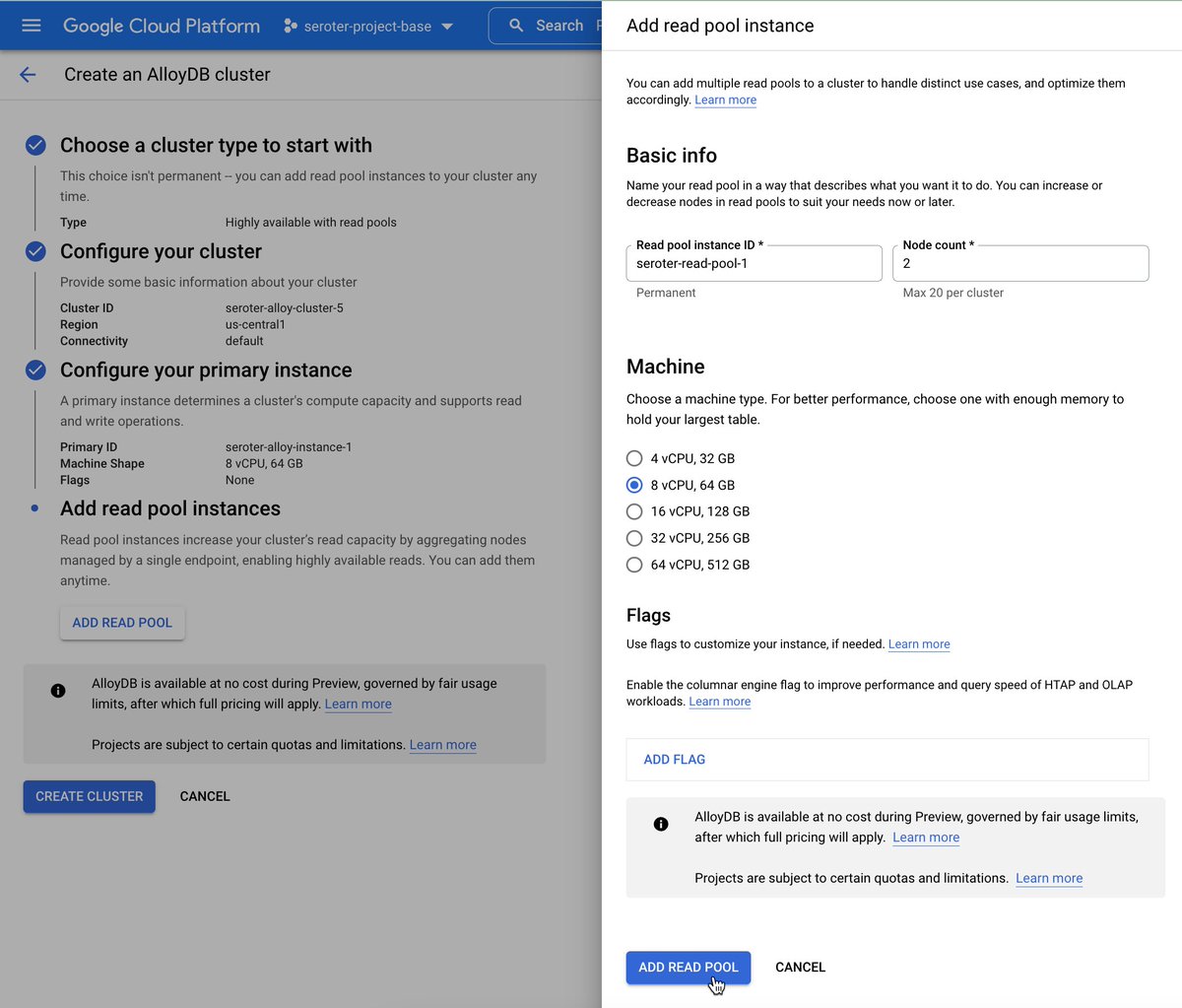

Let's take a look at provisioning a cluster. First, you're asked whether you want an HA cluster, or an HA cluster with read pools.

Next I get the only infrastructure question I need to answer. I chose a machine type, which I can change later.

It took a few minutes to provision everything. I was taken to a view that showed some metrics and such.

Once it was done, I could resize instances, view metrics, and more. And for an instance I created yesterday, I can see a couple of automatic backups we took.

AlloyDB is a feat of engineering, and a terrific database option in @googlecloud.

Launch blog: goo.gle/3PgHv8c

Tech deep dive blog: cloud.google.com/blog/products/…

Product docs: cloud.google.com/alloydb/docs/o…

Try it for free right now!
