Now: keynote at #BYSTANDER22 by @DG_Rand on the problem of misinformation and how polarization might solve it.

The key question is: How do we fight misinformation at scale? 1/19

Currently, platforms use technical solutions such as machine learning, but these have limits, which often means that human fact-checkers are brought in. This *does* work: warning labels help limit false news. 2/19

The problem with fact-checking is that it doesn't scale. How can we deal with misinformation at scale?

The solution is to turn towards the wisdom of the crowds (i.e., the finding that aggregations of average people's opinions are often very accurate). 3/19
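As a rough illustration of why aggregating many lay judgments can beat a single one, here is a minimal Condorcet-style sketch. It assumes, hypothetically, that raters judge a headline independently and that each is right with the same probability. Real raters are neither independent nor equally accurate, and this is not the method used in the studies described in this thread. The function name `majority_accuracy` is made up for illustration.

```python
from math import comb


def majority_accuracy(n_raters: int, p_correct: float) -> float:
    """Probability that a strict majority of n_raters is right about a headline.

    Hypothetical model: each rater is independently correct with probability
    p_correct. With an even number of raters, a tie counts as not correct.
    """
    majority = n_raters // 2 + 1
    return sum(
        comb(n_raters, k) * p_correct**k * (1 - p_correct) ** (n_raters - k)
        for k in range(majority, n_raters + 1)
    )


# Individually mediocre raters (60% correct each) become more reliable as a crowd.
for n in (1, 5, 15, 25):
    print(f"{n:>2} raters: {majority_accuracy(n, 0.6):.3f}")
```

Under these assumptions, accuracy climbs with crowd size whenever individuals do better than chance (p_correct > 0.5). Correlated partisan errors, the concern raised next in the thread, are exactly what the independence assumption leaves out.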

Wisdom of the crowds often works.

But for polarized topics such as politics, one may be skeptical of the wisdom of crowds: people's partisan biases may undermine the effect. 4/19

Does it? A 2019 study examined Dems' & Reps' trust in news sources. There are partisan asymmetries (e.g., Fox News), but both groups distrust hyper-partisan & fake news sites. Average ratings are accurate measures of trustworthiness: they correlate at .90 with fact-checkers' ratings. 5/19
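The aggregation step can be sketched roughly as follows: average each party's trust ratings per source, give Democrats and Republicans equal weight, and correlate the result with fact-checkers' ratings. Everything here is hypothetical: the source names, the 1-to-5 rating scale, the numbers, and the choice to weight the two parties equally are illustrative assumptions, not the study's data or exact procedure.

```python
from statistics import mean

# Hypothetical trust ratings (1 = not at all, 5 = entirely) from a few raters per party.
RATINGS = {
    "mainstream_outlet": {"dem": [5, 4, 5], "rep": [3, 4, 3]},
    "partisan_tv": {"dem": [2, 1, 2], "rep": [5, 4, 5]},
    "hyperpartisan_site": {"dem": [1, 2, 1], "rep": [2, 1, 2]},
    "fake_news_site": {"dem": [1, 1, 1], "rep": [1, 2, 1]},
}
# Hypothetical fact-checker trust ratings on the same scale.
FACT_CHECKERS = {
    "mainstream_outlet": 5,
    "partisan_tv": 3,
    "hyperpartisan_site": 1,
    "fake_news_site": 1,
}


def crowd_score(by_party: dict[str, list[int]]) -> float:
    """Average of the per-party means, so each party counts equally (an assumption)."""
    return mean(mean(scores) for scores in by_party.values())


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


sources = list(RATINGS)
crowd = [crowd_score(RATINGS[s]) for s in sources]
experts = [FACT_CHECKERS[s] for s in sources]
for s, c in zip(sources, crowd):
    print(f"{s:<20} crowd={c:.2f} fact-checkers={FACT_CHECKERS[s]}")
print(f"correlation: {pearson(crowd, experts):.2f}")
```

In this toy data the hypothetical partisan TV outlet lands in the middle because the parties disagree about it, while the hyper-partisan and fake sites score low with both groups, so the crowd average still tracks the fact-checkers closely.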

But if this is used in the wild, will people adjust their responses to promote their political agenda? A follow-up study told participants that their responses would be shared with Facebook and used to tweak its algorithm. Overall, the results didn't change. 6/19

Why? Because most people don't care about politics.

Can this also be applied at the level of articles, not just sources? Here, it is key to choose ecologically valid articles (i.e., articles that reflect what is actually shared). 7/19

In a 2021 study, 207 headlines selected for fact-checking by Facebook were checked by 3 professional fact-checkers. Do the crowd's assessments match theirs? The benchmark is the degree of agreement among the fact-checkers themselves, and a crowd of just 15 laypeople can indeed match it. 8/19

Does this work cross-culturally? A 2022 paper examines this across 16 countries. In almost all of them, 20 people per headline provide 90% accuracy in detecting false news. 9/19

Yet, for this to be usable at scale, people need to be able to choose for themselves what to rate: there is too much information out there for a central selection process. But this again raises the risk of partisan cheerleading. 10/19

What could motivate people to flag content? They could care about truth. But they could also care about partisanship. This may drive people to flag accurate but opposing content. 11/19

However, the values people put on truth and on partisanship each lie on a continuum. In this two-dimensional space, most people care about both truth and partisanship and, hence, will be motivated specifically to flag false information that opposes their side. 12/19

Here, partisanship actually provides the motivation to invest in fact-checking. And most people are not motivated enough by politics to wrongly flag opposing information that is actually true. 13/19
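The two-dimensional argument can be made concrete with a toy model: a user flags a post when their combined motivation (their truth weight if the post is false, plus their partisan weight if it comes from the other side) exceeds the cost of flagging. The additive form, the weights, and the cost of 1.0 are hypothetical assumptions chosen for illustration, not parameters from the talk.

```python
from dataclasses import dataclass
from itertools import product


@dataclass
class User:
    truth_weight: float  # hypothetical: how much the user values correcting falsehoods
    partisan_weight: float  # hypothetical: how much the user values countering the other side


def will_flag(user: User, is_false: bool, is_opposing: bool, cost: float = 1.0) -> bool:
    """Toy rule: flag only if the summed motivations exceed the (assumed) cost of flagging."""
    motivation = user.truth_weight * float(is_false) + user.partisan_weight * float(is_opposing)
    return motivation > cost


def flagged_types(user: User) -> list[tuple[bool, bool]]:
    """All (is_false, is_opposing) combinations this user would flag."""
    return [combo for combo in product([True, False], repeat=2) if will_flag(user, *combo)]


typical = User(truth_weight=0.6, partisan_weight=0.6)  # cares somewhat about both
zealot = User(truth_weight=0.1, partisan_weight=1.2)  # partisanship alone clears the cost
print(flagged_types(typical))  # [(True, True)]
print(flagged_types(zealot))  # [(True, True), (False, True)]
```

In this sketch the typical user flags only false content from the other side, while someone whose partisan weight alone exceeds the cost also flags true opposing content; the claim in the thread is that few people fall into that second group.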

Twitter has implemented a crowdsourced fact-checking program with 3,000 participants, called Birdwatch. What drives the behavior of the Birdwatchers? 14/19

73% of Birdwatchers never flagged anything, showing that flagging takes high motivation.

Analyses of the flagged tweets and the Birdwatchers show that 60% of the flagging that does happen is indeed of the false-opposing type (false content from the other side). 15/19

Only 10% is of the true-opposing type. 16/19

Despite this partisan bias, accuracy was high because of the kind of bias it is (it mostly targets false content from the other side): 80% of flagged content was also flagged by a fact-checker.

Partisan motivations drive people to contribute with helpful flagging. 17/19

Still, there are some potential problems: 1) We don't know what they are missing. 2) Problems may grow if the crowd is not evenly balanced ideologically. 3) Non-polarizing false information may rarely be targeted. 18/19

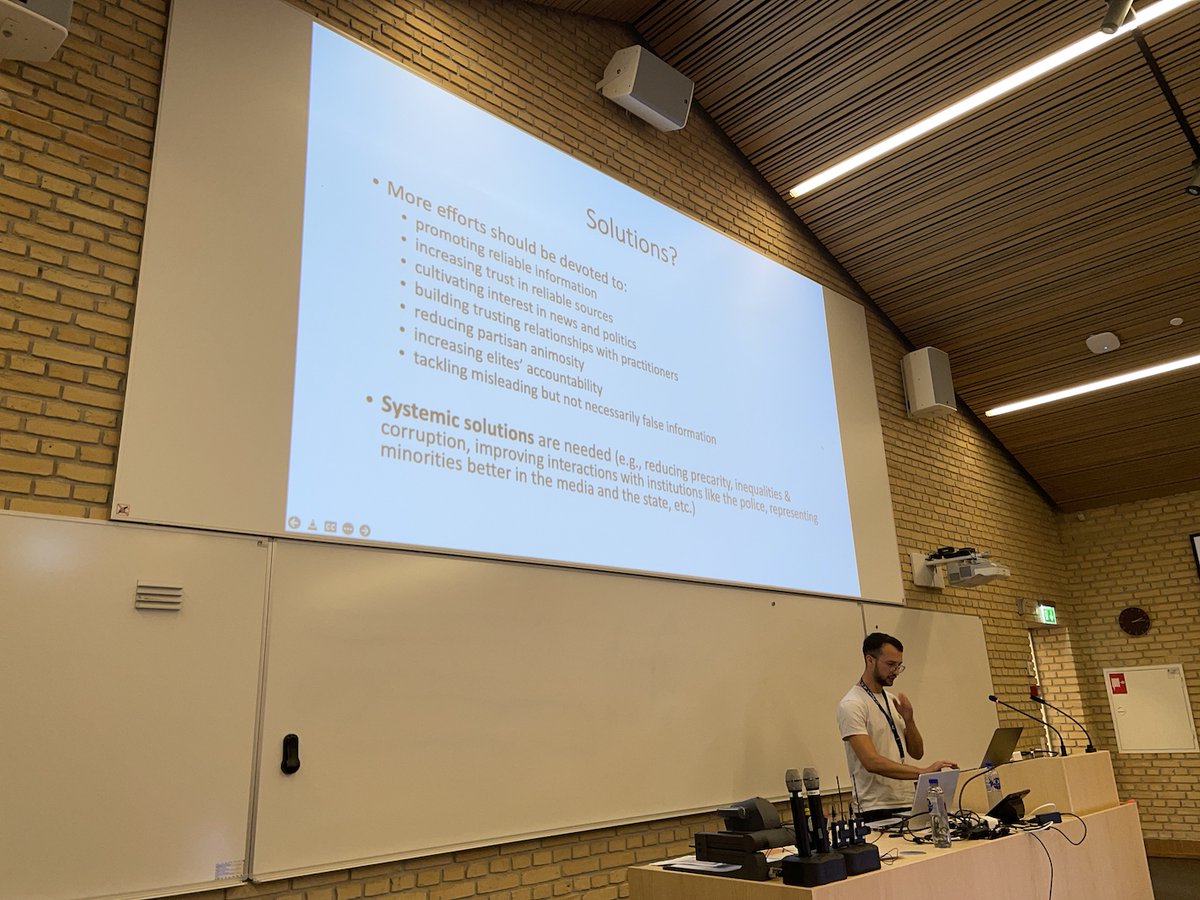

So, in conclusion: Wisdom of crowds can help platforms to identify misinfo in scalable ways. And partisan motivation solves the public goods problem of why people should care about helping society in this way. 19/19