I've been asked about this a lot, so let me provide a quick FAQ.

Q: What's the nature of the issue?

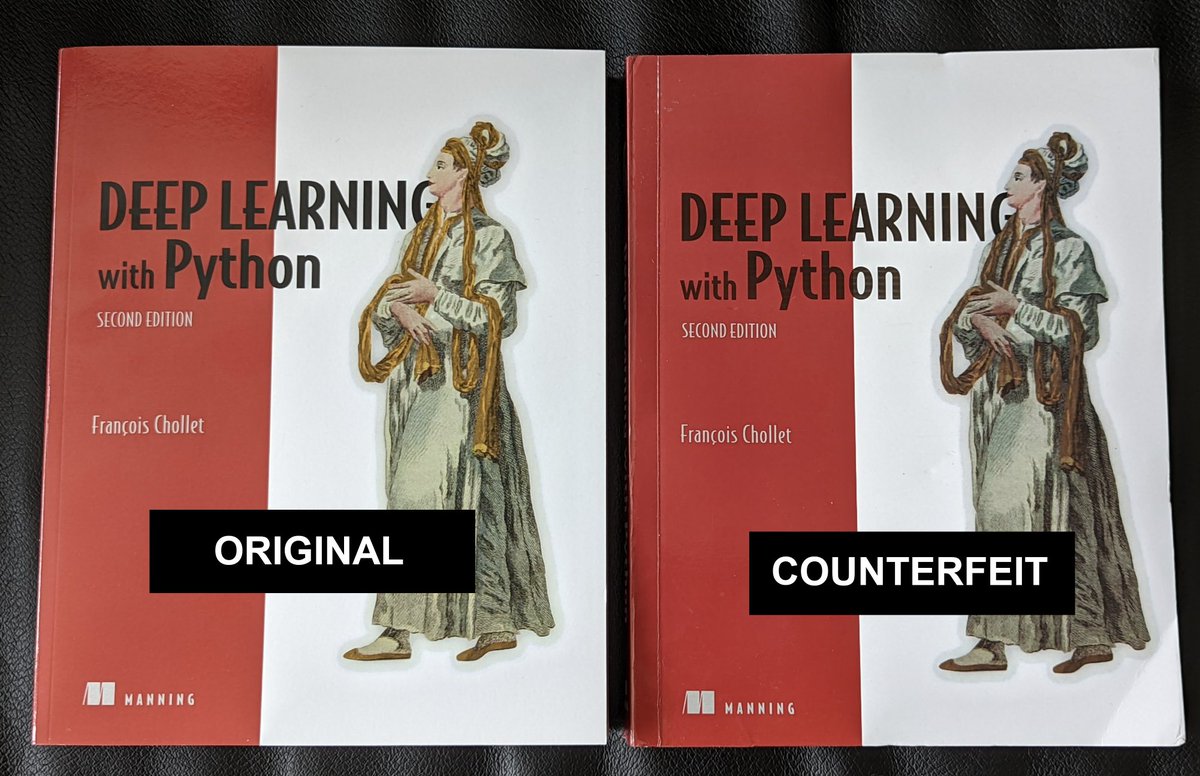

A: Anyone who has bought my book from Amazon in the past few months hasn't bought a genuine copy, but a lower-quality counterfeit copy printed by various fraudulent sellers.

https://twitter.com/fchollet/status/1545477711321186305

Q: How does this even happen?

A: Amazon lets any seller claim that they have inventory for a given book, and then proceeds to route orders (from the book's page) to that seller's inventory. In this case, Amazon even hosts the inventory and takes care of the shipping. (cont.)

(cont.) This has given rise to a cottage industry of fraudsters who "clone" books (which is easy when the PDF is readily available: you just need to contract a printer) and then claim to be selling real copies.

This is endemic for all popular textbooks on Amazon.

Q: How do I know if I'm about to buy a counterfeit copy?

A: Look for the name of the seller. If it's a 3rd party seller (i.e. not Amazon's own inventory) and it isn't a well-known bookstore, then it's a scammer. (cont.)

(cont.) They tend to have names like "Sacred Gamez", "Your Toy Mart", etc. That's because they started out with counterfeit toys, video games, etc. and eventually pivoted to technical books (higher-margin).

They've been active for years -- it's a highly lucrative model.

Q: How do I know if my copy is counterfeit?

A: The surest way to check is to try to register it with Manning at: manning.com/freebook

Other than that, the fakes have much lower print/make quality.

- Darker cover colors.

- Flimsier paper.

- Poorly bound.

- Cut smaller.

Q: What is Amazon doing about it?

A: Nothing. We've notified them multiple times, and nothing happened. The fraudulent sellers have been active for years.

The issue affects ~100% of Amazon sales of the book since March or April. That's because, amazingly, since fraudsters are claiming to have inventory, Amazon has stopped carrying its own inventory for the book (i.e. it has stopped ordering new copies from the publisher).

Q: Does this affect any other book?

A: Absolutely. It affects nearly all high-sales-volume technical books on Amazon. If you've bought a technical book on Amazon recently there's a >50% chance it's a fake.

E.g. @aureliengeron's book is also heavily affected, etc.

Besides books, it also affects a vast number of items across every product category -- vitamins, toys, video games, brand name electronics, brand name clothes, etc. But that's another story.

Q: So where should I buy the book?

A: Buy it directly from the publisher, here: manning.com/books/deep-lea…

Q: What should I do if I already bought a counterfeit copy?

A: Ask for a refund. Maybe this will put pressure on Amazon to look into the issue?

An update: this thread has caused more of a stir than I expected. A positive side effect is that the issue has been escalated and resolved by Amazon (at least in the case of my book). Thanks to all those involved!

Specifically, the default buying option for the 2nd edition of my book is now Amazon itself, rather than any third party seller.

For the 1st edition, the default option is still a counterfeit seller, though. Perhaps this widespread problem needs more than a special-case fix.

If it's impossible for Amazon to ensure the trustworthiness of 3rd party sellers, then perhaps there should be an option for publishers/authors to prevent any 3rd party seller from being listed as selling their book (esp. as the default option for people landing on the page).

It may not be entirely obvious at first that a given seller is selling exclusively counterfeit items, because that seller may appear to have thousands of ratings, 99% positive.

An important reason why is that Amazon takes down negative reviews related to counterfeits.

I spoke way too soon when I said the problem was resolved for my book -- 24 hours later a fraudulent seller is now back as the default buying option for both editions of my book. Sigh...

Exact same situation for @aureliengeron's ML bestseller right now: a fraudulent seller is the default. amazon.com/Hands-Machine-…

The level of mismanagement here is staggering.

This goes far beyond "a 3rd party seller on Amazon is selling counterfeit copies."

The gist of the issue is that for many bestselling books, Amazon is routing people towards counterfeits *by default*. This is a big deal because Amazon is the default online bookstore for most people.

If someone wants to buy my book or @aureliengeron's book (etc.), they will search for it on Amazon, find the book's official page, and click "buy".

And Amazon will be routing this purchase intent *by default* towards a seller of counterfeits.

This is hijacking a large fraction of total book sales -- for some books, a majority. This is theft of purchase intent (and that purchase intent typically originates outside of Amazon).

For authors and publishers, this represents a massive loss of revenue.

To use a metaphor -- it's not as if some guy on the street were selling bootleg items next to a massive supermarket that sold genuine ones.

It's as if this guy were empowered by the supermarket to systematically replace the genuine items on the shelves with his own fakes.

An update -- it has been nearly 5 days (and over 2M views) since I posted this thread. I regret to say that both editions of my book are *still* being sold by fraudulent sellers by default (new ones, though).

Do NOT buy my books on Amazon. Buy from the publisher directly.
