Co-founder @ndea. Co-founder @arcprize. Creator of Keras and ARC-AGI. Author of 'Deep Learning with Python'.

ARC-AGI-2 dataset: github.com/arcprize/ARC-A…

Read about our goals here: ndea.com

My full statement here: arcprize.org/blog/oai-o3-pu…

The "AI" of today still has near-zero (though not exactly zero) intelligence, despite achieving superhuman skill at many tasks.

https://twitter.com/MIT_CSAIL/status/1774467004201578566

Intelligence is found in the ability to pick up new skills quickly & efficiently, at tasks you weren't prepared for. To improvise, adapt and learn.

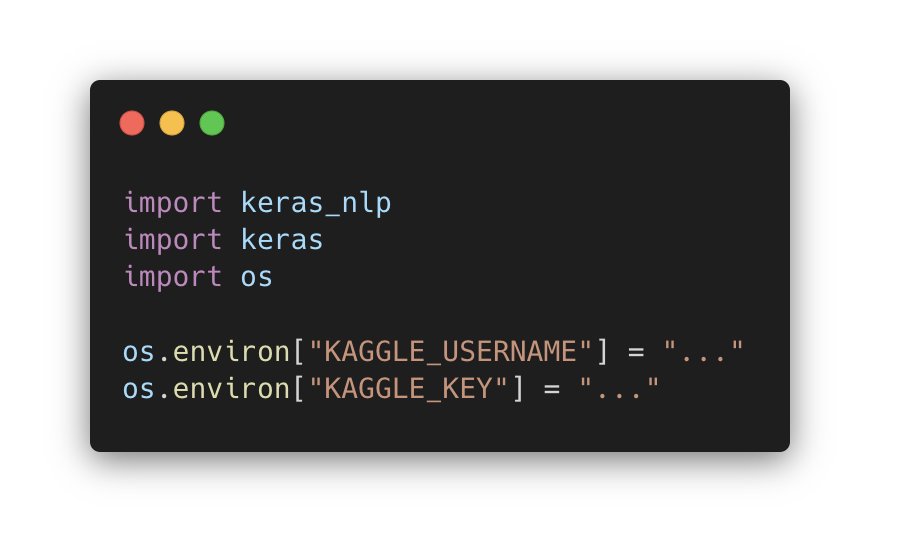

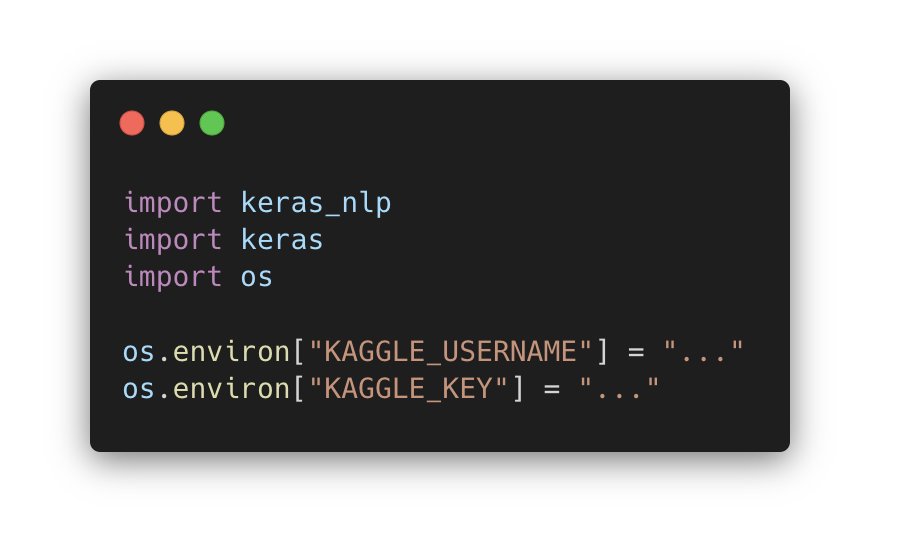

Next, let's instantiate the model and generate some text. You have access to 2 different sizes, 2B & 7B, and 2 different versions per size: base & instruction-tuned.
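The 2 sizes × 2 versions above give four checkpoints. Below is a minimal sketch, assuming the model is Gemma loaded through KerasNLP: the `gemma_*` preset names and `keras_nlp.models.GemmaCausalLM.from_preset` / `generate` come from KerasNLP, while the helpers `gemma_preset` and `generate_text` are hypothetical names introduced for illustration. The `keras_nlp` import is deferred so the preset-naming logic can be used without downloading any weights.

```python
# Minimal sketch, assuming Gemma via KerasNLP. The helper functions are
# hypothetical; only the preset names and GemmaCausalLM calls are KerasNLP's.

SIZES = ("2b", "7b")  # the two available model sizes


def gemma_preset(size: str, instruction_tuned: bool = False) -> str:
    """Return the KerasNLP preset name for a (size, version) pair."""
    if size not in SIZES:
        raise ValueError(f"size must be one of {SIZES}, got {size!r}")
    variant = "instruct_" if instruction_tuned else ""
    return f"gemma_{variant}{size}_en"


def generate_text(prompt: str, size: str = "2b",
                  instruction_tuned: bool = False,
                  max_length: int = 64) -> str:
    """Instantiate the model and generate a completion for `prompt`.

    Requires `keras_nlp` to be installed and access to the Gemma weights
    (e.g. Kaggle credentials); the import is deferred for that reason.
    """
    import keras_nlp

    model = keras_nlp.models.GemmaCausalLM.from_preset(
        gemma_preset(size, instruction_tuned)
    )
    # max_length caps the total sequence length (prompt + generated tokens).
    return model.generate(prompt, max_length=max_length)


# All four combinations: 2 sizes x {base, instruction-tuned}.
ALL_PRESETS = [gemma_preset(s, it) for s in SIZES for it in (False, True)]
```

For example, `generate_text("What is Keras?", size="2b", instruction_tuned=True)` would load the instruction-tuned 2B checkpoint (downloading it on first use) and return the generated string. The base versions are better suited to raw text continuation, the instruction-tuned ones to prompt-following.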