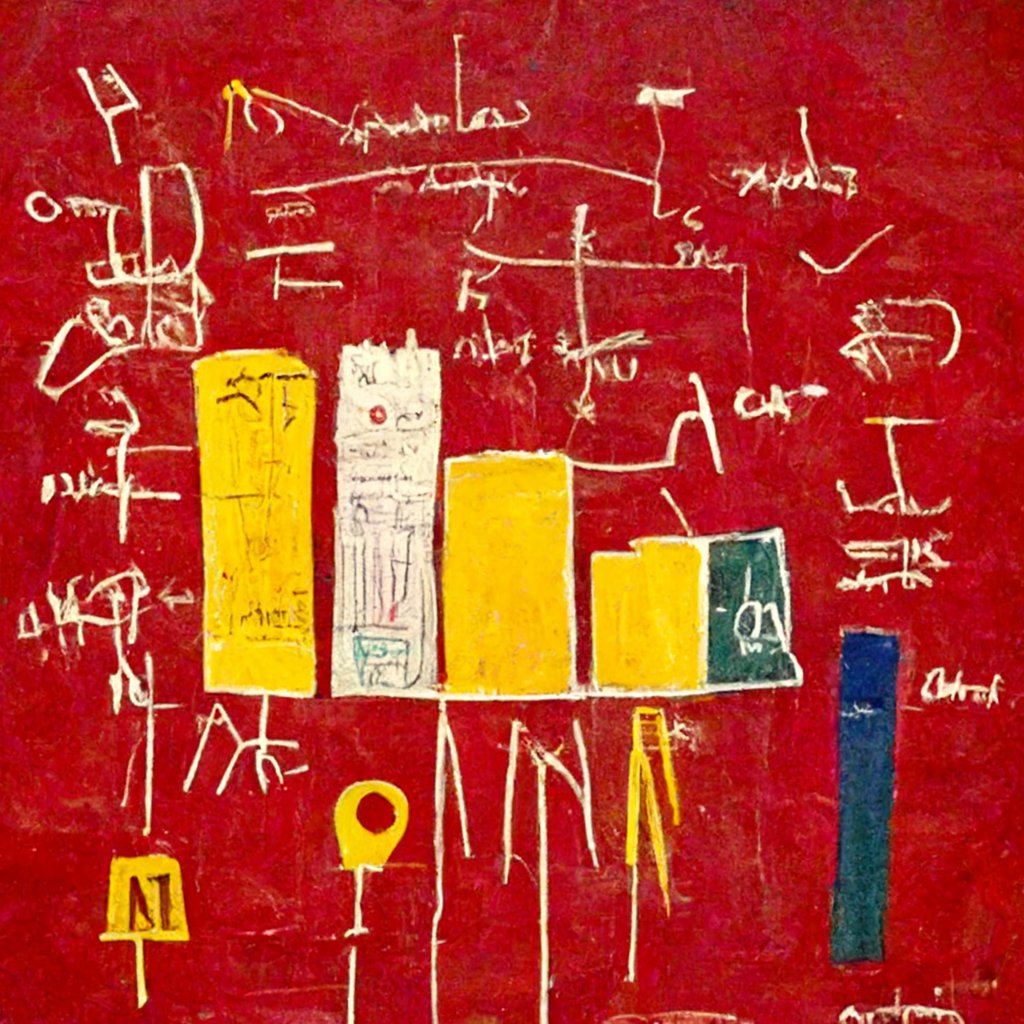

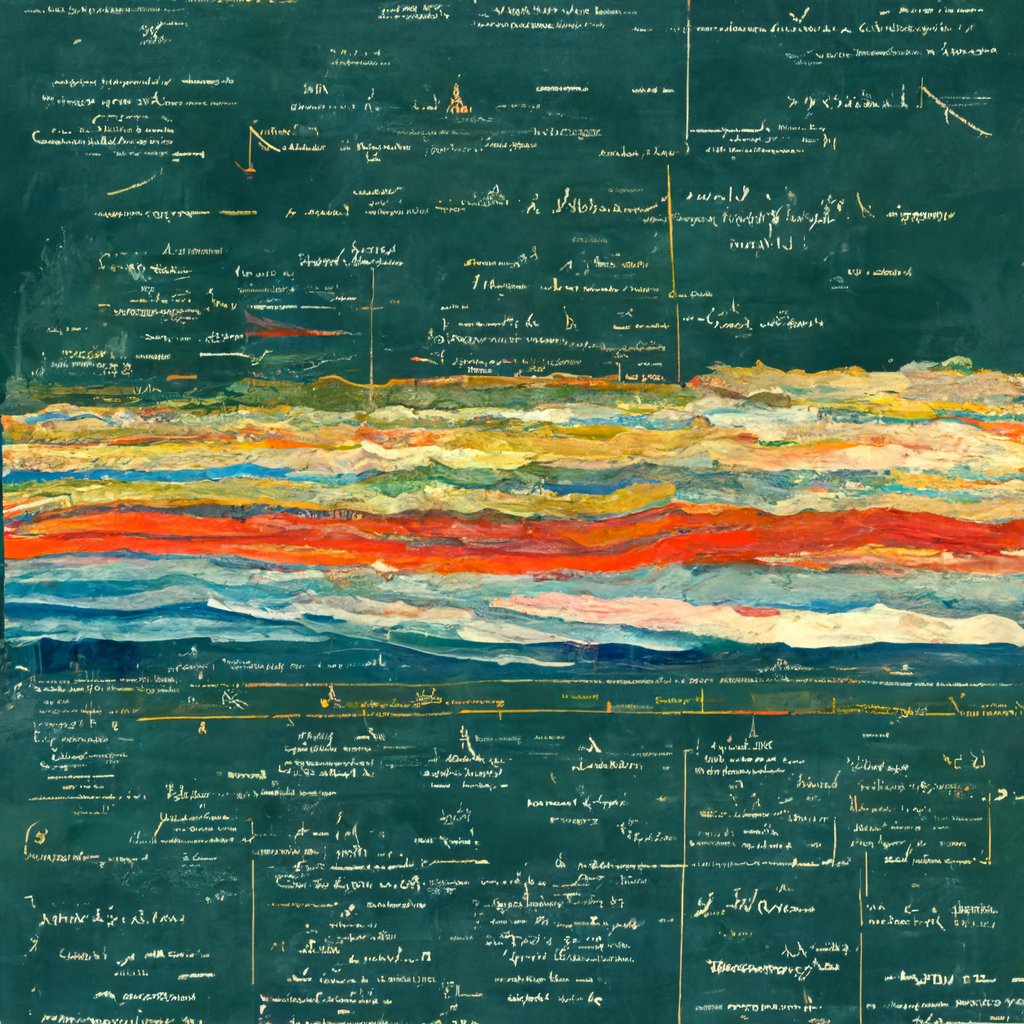

Data visualization inspiration thanks to DALL-E: how Rothko, Basquiat, Picasso, and Monet would create an academic chart.

A few more sources of data visualization inspiration: Bar charts as stained glass in an old cathedral. As a page from the Voynich Manuscript. As ancient stone monoliths on a grassy plain. Made of great columns of fire in the sky at the end of the world. cdn.discordapp.com/attachments/10…

Bar charts in the style of a 1950s comic book, by Leonardo da Vinci, made of Jello, on a knight's shield.

Scientific diagrams created by Vincent van Gogh, in the style of the Egyptian Book of the Dead, made of smoke and fire, haunted by ghosts.

Bar charts in the style of Magritte, a burning post-apocalyptic city, a Byzantine mosaic, an 80s punk album cover.

Bar chart by Lisa Frank, in a book of dread prophecy, traced by the masts of tall ships in a Turner painting, outlined by tornadoes.

Bar chart as cave painting, as Brutalist architecture, drawn by Studio Ghibli, in a frame of a Wes Anderson movie.

Bar chart as drawn by Dali, in the style of DALL-E (I asked it to create a bar chart in the style of AI), composed of (creepy) dollies, made out of dal.

Bar charts in the style of Keith Haring. As a traditional Chinese landscape. Carved into the rock of an alien planet. As a Hieronymus Bosch painting.

Bar charts as a Cézanne still life. As a scene in a Michael Bay movie. As a couture dress. Out of art deco furniture. (All of these are done in Midjourney, which I used for the first time yesterday!)

Since people keep asking for these, here are all the images I posted (plus some leftovers), at maximum resolution, under a Creative Commons Attribution license. Enjoy! drive.google.com/drive/folders/…
