Our biggest launch in years: nbdev2, now boosted with the power of @quarto_pub!

Use @ProjectJupyter to build reliable and delightful software fast. A single notebook creates a Python module, tests, @github Actions CI, @pypi/@anacondainc packages, & more.

fast.ai/2022/07/28/nbd…

What can you create with #nbdev with @quarto_pub? Well, for starters, check out our beautiful new website.

Created with nbdev, of course!

nbdev.fast.ai

That website is generated automatically from the notebooks in this repo.

Take a look around -- all the things you'd hope to see in a high-quality project are there. It's all done for you by nbdev. For instance, see the nice README? Built from a notebook!

github.com/fastai/nbdev/

nbdev v1 is already recommended by experts, and v2 is a big step up again.

"From my point of view it is close to a Pareto improvement over traditional Python library development." Thank you @erikgaas 😀

Here's an example of the beautiful and useful docs that are auto-generated by nbdev+Quarto.

nbdev.fast.ai/merge.html

Here's an example of an exported function in a notebook cell. It's automatically added to the Python module, and the documentation you see on the right is auto-generated.

nbdev.fast.ai/merge.html#nbd…

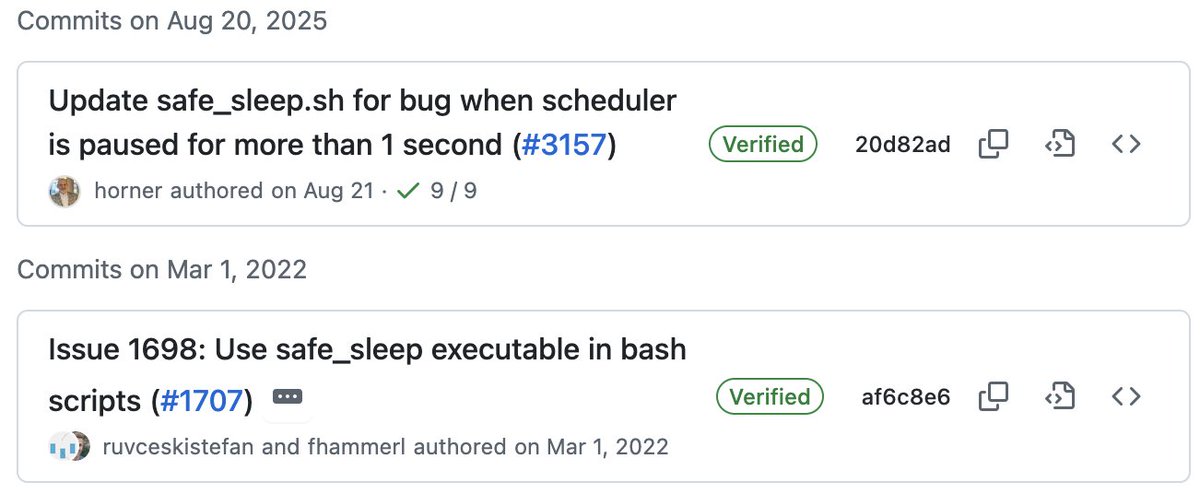
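
For anyone who hasn't used nbdev yet, here's a minimal sketch of what an exported cell might look like. It assumes nbdev2's `#| default_exp` and `#| export` directives. The `core` module name and the `say_hello` function are made up purely for illustration.

```python
#| default_exp core
# ^ Illustrative: in an nbdev2 notebook this directive (usually in its own cell
#   near the top) names the module that exported cells get written to.

#| export
def say_hello(to: str) -> str:
    "Say hello to somebody"
    # The docstring above is the kind of text nbdev shows in the generated docs.
    return f"Hello {to}!"
```

A plain assertion in a later, non-exported cell, such as `assert say_hello("Jeremy") == "Hello Jeremy!"`, works as a test that runs along with the rest of the notebook.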

Every time we update a notebook to change the docs, library, or tests, everything is checked by @github Actions automatically.

Here's the @pypi pip installer that's auto-generated. See the description? That's created for you from the notebook you use for your documentation homepage (just like the README, and the description for your conda package).

pypi.org/project/nbdev/

I've barely scratched the surface in this brief tweet thread! For much more information, take a look at the blog post authored with @HamelHusain

fast.ai/2022/07/28/nbd…

This launch wouldn't have been possible without some amazing people. I'd especially like to highlight Hamel & @wasimlorgat, who made nbdev2 a far better product than it would have been without them, JJ Allaire @fly_upside_down & the @quarto_pub team, & the @fastdotai community