🇦🇺 Co-founder: @AnswerDotAI/@FastDotAI ;

Prev: Professor@UQ; Stanford fellow; @kaggle founding president; founder @fastmail/@enlitic/…

https://t.co/16UBFTWzwQ

https://twitter.com/valigo_gg/status/1994169596929024103

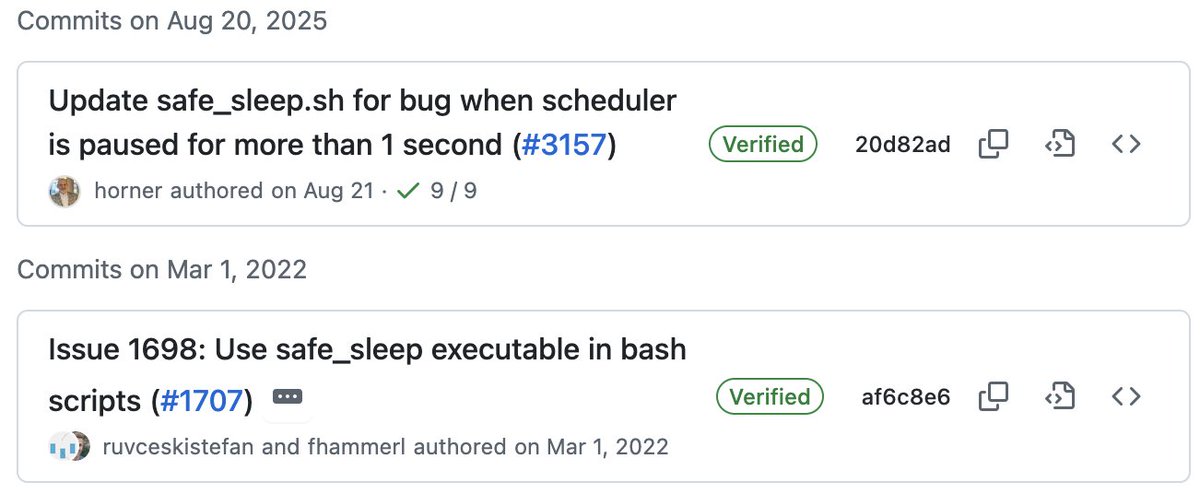

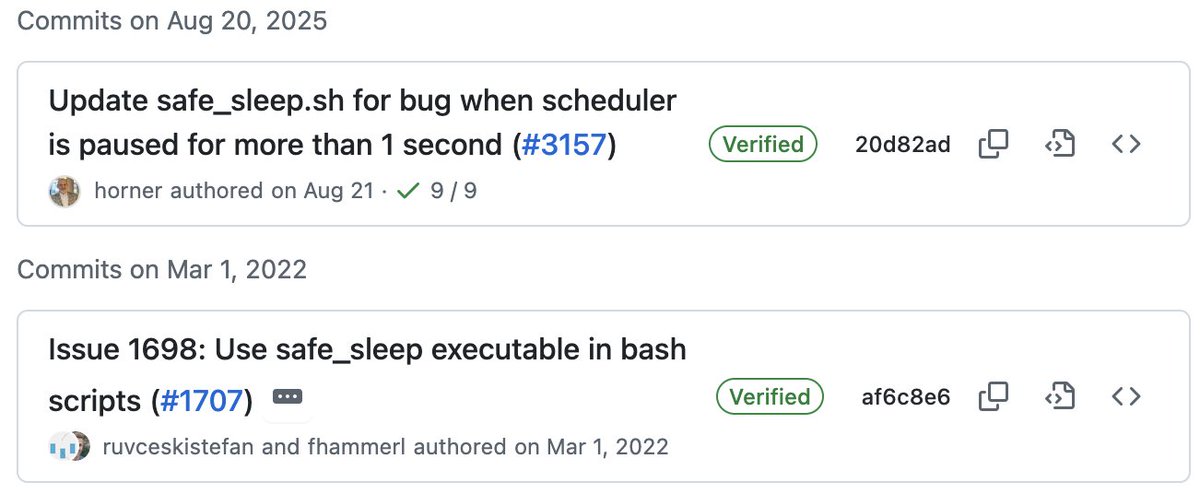

`safe_sleep` was added in 2022.

https://twitter.com/jeremyphoward/status/1926025745731559669

Here is the full original: daodejing.org.

https://twitter.com/levelsio/status/1906054159364710654

There have been many other important contributions, including attention (Bahdanau et al.), transformers, RLHF, etc.

https://twitter.com/davidbombal/status/1886875600025215420

If you are using a Chinese cloud based service hosted in China, then your data will be sent to a server in China.

ModernBERT is available as a slot-in replacement for any BERT-like model, with both 139M param and 395M param sizes.

https://twitter.com/PyTorch/status/1857500664831635882

I wonder if the @PyTorch analysis behind this is mistaken. I suspect most of the PyPI installs they're seeing are from CI and similar. Conda installs are the standard for end-user installation of PyTorch, afaik.

Here's the official API docs with details on pricing:

To get started, head over to the home page: .

https://twitter.com/HamelHusain/status/1808850347169100261

In this case @HamelHusain has enough reach on Twitter that he got someone to notice and fix the mistake. But that's not a solution for most people.

To replicate:

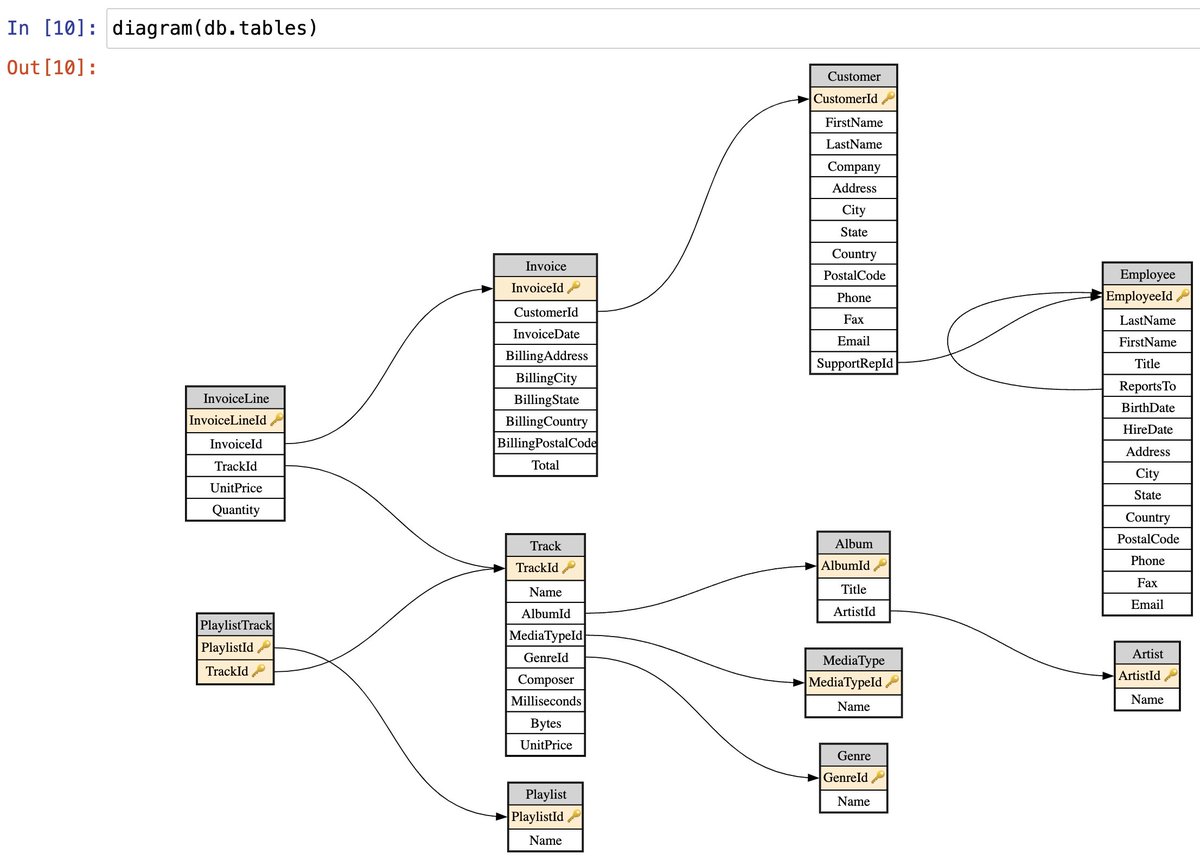

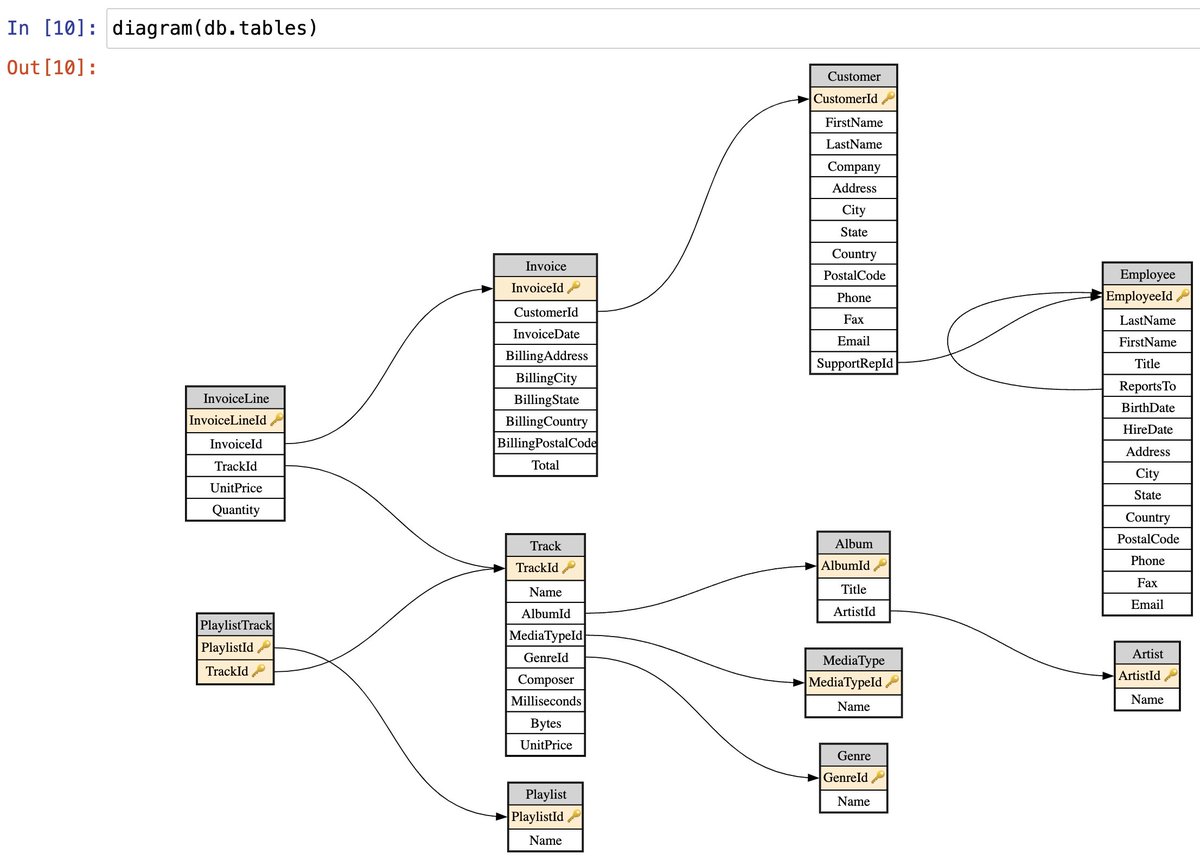

To install it, just do `pip install fastlite`. It'll install sqlite-utils automatically. (And sqlite itself is already installed with Python.)
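A quick way to check the last point (that sqlite already ships with Python) is to use the standard-library `sqlite3` module directly. This is only a minimal sketch: it does not use fastlite at all, and the `notes` table and the `sqlite_roundtrip` helper are made up for illustration.

```python
import sqlite3  # part of the Python standard library, no pip install needed


def sqlite_roundtrip():
    """Create an in-memory database, insert one row, and read it back."""
    conn = sqlite3.connect(":memory:")
    try:
        # Hypothetical table, used only for this demonstration.
        conn.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT)")
        conn.execute("INSERT INTO notes (body) VALUES (?)", ("hello",))
        conn.commit()
        return conn.execute("SELECT id, body FROM notes").fetchall()
    finally:
        conn.close()


rows = sqlite_roundtrip()
print("SQLite library version:", sqlite3.sqlite_version)
print(rows)
```

If this runs on a fresh Python install, sqlite is available without any extra setup, and `pip install fastlite` only needs to add the Python packages on top.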
