As an ECS on Fargate fan, I got a lot of questions about the recent incident / outage.

Let's talk about it... 🧵 1/43

TL;DR: meh. Incidents happen and this one did not teach us anything new about building on AWS. I expect more profound drama during these roaring 20s!

Disclaimer: everything fails all the time! I view incidents as "normal" and this thread reflects that.

Yes, AWS had incidents. Yes, us-east-1 is HUGE so it gets interesting incidents. Yes, AWS is bad at communication.

This is the default and well-known state!

I won't address bullshit arguments in here. Please go intellectually masturbate somewhere else!

No, multi-cloud is not a valid solution. No, multi-region is still really freaking complex and most of the time not worth it for the business. No, your datacenter won't do any better.

Now, let's get to our incident! Let's do a recap and see what happened.

If you log in to AWS and go to phd.aws.amazon.com/phd/home#/acco… you can see the full incident timeline and messages.

I'll spare you the horror and discuss the timeline here, using AWS' default PDT timezone.

On August 24, around 13:00, in us-east-1, ECS on Fargate and EKS on Fargate start experiencing errors: new containers sometimes fail to launch.

Running containers are totally fine. After a couple retries new containers successfully launch too.

Some don't even notice the errors.

Errors increase more and more.

At 15:30 errors are now common and AWS formally declares an incident. Personal Health Dashboards are updated.

It's officially an incident now, with people ranting on Twitter and in Slacks, annoyed that it took AWS this long to confirm the issue.

Skilled AWS engineers investigate and debug the problem and try some potential fixes.

During all this time, containers may or may not successfully start. Some containers start, some don't. AWS reports times when 50% and 70% of calls succeed and that's in line with what I saw.
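
To get a feel for why "a couple retries" was often enough, here is a back-of-the-envelope sketch. It assumes every launch attempt succeeds independently with the same success rate, which is a simplification and not something AWS stated; the function name is made up for this example.

```python
def chance_of_launch(success_rate: float, attempts: int) -> float:
    """Probability that at least one of `attempts` launch calls succeeds.

    Assumption: attempts are independent and share the same success rate.
    """
    if not 0.0 <= success_rate <= 1.0:
        raise ValueError("success_rate must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must not be negative")
    # All attempts fail with probability (1 - success_rate) ** attempts.
    return 1 - (1 - success_rate) ** attempts


if __name__ == "__main__":
    # The 50% and 70% figures are the success rates mentioned in the thread.
    for rate in (0.5, 0.7):
        for attempts in (1, 3, 5):
            chance = chance_of_launch(rate, attempts)
            print(f"{rate:.0%} success rate, {attempts} attempts: {chance:.1%}")
```

Under that assumption, a handful of retries gets most launches through, which would explain why some folks did not even notice the early errors.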

Since AWS does not have trivial incidents, it of course turns out it's a harder problem to solve than expected 😨

Looks like Fargate's underlying EC2 instances are not getting cleaned up properly and they become stuck.

There are indeed servers in serverless and they are cranky!

At 21:20 AWS decides to FULLY stop ECS on Fargate and EKS on Fargate container launches.

100% of requests fail now 😱

It's been 8 hours since the first error and 6 hours since the incident started. Skilled AWS engineers could not fix it otherwise, so this drastic action is taken.

With all the requests stopped, AWS engineers manage to fix the problem.

Now, ECS on Fargate and EKS on Fargate are both highly complex distributed systems. You don't just turn a hyperscale service back on! Gradual recovery needs to happen and that's what we see unfold next.

At 00:45 AWS starts allowing some ECS on Fargate and EKS on Fargate containers to start.

It's up to the universe if you're part of the lucky few that get to start containers now. Literally a few random AWS accounts see some requests succeeding now.

For the next hour, highly experienced AWS engineers are watching the system come back up and things are continuing to look good.

More and more accounts are now successfully creating new ECS on Fargate and EKS on Fargate containers.

By 01:55 the full 100% of AWS customers can start containers.

The system is still coming back up, so these containers are started at slower rates: 1 container per second per AWS account (1TPS).

At 04:00, since everything still looks good, the rate is increased to 2TPS.

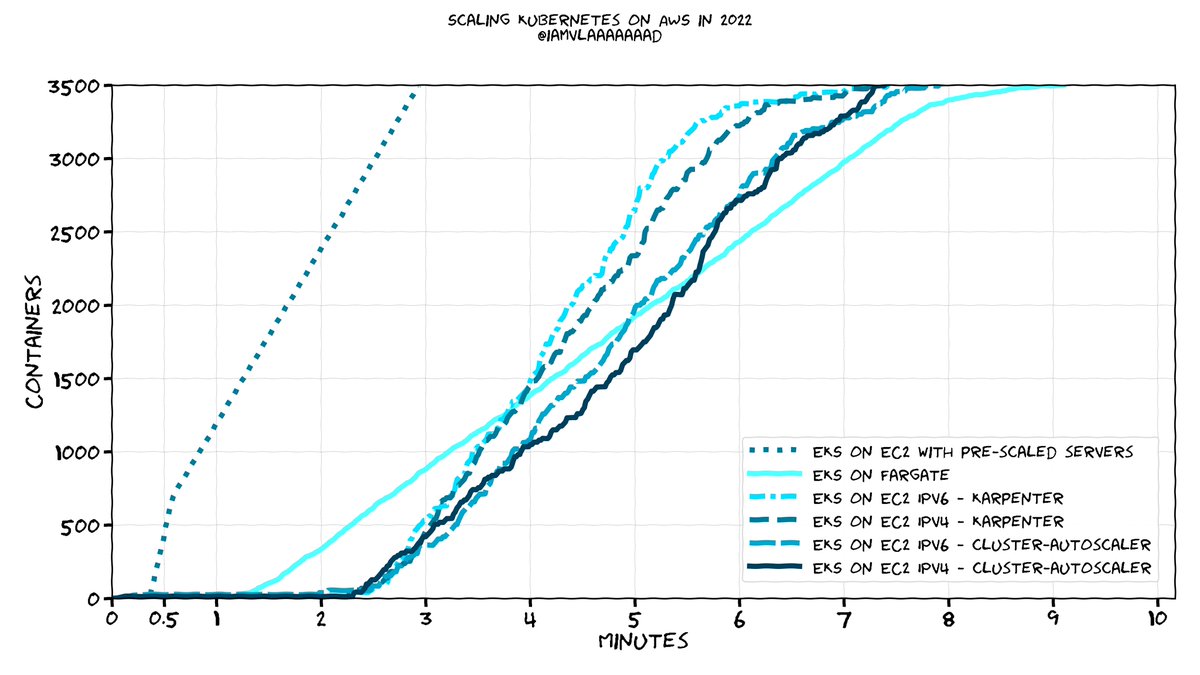

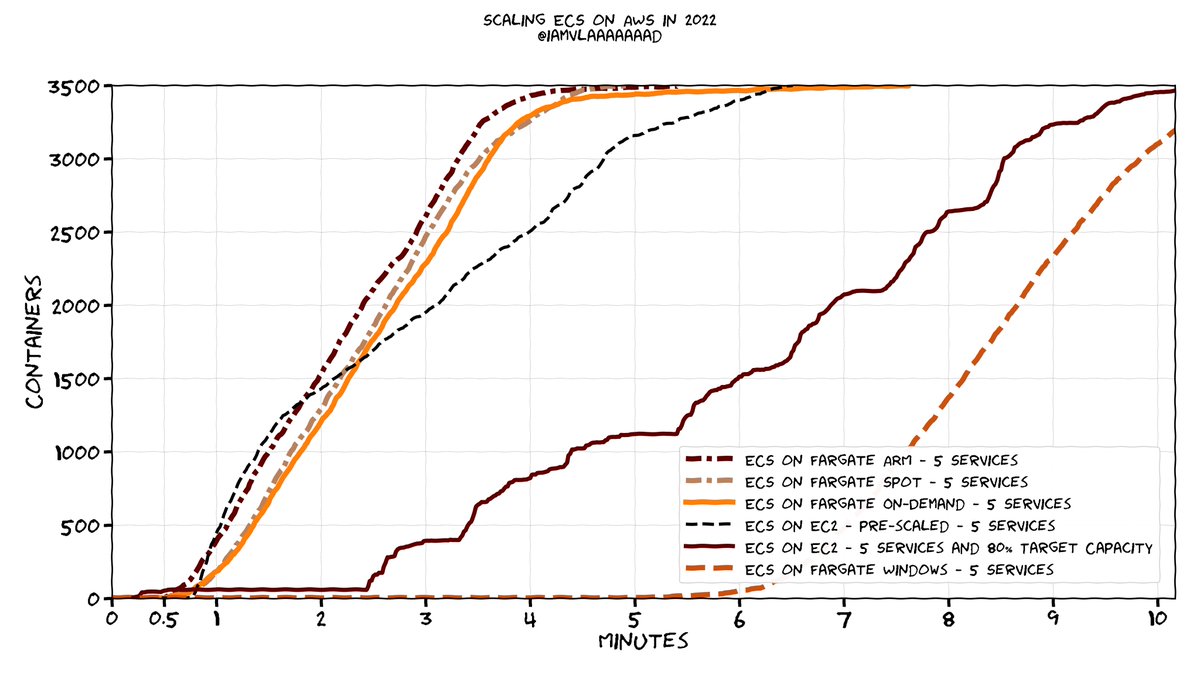

As an aside, in 2020, ECS on Fargate during normal operations and at full speed scaled at 1TPS!

This is slow, but not THAT slow.

At 04:34, since everything is still looking good, the rate is increased to 5TPS.

The fantastic AWS engineers continue to monitor things to make sure they don't break again, either from the same issue or from another issue due to the complex service coming back to life.

At 04:57, everything is progressing as expected and there are no issues, so the rate is yet again increased to 10TPS.

As an aside, in 2021, ECS on Fargate and EKS on Fargate at full speed scaled at 10TPS by default.

This is slow, but not that slow.

An hour later, at 06:05, things are still looking good and the rate is increased to 20TPS. The incident is formally declared as resolved.

Yay, we survived through this!

Yay, yet another incident in us-east-1!

Yay, Fargate got its first big incident just before turning 5!

TL;DR:

⛅ 13:00 to 21:20 degraded performance: some containers start, some don't

☠️ 21:20 until, depending on your luck, somewhere between 23:40 and 01:55: outage, all new launches fail. Already running containers are fine

🌤️ 00:45 to 06:00 degraded performance: containers start, but slower

As an aside, ever since Lambda came out, AWS builds on AWS. They don't have another separate AWS or any special privileges.

AWS services that run on ECS on Fargate also experienced degraded performance or outages: CloudShell, App Runner, and more!

I'd love for AWS to publish "Service A depends on X, Y, and Z" and to alert all Service-A users if Service-Z has an outage.

We know AWS maintains a dependency graph cause they talked about how when new regions are launched sometimes they discover loops 🤣

#awswishlist

Back to our incident, now that we know what happened, did we learn anything new from this incident? Any changes in how we architect?

Let's talk about it...

TL;DR: nothing new if you've been doing cloud for a while, otherwise congrats on being one of the lucky 10,000!

If you've been doing cloud for a while you likely did not learn anything new.

In the past we had multiple EC2 incidents where new instances would not start but running instances were fine => this is not a new failure mode.

Maybe some curiosities, but nothing new or dramatic here.

If you did not experience previous outages or if you did not have the opportunity to learn from previous incidents, congratulations!

You are one of the lucky 10,000 that get to learn from this outage!

To understand the 10,000 figure check out xkcd.com/1053/

🧑🏫 Lesson number 0: everything always fails and AWS is bad at communication!

🤷

Addendum: you can build a 99.9999% available service for 100 million or you can build a service that fails like... 3 days a year for 1 million. Business will often choose the latter and that's ok!
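
To put rough numbers on that tradeoff, here is a quick back-of-the-envelope sketch. It only converts between availability percentages and downtime per year; the helper names are mine and a year is assumed to be 365 days.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumption: a non-leap year


def downtime_seconds_per_year(availability_percent: float) -> float:
    """Downtime budget per year for a given availability target."""
    return SECONDS_PER_YEAR * (1 - availability_percent / 100)


def availability_percent(downtime_days_per_year: float) -> float:
    """Availability implied by a number of full days of downtime per year."""
    return 100 * (1 - downtime_days_per_year / 365)


if __name__ == "__main__":
    six_nines = downtime_seconds_per_year(99.9999)
    three_days = availability_percent(3)
    print(f"99.9999% leaves about {six_nines:.1f} seconds of downtime per year")
    print(f"3 days of downtime per year is about {three_days:.2f}% availability")
```

That is roughly half a minute of downtime a year versus three whole days, which is why the price tags are so far apart.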

🧑🏫 Lesson number 1: have good observability in place!

If your systems were impacted, did you notice the errors before AWS declared an incident? Did you understand them?

Genuinely the impact might've been too small to trigger anything, so it's ok if you did not notice it!

Potential indicator: 📈SLIs/SLOs for response times

If you were running at, say, 90% capacity and could not add containers, then response times and latency should go up, no?

Again, these might not have triggered and that's ok! It depends on your scale: for example, only 1 of my clients had an SLI alert.

Potential indicator: 📈ECS/EKS events

For everything regarding your cluster an event is emitted: ECS can natively send events to EventBridge, and for EKS you can parse the logs or use a third-party tool.

This is quite an in-depth implementation tho — usually not worth it.

Potential indicator: 📈Number of actual running containers versus number of desired running containers

This is debatably the easiest and most common metric. Depending on your scale, it might not have been triggered tho!

Some clients had this trigger, some did not.
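
A minimal sketch of that desired-versus-running check, assuming you already collect both numbers somewhere (for ECS, DescribeServices returns `desiredCount` and `runningCount` per service). The class, the function names, and the 10% threshold are placeholders I picked for illustration.

```python
from dataclasses import dataclass


@dataclass
class ServiceCounts:
    name: str
    desired: int  # e.g. desiredCount from ECS DescribeServices
    running: int  # e.g. runningCount from ECS DescribeServices


def capacity_shortfall(counts: ServiceCounts) -> float:
    """Fraction of desired containers that are not running (0.0 to 1.0)."""
    if counts.desired <= 0:
        return 0.0
    missing = max(counts.desired - counts.running, 0)
    return missing / counts.desired


def should_alert(counts: ServiceCounts, max_shortfall: float = 0.1) -> bool:
    """Alert when more than `max_shortfall` of the desired capacity is missing.

    The 10% default is an arbitrary placeholder; tune it to your scale.
    """
    return capacity_shortfall(counts) > max_shortfall


if __name__ == "__main__":
    for counts in (ServiceCounts("api", 50, 30), ServiceCounts("worker", 50, 48)):
        print(counts.name, f"{capacity_shortfall(counts):.0%}", should_alert(counts))
```

In practice you would probably also require the gap to last a few minutes, since every normal deployment opens a short one.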

🧑🏫 Lesson number 2: when AWS declares an incident, you should declare an incident too!

It's fine if you missed the sporadic initial errors, but once AWS declared an incident your on-call had to be alerted!

ALL my clients got alerted and you should've had this too!

Yes, it may be a noisy alert at times, but it's worth it — this also creates a really nice culture of "oh, everything DOES fail all the time".

These events are served on a "best effort" basis, so also subscribe to RSS and Twitter feeds: docs.aws.amazon.com/health/latest/…
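
One way to wire this up is an EventBridge rule on AWS Health events that pages your on-call. Below is a sketch of such an event pattern plus a tiny local matcher to sanity-check it. The `aws.health` source and `AWS Health Event` detail-type are what AWS Health publishes; the service codes in the filter and the sample event are my assumptions, so check them against the events you actually receive. The matcher only covers exact-value matching, a small subset of what EventBridge supports.

```python
import json

# Event pattern for an EventBridge rule that forwards AWS Health issues.
HEALTH_EVENT_PATTERN = {
    "source": ["aws.health"],
    "detail-type": ["AWS Health Event"],
    "detail": {
        "service": ["ECS", "EKS"],  # assumption: verify the codes you receive
        "eventTypeCategory": ["issue"],
    },
}


def matches(pattern: dict, event: dict) -> bool:
    """Exact-value matching only: lists are allowed values, dicts nest."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


if __name__ == "__main__":
    # Hypothetical, heavily trimmed event for local testing.
    sample_event = {
        "source": "aws.health",
        "detail-type": "AWS Health Event",
        "region": "us-east-1",
        "detail": {"service": "ECS", "eventTypeCategory": "issue"},
    }
    print(json.dumps(HEALTH_EVENT_PATTERN, indent=2))
    print("would page on-call:", matches(HEALTH_EVENT_PATTERN, sample_event))
```

Since delivery is best effort, treat this as one signal among several and not as the only pager trigger.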

🧑🏫 Lesson number 3: when there's an incident, know what matters for your company!

AWS made it clear that only new containers are affected and proposed mitigations:

1) migrate from Fargate to EC2 if you can (hard, but cheap)

2) stop scaling down (easy, but expensive)

At 15:30 the incident was official and it was made clear that *only new* containers are affected.

At 20:46 AWS explicitly said we should disable scaling down for Fargate containers since running containers are fine.

Only 30 minutes later were new launches disabled!

⏲️ Does your company care more about staying up than money?

The second the incident started or, at worst, when AWS explicitly said it, you should've scaled up to a sane maximum.

Usually scaling up and down between 30 and 50 containers? Forced static 75 containers, scaling disabled!
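
As a rough sketch of that "pin it and stop scaling" move for an ECS service: the snippet below only builds the arguments, it does not call AWS. The field names are the ones Application Auto Scaling's RegisterScalableTarget takes; the cluster and service names and the 1.5x headroom factor (which turns the usual max of 50 into 75) are made up for illustration.

```python
import math


def pinned_capacity(usual_max: int, headroom: float = 1.5) -> int:
    """Static container count to hold during the incident.

    The 1.5x headroom is a hypothetical "sane maximum", not an AWS recommendation.
    """
    return math.ceil(usual_max * headroom)


def static_scaling_kwargs(cluster: str, service: str, usual_max: int) -> dict:
    """Arguments that pin an ECS service by setting min and max to the same value."""
    capacity = pinned_capacity(usual_max)
    return {
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "MinCapacity": capacity,
        "MaxCapacity": capacity,
    }


if __name__ == "__main__":
    # "prod" and "api" are placeholder names.
    print(static_scaling_kwargs("prod", "api", usual_max=50))
```

You would pass this dict to boto3's `application-autoscaling` client as `register_scalable_target(**kwargs)`. With min equal to max, scaling policies have no room to scale down.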

This is what most folks did and it made this incident a non-event.

"Oh, only new containers are affected. Meh, let's make sure we have no new containers for a while"

This is what most of my customers did. It took a while for all containers to successfully start though.

If your on-call engineers did not feel comfortable making this tradeoff OR if your engineers did not know what your company prioritizes between money and staying up... you have a problem!

Seriously, look into this! ⚠️

💸 Does your company care more about money than staying up?

That's a valid option too! Gracefully fail your apps, enable some downtime mode, do whatever your plan is in these cases. You do have a plan, right?

In my case, some customers just disabled a couple non-vital workflows.

The "switch workers from Fargate to EC2" line was interesting and unexpected.

For EKS clusters with both Fargate and EC2 workers this switch was trivial, if your code could handle it. Definitely something to architect for!

For anything else... I'd say it's over-engineering.

🧑🏫 Lesson number 4: you need operational feature flags!

Again, everything fails all the time, so make sure you can handle failure gracefully.

Operational feature flags are lifesaving in moments like these.

↕️ "We only have 30% of our desired capacity, flip the switch so we don't do that complex processing anymore. This way we can handle all the traffic with 30%"

↕️ "Service X is barely working due to the incident, let's disable that for now but we'll let the rest of the app work"

↕️ "Oops, scaling is broken. Let's disable aggressive scaling and let's reuse workers since we don't know how easily new workers will come up"

These depend a lot on what your company is doing and on how your services are architected, but there are always options!
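
To make the idea concrete, here is a minimal sketch of an operational feature flag guarding the "complex processing" path. All names are hypothetical, and reading flags from environment variables is only to keep the example self-contained.

```python
import os

# Hypothetical flags; True means the feature runs during normal operations.
DEFAULT_FLAGS = {
    "complex_processing": True,
    "service_x": True,
    "aggressive_scaling": True,
}


def flag_enabled(name: str, env=None) -> bool:
    """Read OPS_FLAG_<NAME> from the environment, else use the default."""
    env = os.environ if env is None else env
    raw = env.get(f"OPS_FLAG_{name.upper()}")
    if raw is None:
        return DEFAULT_FLAGS[name]
    return raw.strip().lower() in {"1", "true", "on", "yes"}


def handle_request(payload: str, env=None) -> str:
    """Serve a cheaper response when complex processing is switched off."""
    if not flag_enabled("complex_processing", env):
        return f"basic:{payload}"  # degraded but still answering
    return f"enriched:{payload.upper()}"  # stand-in for the expensive path


if __name__ == "__main__":
    print(handle_request("hello", env={}))
    print(handle_request("hello", env={"OPS_FLAG_COMPLEX_PROCESSING": "off"}))
```

In an incident like this one you would want the flags stored somewhere you can flip at runtime, without launching new containers, since launching new containers was exactly what was broken.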

🧑🏫 Lesson number 5: only one production AWS account is not ideal!

When things recovered, they went on a per-AWS-account basis. If you had multiple AWS accounts, some accounts recovered way faster than others. If you only had 1 AWS account that was at the end of the line...

I think that's all the lessons from this incident, but I am biased on this since I've been doing cloud for a while now 🤔

Do you have any other learnings from this incident? Anything unexpected happen? Did your experience not match the one I had?

If so, please reply to this!

In conclusion, meh.

If you've been doing cloud for a while, this was a "heh, Fargate got its first big incident and it's not what I expected"

If you're newer to cloud, congrats! You got to learn 🎉

Read the unrolled version of this thread here: typefully.com/iamvlaaaaaaad/…

43/43
