You may know that @huggingface Accelerate has big-model inference capabilities, but how does that work?

With the help of #manim, let's dig in!

Step 1:

Load an empty model into memory using @PyTorch's `meta` device, so it uses a *super* tiny amount of RAM
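To make Step 1 concrete, here is a minimal sketch using plain PyTorch rather than Accelerate itself. The layer size is an arbitrary assumption for illustration. It shows that a module created on the `meta` device keeps its shapes but allocates no weight storage:

```python
import torch
from torch import nn

# A layer created on the `meta` device records shapes and dtypes,
# but no storage is allocated for its weights.
# (4096 x 4096 is an arbitrary size chosen for this example.)
layer = nn.Linear(4096, 4096, device="meta")

# Shape information is still available for planning...
weight_shape = tuple(layer.weight.shape)

# ...so we can work out what the weights would cost once materialized.
n_params = sum(p.numel() for p in layer.parameters())
bytes_if_float32 = n_params * 4

print(layer.weight.is_meta, weight_shape, bytes_if_float32)
```

Accelerate wraps the same idea in its `init_empty_weights` context manager, so a whole model can be built this way.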

Step 2:

Load a single copy of the model's weights into memory

Step 3:

For each group of parameters, follow the `device_map`: either store the checkpoint weights using @numpy or move them to a device, then free up our memory
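A `device_map` is a dictionary from module names to devices (a GPU index, `"cpu"`, or `"disk"`). As a rough sketch of how one could be built, here is a greedy assignment in pure Python. The helper `build_device_map`, the layer names, and the sizes are all hypothetical, and this is not Accelerate's actual algorithm:

```python
def build_device_map(layer_sizes, budgets):
    """Assign layers, in order, to the first device that still has room.

    Hypothetical helper for illustration only. `budgets` is ordered by
    priority, and its last entry acts as a catch-all for what is left.
    """
    devices = list(budgets)
    remaining = dict(budgets)
    device_map = {}
    idx = 0
    for name, size in layer_sizes.items():
        # Move on to the next device once the current one is full.
        while idx < len(devices) - 1 and remaining[devices[idx]] < size:
            idx += 1
        device = devices[idx]
        remaining[device] -= size
        device_map[name] = device
    return device_map


# Made-up sizes (think "GB") for a tiny five-part model.
layer_sizes = {"embed": 2, "block.0": 3, "block.1": 3, "block.2": 3, "head": 2}
budgets = {0: 5, "cpu": 6, "disk": float("inf")}

device_map = build_device_map(layer_sizes, budgets)
print(device_map)
```

With these numbers the first two parts fit on GPU 0, the next two spill to the CPU, and the last one is offloaded to disk.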

Step 4:

Load a shard of the offloaded weights onto the CPU, into the empty model from Step 1, and add hooks to change device placements
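One way to picture the loading half of Step 4, again with plain PyTorch instead of Accelerate's internals: give the `meta` model real (uninitialized) CPU storage, then copy saved weights into it. The `source` layer below is only a stand-in for a checkpoint shard, and the sizes are assumptions:

```python
import torch
from torch import nn

# Stand-in for a checkpoint shard: weights from an ordinary layer.
source = nn.Linear(4, 2)
shard = source.state_dict()

# The "empty" model from Step 1: shapes only, no storage.
empty = nn.Linear(4, 2, device="meta")

# Allocate uninitialized CPU storage for it, then fill in the real weights.
empty = empty.to_empty(device="cpu")
empty.load_state_dict(shard)

print(empty.weight.device, torch.equal(empty.weight, source.weight))
```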

Step 5:

Pass an input through the model, and the hooks will automatically move each layer's weights from CPU -> GPU and back as the input passes through it.

You're done!
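The hook idea from Steps 4 and 5 can be sketched with PyTorch's standard forward hooks. This is a simplified stand-in, not Accelerate's own hook classes. The names `move_in` and `move_out` are hypothetical, and the code falls back to the CPU as the execution device when no GPU is available:

```python
import torch
from torch import nn

exec_device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
offload_device = torch.device("cpu")
placements = []  # log of where each layer's weights were at each step


def move_in(module, args):
    # Runs just before the layer's forward: bring weights and inputs over.
    module.to(exec_device)
    placements.append(("in", module.weight.device.type))
    return tuple(a.to(exec_device) for a in args)


def move_out(module, args, output):
    # Runs just after the layer's forward: send the weights back.
    module.to(offload_device)
    placements.append(("out", module.weight.device.type))


model = nn.Sequential(nn.Linear(8, 8), nn.Linear(8, 2))
for layer in model:
    layer.register_forward_pre_hook(move_in)
    layer.register_forward_hook(move_out)

out = model(torch.randn(1, 8))
print(placements)
```

Only one layer's weights sit on the execution device at a time, which is what lets a model larger than GPU memory still run.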

Now here's the entire process (sped up slightly)

If you're interested in learning more about Accelerate or enjoyed this tutorial, be sure to read the full tutorial (with the complete animation) in the documentation!

huggingface.co/docs/accelerat…

This was completely inspired by @_ScottCondron. Manim certainly has a learning curve, but I think it came out pretty okay :)

https://twitter.com/_ScottCondron/status/1366117443136069639?s=20&t=PwCi3fyj7uFNATv-U7qk7Q

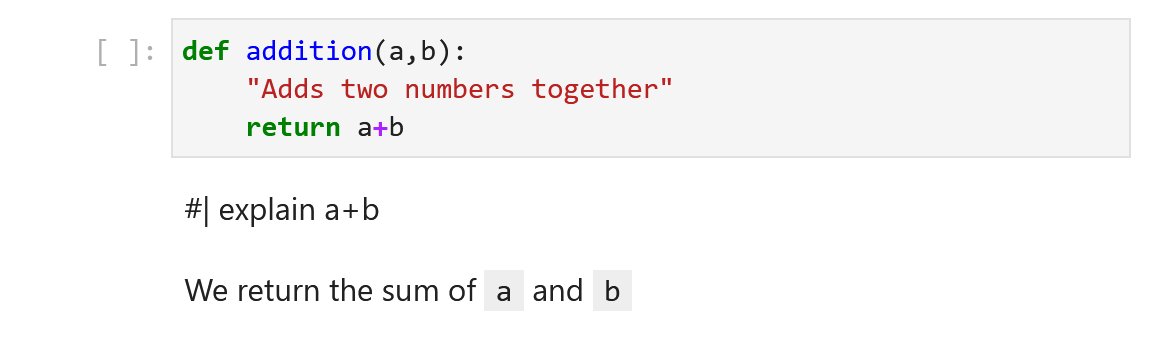

@huggingface @PyTorch For those who want to see the @manim_community code, it's live here: github.com/huggingface/ac…
