Language Models have taken #NLProc by storm. Even if you don’t directly work in NLP, you have likely heard and possibly, used language models. But ever wonder who came up with the term “Language Model”? Recently I went on that quest, and I want to take you along with me. 🧶

I am teaching a graduate-level course on language models and transformers at @ucsc this Winter, and out of curiosity, I wanted to find out who coined the term “Language Model”.

First, I was a bit ashamed I did not know this fact after all these years in NLP. Surely, this should be in any NLP textbook, right? Wrong! I checked every NLP textbook I could get my hands on, and all of them define what an LM is, without giving any provenance to the term.

Here's an example from the 3rd edition of @jurafsky and Martin. Other books do it similarly.

I also quickly skimmed some popular NLP courses, including ones from Johns Hopkins, Stanford, Berkeley, etc., and they don't cover it either. You might be thinking that this detail is not important and thereby justifying its omission.

Normally, I would agree with you that there's no point learning random historical facts for their sake unless the provenance shows us to understand better what's being examined. So on we go to the quest! 🐈

Now, everyone knows Shannon worked out the entropy of the English language as a means to his end -- developing a theory of optimal communication.

His 1948 paper is very readable, but there is no mention of the term "Language Model" in it or in any of his follow-up works. people.math.harvard.edu/~ctm/home/text…

The next allusion to a language model was in 1958 by @ChomskyDotInfo in his famous paper on the "three models for the description of language", where he calls them "Finite State Markov Processes".

Chomsky got too caught up with grammar and did not think much of these finite state Markov Processes, and neither did he use the term "Language Model" for them. chomsky.info/wp-content/upl…

I also knew @jhuclsp had a famous 1995 workshop on adding syntax in language models ("LM95"). Going through the proceedings, it appears like the term Language Model was very operational by then. So, the term must have originated somewhere between 1958 and 1995.

After some digging, I have finally landed on this 1976 paper: citeseerx.ist.psu.edu/viewdoc/downlo…

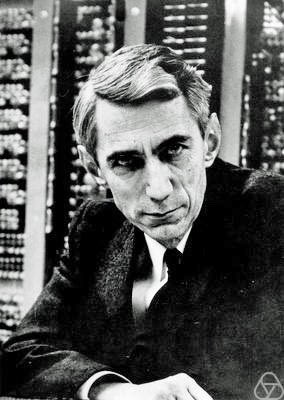

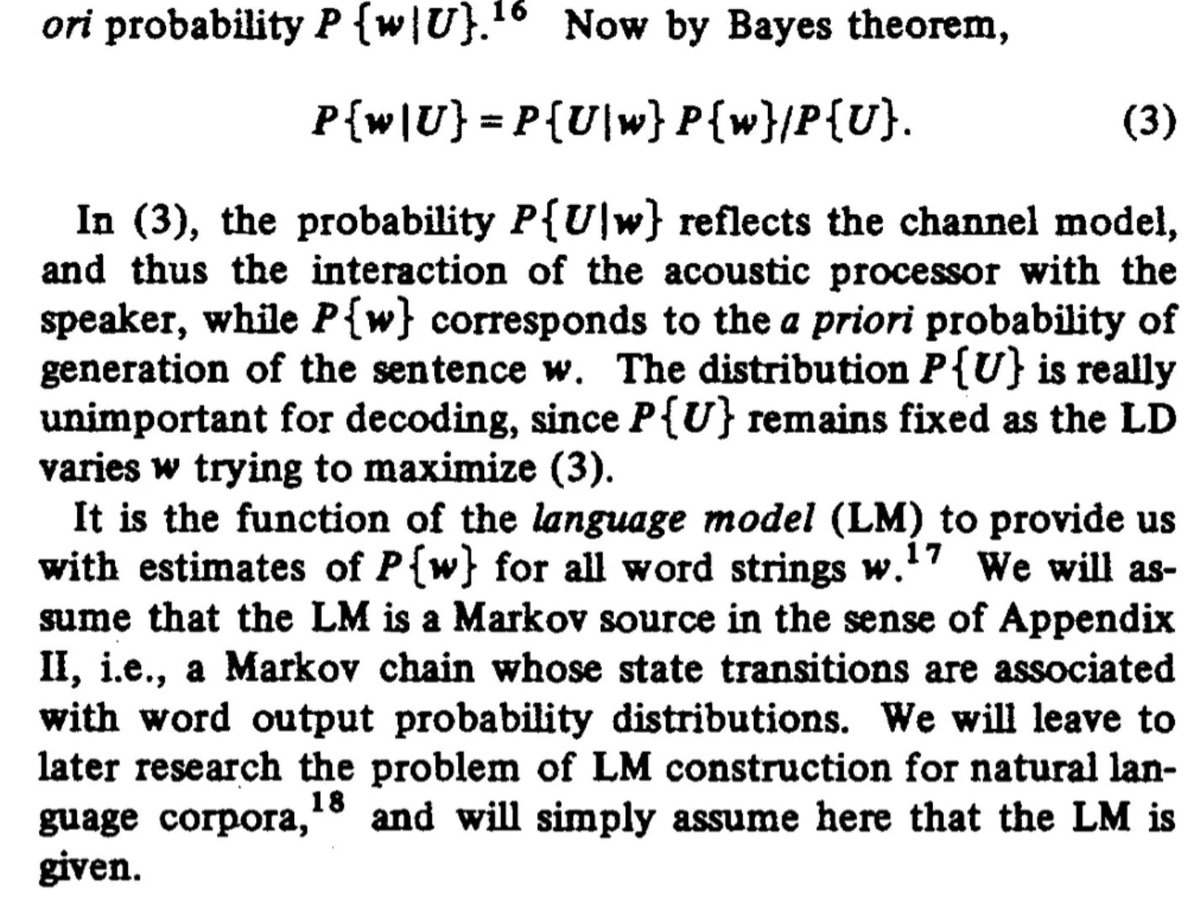

I feel quite confident that this is the first source, and Fred Jelinek was likely the first person to use the term "language model" in the scientific literature; it happens specifically in this paragraph where the term shows up in italics:

The paper itself is a landmark paper. It first described modern pre-deep learning ASR engines to the world, and the architecture described there is still used in countless deployments even today. Fred lays out the architecture as follows:

Later in the text, Fred clearly points out that the language model (used for the first time, in italics) is just one of the ways to do this linguistic decoding.

Footnote #17 is a dig at Chomsky, where Fred is basically saying, “don’t read too much into LMs. They just define the probability of a sequence. There’s nothing linguistic about it.”

This footnote is actually quite important. Today, with large language models sweeping NLP tasks across the board with their performance, it might be understandable to think LMs "understand" language.

@Smerity dug out this gem for me from 5 years ago (yes, I have friends like that) where I contradict that. Is he right?

If you are in the Fred Jelinek camp, Language Modeling per se is not an NLP problem. That LMs are used in solving NLP problems is a consequence of the language modeling objective. Much like the combustion engine science has nothing to do with flying, but jet engines use them.

In this world of neural LMs, I would define Language Models as intelligent representations of language. I use “intelligence” here in a @SchmidhuberAI-style compression-is-intelligence manner. arxiv.org/abs/0812.4360

Pretext tasks, like the MLM task, capture various aspects of language, and reducing the cross-entropy loss is equivalent to maximizing compression, i.e., “intelligence”.

So to land this thread, knowing where terms come from matters. It allows us to understand precisely when multiple interpretations are possible from the surface meaning of the term. Next time you want to cite something canonical for LMs, don’t forget (Jelinek, 1978)! 🧶🐈

PS: More AI folks should know about Fred Jelinek. He's a giant on whose shoulders many of us stand. web.archive.org/web/2016122819…

Correction: one of my earlier tweets refers to the paper in question as (Jelinek, 1978), but as evident from the bibtex entry, it’s (Jelinek, 1976).

• • •

Missing some Tweet in this thread? You can try to

force a refresh