1/ Hearing about #BERT and wondering why #radiologists are starting to talk more about this Sesame Street character?

Check out this #tweetorial from TEB member @AliTejaniMD with recommendations to learn more about #NLP for radiology.

2/ #BERT is a language representation model taking the #NLP community by storm, but it’s not new! #BERT has been around since 2018, from @GoogleAI. You may interact with #BERT every day (chatbots, search tools).

But #BERT is relatively new to healthcare. What makes it different?

3/ For starters, #BERT is “bidirectional”: it doesn’t read text in a single direction (left-to-right or right-to-left). Instead, its #Transformer-based architecture provides an #attention mechanism that helps the model learn each word’s context from ALL of the surrounding words.
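
To make “bidirectional” concrete, here is a minimal sketch of scaled dot-product attention weights in plain Python. It assumes hypothetical 2-D toy vectors for three tokens and, as a simplification, uses each vector directly as its own query and key (real BERT learns separate query/key/value projections and runs many attention heads in parallel). With `causal=True`, each token may only attend to itself and earlier tokens, as in a left-to-right model; without that mask, every token attends to every other token.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability; exp(-inf) evaluates to 0.0.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(vectors, causal=False):
    """Row i holds how strongly token i attends to each token j."""
    d = len(vectors[0])
    rows = []
    for i, query in enumerate(vectors):
        scores = []
        for j, key in enumerate(vectors):
            if causal and j > i:
                # A left-to-right model is not allowed to look at later tokens.
                scores.append(float("-inf"))
            else:
                # Scaled dot product between the query and key vectors.
                scores.append(sum(q * k for q, k in zip(query, key)) / math.sqrt(d))
        rows.append(softmax(scores))
    return rows


# Hypothetical toy embeddings for the tokens "no", "acute", "fracture".
tokens = ["no", "acute", "fracture"]
vectors = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]

bidirectional = attention_weights(vectors)
left_to_right = attention_weights(vectors, causal=True)

# "no" can draw on "fracture" for context only in the bidirectional case.
print(round(bidirectional[0][2], 3), left_to_right[0][2])
```

In a full model these weights are then used to mix value vectors; the sketch only shows which positions each token is allowed to see.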

4/ Interested in the architecture? Check out details from those who created the original model: arxiv.org/abs/1810.04805

5/ #BERT is PRE-TRAINED on a large corpus of text, specifically English @Wikipedia and the BooksCorpus. That’s ~3.3 BILLION words! This enables transfer learning: the pre-trained model can then be fine-tuned for specific #NLP tasks.

Sounds great! But what’s the catch?
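
For scale, the original BERT paper reports roughly 800M words from BooksCorpus and 2,500M words from English Wikipedia, counted in words rather than tokens:

$$
\underbrace{800\,\text{M}}_{\text{BooksCorpus}} + \underbrace{2{,}500\,\text{M}}_{\text{English Wikipedia}} = 3{,}300\,\text{M} \approx 3.3\ \text{billion words}
$$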

6/ #BERT is a large model. The cost of improved performance? Time, computational power, and 💰. Fortunately, numerous “foundation models” and smaller BERT-derived models have been released over the last few years.

Carbon demands are also an issue: arxiv.org/abs/2104.10350
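
Why “large”? A back-of-the-envelope sketch can count BERT’s weights from its published hyperparameters (BERT-BASE: 12 layers, hidden size 768, feed-forward size 3072; BERT-LARGE: 24 layers, 1024, 4096). It assumes the 30,522-token uncased English WordPiece vocabulary, 512 positions, and 2 segment types, and counts only the encoder plus pooler (no pre-training heads). The number of attention heads does not change the total, because heads split the hidden dimension rather than adding to it. `bert_param_count` is a hypothetical helper, not a library function.

```python
def bert_param_count(vocab_size=30522, hidden=768, layers=12, intermediate=3072,
                     max_positions=512, type_vocab=2):
    """Count weights in a BERT encoder plus pooler (no pre-training heads)."""

    def dense(n_in, n_out):
        # Fully connected layer: weight matrix plus bias vector.
        return n_in * n_out + n_out

    layer_norm = 2 * hidden  # learned scale and shift

    # Token, position, and segment embeddings share one LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm
    # Query, key, value, and output projections, followed by a LayerNorm.
    attention = 4 * dense(hidden, hidden) + layer_norm
    # Two-layer feed-forward block, followed by a LayerNorm.
    feed_forward = dense(hidden, intermediate) + dense(intermediate, hidden) + layer_norm
    # Dense layer applied to the [CLS] token's final representation.
    pooler = dense(hidden, hidden)

    return embeddings + layers * (attention + feed_forward) + pooler


base = bert_param_count()
large = bert_param_count(hidden=1024, layers=24, intermediate=4096)
print(f"BERT-BASE:  {base:,} parameters")
print(f"BERT-LARGE: {large:,} parameters")
```

The results, about 109.5M and 335.1M, line up with the ~110M and ~340M figures quoted in the BERT paper. At 4 bytes per 32-bit float, BERT-BASE’s weights alone take roughly 438 MB, before any training-time overhead.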

7/ Some of these are domain-specific models, pre-trained on text from a particular field. For example, BioBERT is further pre-trained on PubMed abstracts and PMC full-text articles (~21 BILLION words in total, including BERT’s original corpus). These models can then be fine-tuned for downstream tasks.

doi.org/10.1093/bioinf…
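
The ~21 billion figure is cumulative: BioBERT starts from BERT’s weights, so its pre-training text combines the general-domain corpus with the biomedical one. Using the per-corpus word counts reported in the BioBERT paper:

$$
\underbrace{2.5\,\text{B}}_{\text{Wikipedia}} + \underbrace{0.8\,\text{B}}_{\text{BooksCorpus}} + \underbrace{4.5\,\text{B}}_{\text{PubMed abstracts}} + \underbrace{13.5\,\text{B}}_{\text{PMC full text}} = 21.3\ \text{billion words}
$$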

8/ Wondering how #BERT-derived models are used in #radiology?

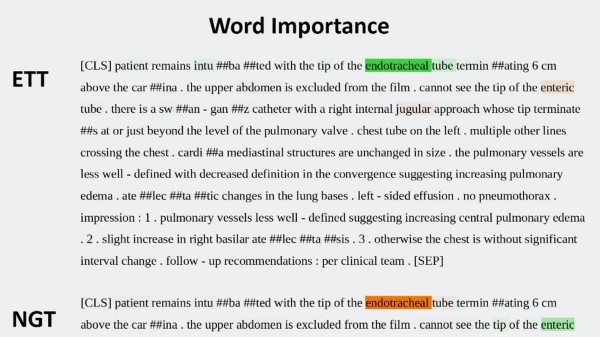

Start here with #RadBERT, the first set of foundation models trained on radiology reports, found to outperform baseline models on 3 #NLP tasks.

pubs.rsna.org/doi/10.1148/ry… @ChanNanHsu @UCSanDiego

9/ Accompanying commentary on the opportunities and risks of foundation models for #NLP in #radiology from @WalterWiggins @AliTejaniMD @DukeRadiology @UTSW_Radiology

doi.org/10.1148/ryai.2…

10/ Next, explore some of the recent use cases.

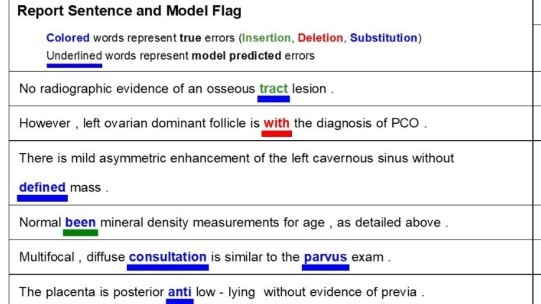

Researchers at @UCSFimaging applied #BERT to correct speech recognition errors in reports. BERT identified omissions, additions, and incorrect substitutions without access to the original audio.

pubs.rsna.org/doi/10.1148/ry… @DrDreMDPhD @sohn522
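
The three error types map onto the standard word-level edit operations. The study’s model flagged errors from the report text alone; this sketch is not its method, just a hypothetical helper (`classify_errors`) showing how omissions, additions, and substitutions can be labeled when a corrected reference report is available, for example to build or score a dataset. It uses a classic Levenshtein alignment over whitespace-separated words.

```python
def classify_errors(reference, transcript):
    """Label word-level omissions, additions and substitutions in `transcript`."""
    ref, hyp = reference.split(), transcript.split()
    n, m = len(ref), len(hyp)

    # dist[i][j] = fewest edits turning the first i reference words
    # into the first j transcript words.
    dist = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        dist[i][0] = i
    for j in range(m + 1):
        dist[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            mismatch = int(ref[i - 1] != hyp[j - 1])
            dist[i][j] = min(
                dist[i - 1][j] + 1,             # reference word dropped (omission)
                dist[i][j - 1] + 1,             # extra word inserted (addition)
                dist[i - 1][j - 1] + mismatch,  # match or substitution
            )

    # Walk back from the bottom-right cell to recover one optimal alignment.
    errors = []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and dist[i][j] == dist[i - 1][j - 1] + int(ref[i - 1] != hyp[j - 1]):
            if ref[i - 1] != hyp[j - 1]:
                errors.append(("substitution", ref[i - 1], hyp[j - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and dist[i][j] == dist[i - 1][j] + 1:
            errors.append(("omission", ref[i - 1], None))
            i -= 1
        else:
            errors.append(("addition", None, hyp[j - 1]))
            j -= 1
    errors.reverse()
    return errors


# Hypothetical report snippets: corrected reference vs. speech-recognition output.
print(classify_errors("there is no pneumothorax", "there is pneumothorax"))
print(classify_errors("no acute fracture of the left hip", "no acute fracture of the right hip"))
```

The first example shows why omissions matter clinically: losing a single “no” reverses the finding.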

11/ Accompanying commentary from @HCheungMD @aabajian @UW_RadRes on how transformer models could augment current dictation paradigms and improve the accuracy and efficiency of radiology communication.

pubs.rsna.org/doi/10.1148/ry…

12/ Researchers from @UTSW_Radiology @UTSW_RadRes assessed #BERT-derived models (RoBERTa, DeBERTa, DistilBERT, PubMedBERT) to automate and accelerate data annotation.

doi.org/10.1148/ryai.2… @AliTejaniMD

13/ Commentary from @johnrzech @columbiaimaging @NYUImaging: Using #BERT models to label radiology reports doi.org/10.1148/ryai.2… #transformers #AI

14/ #BERT models can predict oncologic outcomes from structured #radiology reports – but not yet at the level of radiologists

doi.org/10.1148/ryai.2… @MatthiasAFine @uniklink_hd @UKHD_radonc

15/ Thanks for YOUR attention! We've summed up the articles cited here: pubs.rsna.org/page/ai/blog/2…

Stay tuned for more on the latest, cutting-edge developments from @Radiology_AI !
