It's Friday and that means it's time for the @aifunhouse Week in Review!

As always, it's been a wild week in #AI!

DreamBooth, Instant NeRF, Make a Video, and more ... let's get in!

🤖🧵👇🏽

As always, it's been a wild week in #AI!

DreamBooth, Instant NeRF, Make a Video, and more ... let's get in!

🤖🧵👇🏽

1. First up, DreamBooth, a technique from Google Research originally applied to their tool Imagen, but generalizable to other models, allows for fine-tuning of text-to-image networks to allow generation of consistent characters across contexts and styles. dreambooth.github.io

3. Here's our thread from earlier this week:

https://twitter.com/aifunhouse/status/1575506574986166273

4. Want to dig into DreamBooth for your own (likely questionable) purposes? Github and Colabs yonder:

https://twitter.com/psuraj28/status/1575123562435956740

5. Next - in a rare departure from Large Language Models and text-to-image, NVidia's Instant NGP with instant NeRF dramatically reduces the amount of time required to infer 3D scenes from a 2D images. Think about this as uber-photogrammetry. github.com/NVlabs/instant…

6. Great how-to on getting that Instant NGP installed, compiled, and running here:

developer.nvidia.com/blog/getting-s…

developer.nvidia.com/blog/getting-s…

7. Additional tools in the repo allow for mesh generation, SDF, gigapixel image approximation, volume rendering, camera moves, interactive rendering with multisample DoF, slicing, and rad visualizations of what's happening under the hood in the neural net.

8. Next up, coming in hot from Meta AI is Make-A-Video, a paper and perhaps? a set of hosted tools (sign up if you're interested - shocker thanks Zuck 🙄) capable of text-to-video, image tweening, and video variation creation with pretty decent results.

makeavideo.studio

makeavideo.studio

9. Subjectively, the Make-A-Video output's quality is reminiscent of GAN image output ~2 years ago, which is in no way a small feat. The images are stable, have decent detail and resolution, and plausible lighting and subjects.

10. They do have some GAN-like undesirable artifacts as well, including harsh edges, lack of definition in detailed areas, and a crushed color palette. Lots of room for improvement but impressive set of early results in what is sure to be the next frontier for image generation.

11. Bonus sample from Make-A-Video: "A golden retriever eating ice cream on a beautiful tropical beach at sunset, high resolution"

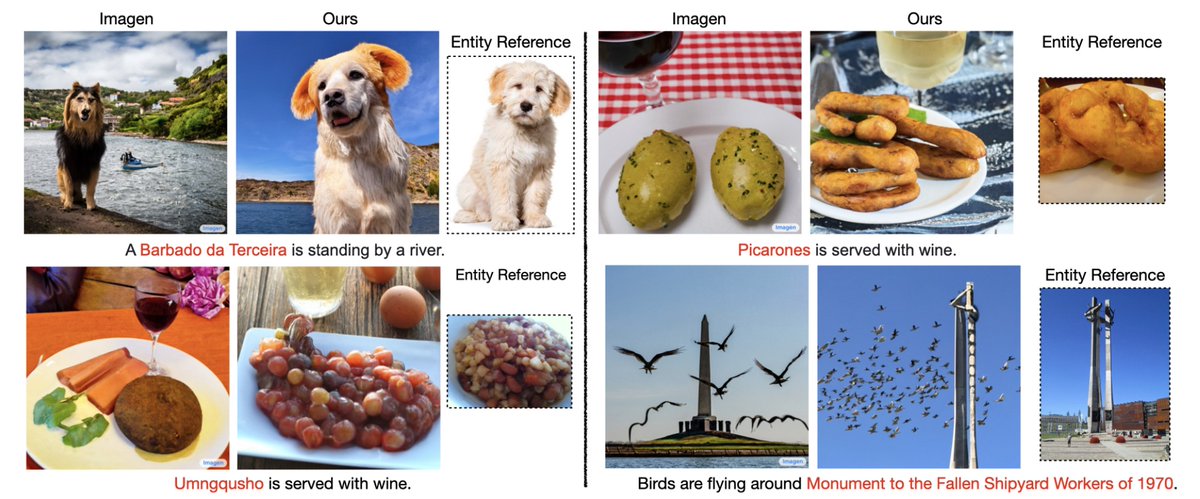

12. Next up - in a similar vein to DreamBooth we have Re-Imagen, which acheives now SoTA results in image retrieval for "even for rare or unseen entities", even with the challenging COCO and WikiImages datasets:

https://twitter.com/_akhaliq/status/1575544270811004928

13. According to the paper, Re-Imagen outperforms StableDiffusion and DALL-E 2 in terms of faithfulness and photorealism with human raters, mostly in low-frequency entities.

14. You can think of this as a one-shot learning implementation of the sort of thing DreamBooth and Textual Inversion are capable of, and the results are indeed impressive.

15. That's it for the @aifunhouse Week in Review!

What were your favorite announcements, demos, or papers this week? What did we miss?

What were your favorite announcements, demos, or papers this week? What did we miss?

16. Did you love this thread? Of course you did! You're no dummy and you have a nice smile!

Follow @aifunhouse for more tips, tutorials, tricks, and roundups from this Cambrian Explosion of AI crazy!

Follow @aifunhouse for more tips, tutorials, tricks, and roundups from this Cambrian Explosion of AI crazy!

17. RT this thread to let your friends know where the best roundups can be found (it's here BTW).

• • •

Missing some Tweet in this thread? You can try to

force a refresh