Today we announced a new feature on Pixel 7/Pro and @GooglePhotos called "Unblur". It's the culmination of a year of intense work by our amazing teams. Here's a short thread about it.

1/n

#MadeByGoogle #fixedonpixel #Pixel7 #PhotoUnblur

@GooglePixel_US

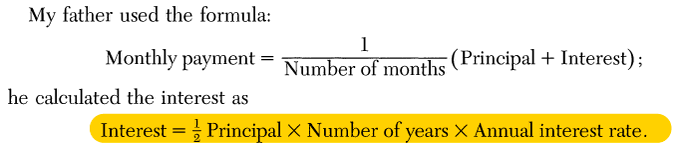

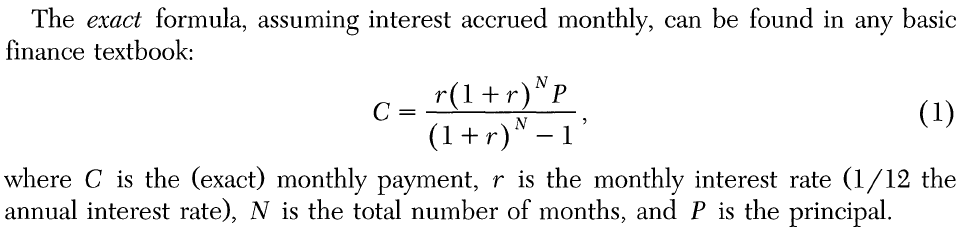

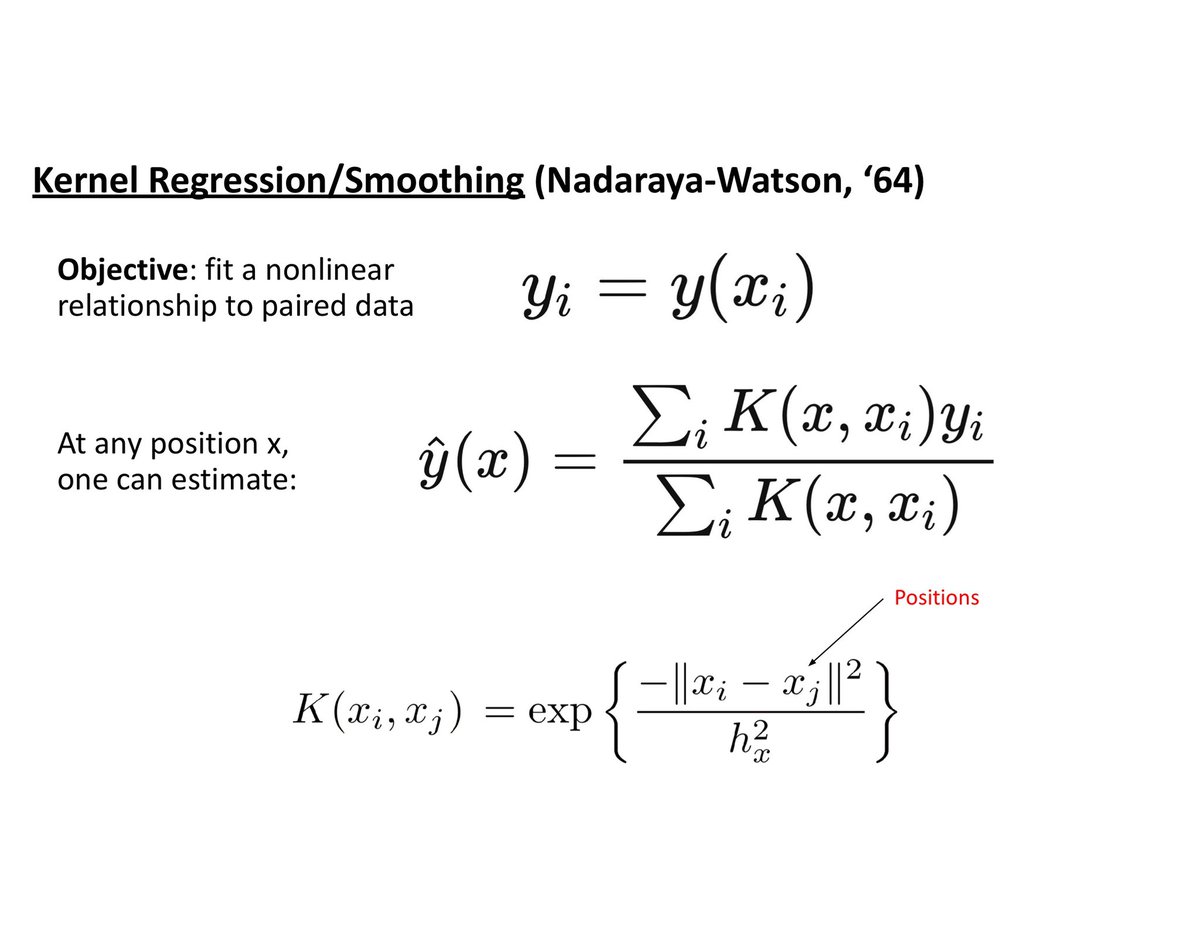

Last yr we brought two new editor functions to Google Photos: Denoise & Sharpen. These could improve the quality of most images that are mildly degraded. With Photo Unblur we raise the stakes in 2 ways:

First, we address blur & noise together w/ a single touch of a button.

2/n

Second, we're addressing much more challenging scenarios where degradations are not so mild. For any photo, new or old, captured on any camera, Photo Unblur identifies and removes significant motion blur, noise, compression artifacts, and mild out-of-focus blur.

3/n

Photo Unblur works to improve the quality of the 𝘄𝗵𝗼𝗹𝗲 photo. And if faces are present in the photo, we make additional, more specific, improvements to faces on top of the whole-image enhancement.

4/n

One of the fun things about Photo Unblur is that you can go back to your older pictures that may have been captured on legacy cameras or older mobile devices, or even scanned from film, and bring them back to life.

5/n

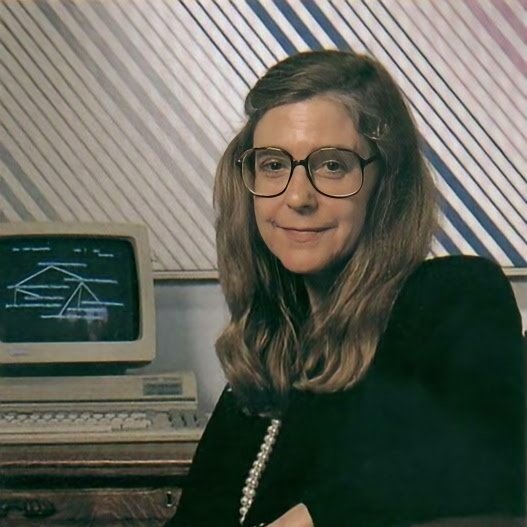

It's also fun to go way back in time (like the 70s and 80s!) and enhance some iconic images like these photos of pioneering computer scientist Margaret Hamilton and basketball legend Bill Russell.

6/n

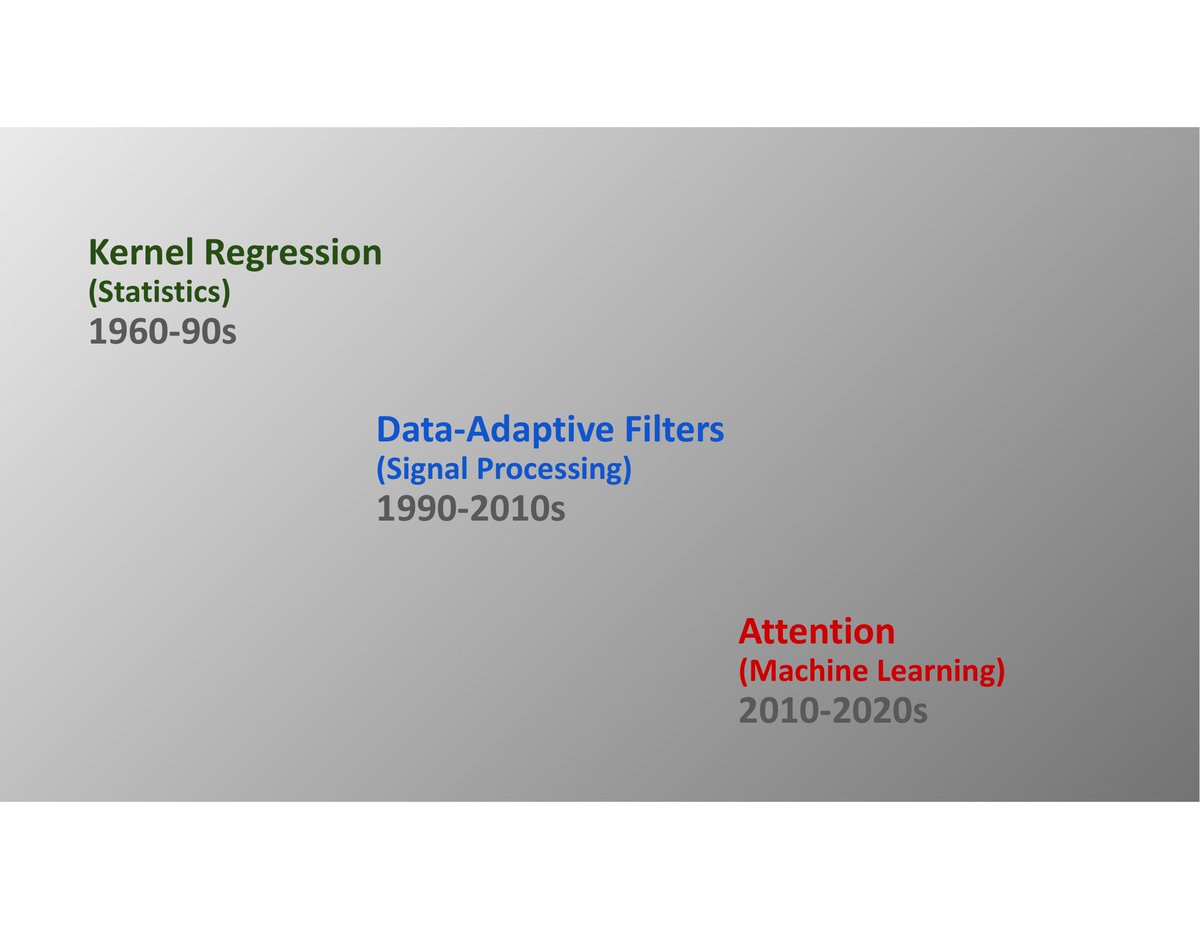

Recovery from blur & noise is a complex & long-standing problem in computational imaging. With Photo Unblur, we're bringing a practical, easy-to-use solution to a challenging technical problem, right to the palm of your hand.

w/ @2ptmvd @navinsarmaphoto @sebarod & many others

n/n

Bonus: Once you have a picture enhanced with #PhotoUnblur, applying other effects on top can make the result even more dramatic. For instance, here I've also blurred the background and tweaked some color and contrast.
