OK, the #googlecloudnext announcements are coming out, so let's explore one in particular.

Cloud Workstations are in public preview! This isn't your parent's virtual desktop. It's a fast, flexible dev environment with security in mind. Let's explore together in a 🧵 ...

Browser-based IDEs are a thing now. Googlers do a lot of their engineering work in them, and the approach is ready for prime time.

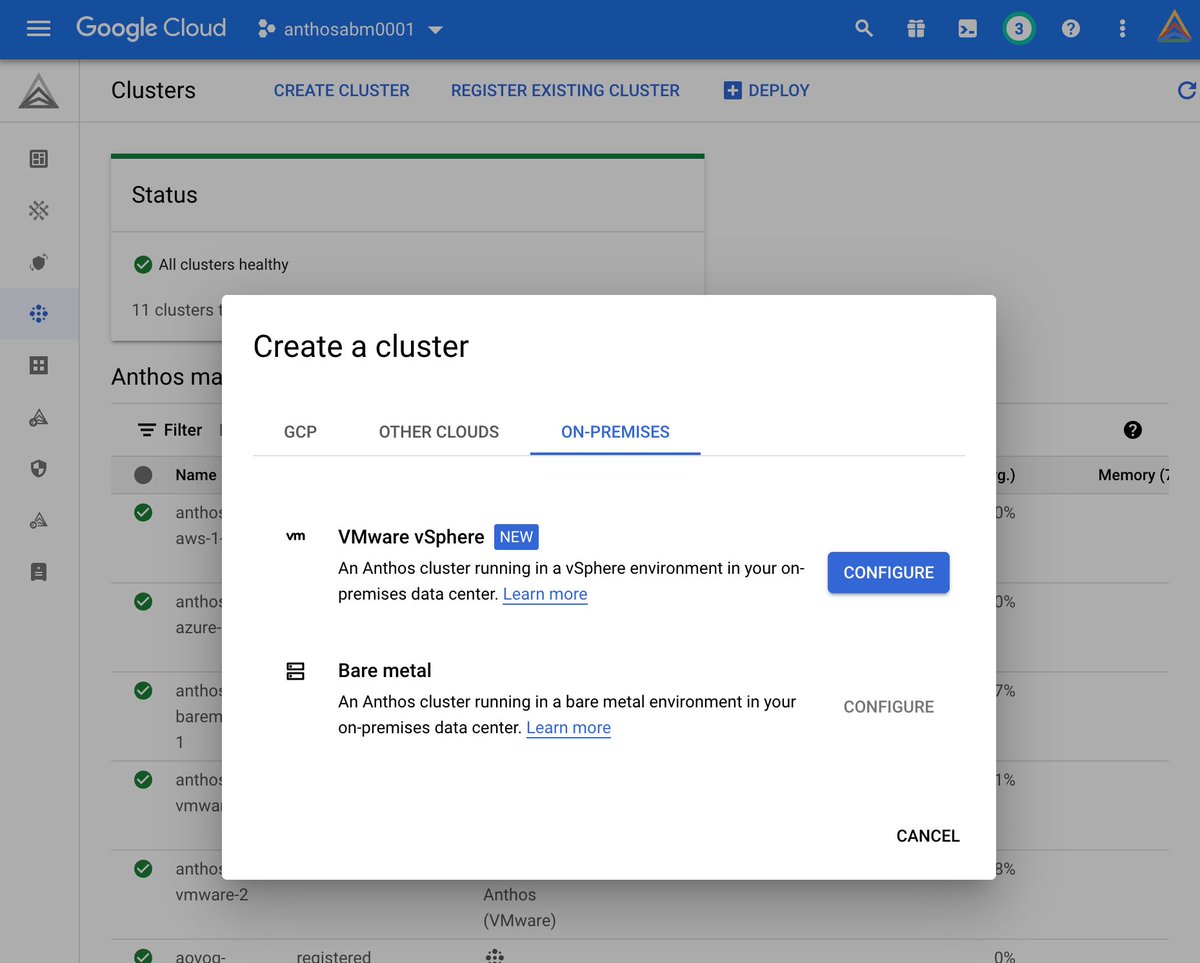

To start with @googlecloud Cloud Workstations, you create a cluster. The cluster manages workstation lifecycle and networking. I can place clusters around the world, close to my developers.

Once I have a cluster, I create a Workstation configuration. You can imagine your Platform team or Ops team setting up some default configurations.

One big part of the configuration is resource assignment. You can have some MASSIVE Workstations, which could be handy for compute- or memory-intensive app development and testing.

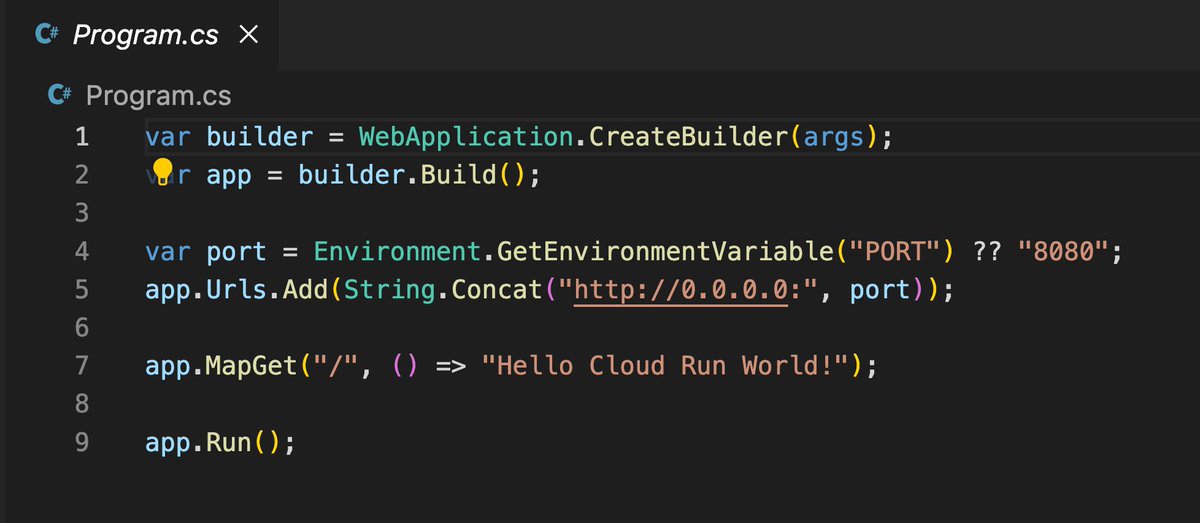

Here's where it gets fun. We've partnered with @jetbrains to offer any of their fantastic IDEs in the environment. Or use vanilla @code. Or bring your own setup. It's just a container.

I create an individual Workstation instance and then start it up. Once it's running, I have a few options for how to connect to it.
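To make the cluster → configuration → workstation hierarchy concrete, here is a toy Python model of the flow described above. It is not the Cloud Workstations API: the class names, fields (`region`, `vcpus`, `memory_gb`, `container_image`), and values are hypothetical, chosen only to mirror the thread (clusters near developers, configurations that set resources and a container image, instances you start).

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    STOPPED = "stopped"
    RUNNING = "running"


@dataclass
class Cluster:
    # Manages lifecycle and networking; placed in a region near developers.
    name: str
    region: str


@dataclass
class WorkstationConfig:
    # Defaults a platform or ops team might set up: resources plus an IDE image.
    name: str
    cluster: Cluster
    vcpus: int
    memory_gb: int
    container_image: str  # the IDE environment is "just a container"


@dataclass
class Workstation:
    # An individual developer's instance, created from a configuration.
    name: str
    config: WorkstationConfig
    state: State = State.STOPPED

    def start(self) -> None:
        self.state = State.RUNNING


# Hypothetical names and values, for illustration only.
cluster = Cluster(name="dev-cluster", region="us-central1")
config = WorkstationConfig(
    name="big-box",
    cluster=cluster,
    vcpus=32,
    memory_gb=128,
    container_image="example.com/my-ide:latest",
)
ws = Workstation(name="my-workstation", config=config)
ws.start()
print(ws.name, ws.state.value, ws.config.cluster.region)
```

The point of the model is the ownership chain: many workstations can share one configuration, and many configurations can live in one cluster, so a platform team sets defaults once and developers just create and start instances.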

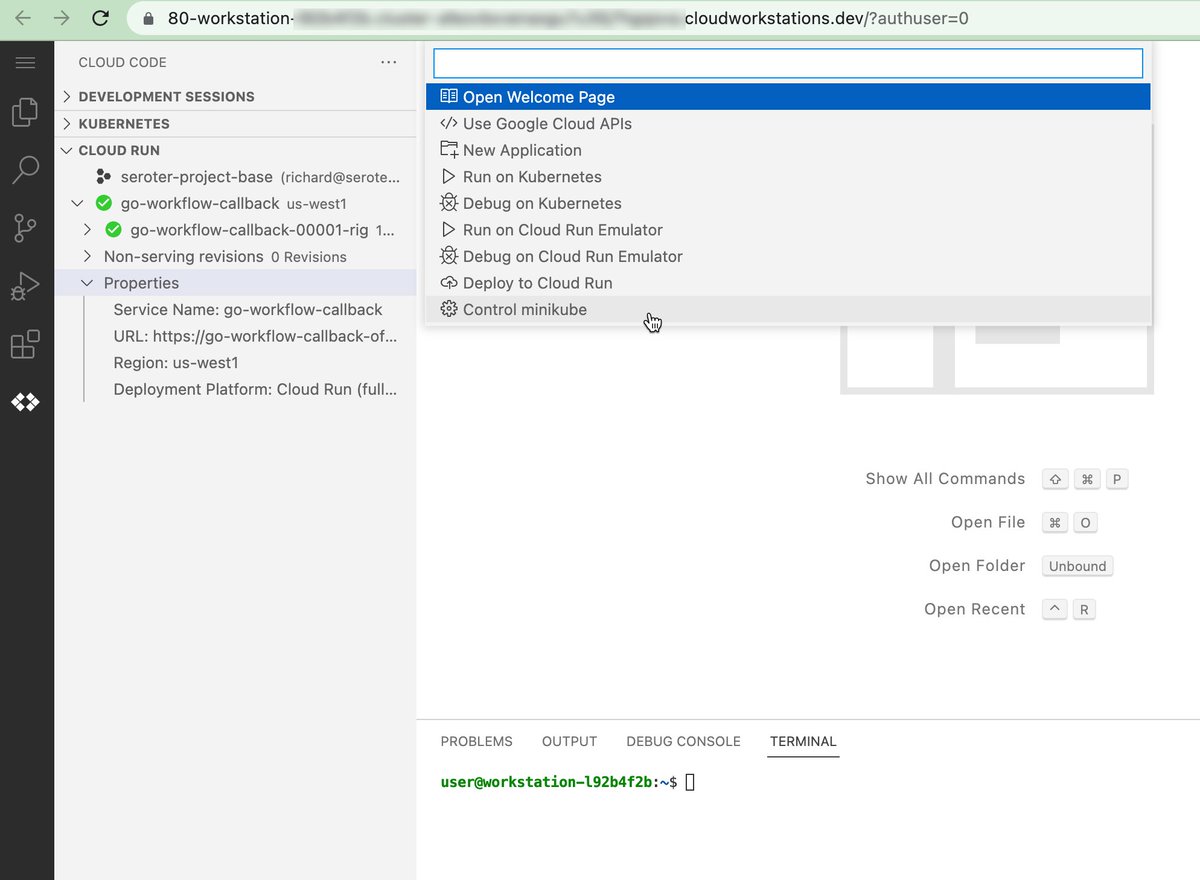

Here, I jumped right into an instance from the @googlecloud Console. It loaded so fast I didn't even realize it was already running. As you can see, I have the full @code experience here, and I can install extensions as I see fit.

This isn't just a code editor. That's no fun. This is a full compute environment. It's loaded with @googlecloud Code, so I can easily create local minikube clusters, and much more. Develop, compile, test, and do whatever you need to do.

Every human on Earth with a Google account can access a free Cloud Shell at shell.cloud.google.com. Cloud Workstations take it a step further.

Get secure, durable, managed Workstations with premium IDEs and flexible resource allocations. Read the docs and try it out!

cloud.google.com/workstations