Today marks an extremely exciting day for fans of #nbdev, I'm releasing a new project, "nbdev-extensions"! This pypi package will contain features myself and others have thought of and I've brought to life in the nbdev framework for everyone to try!

muellerzr.github.io/nbdev-extensio…

1/5

muellerzr.github.io/nbdev-extensio…

1/5

The first extension is a `new_nb` command. This will quickly generate a new blank template notebook for you to immediately dive into as you're exploring nbdev, and is fully configurable for how your notebook's content should be:

2/5

2/5

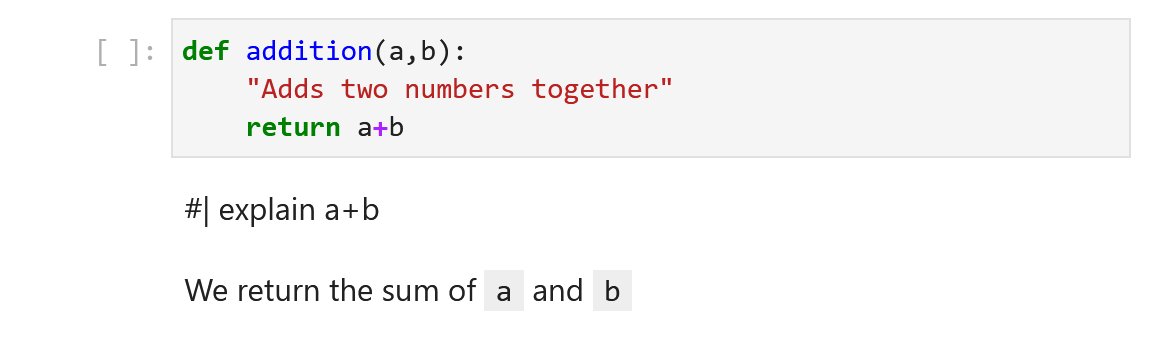

The second (and my favorite) extension is a new note annotation tool I'm calling "Code Notes". Take a code cell above, and in markdown cells below you write notes on particular sections of that code. The documentation will reflect these notes in a beautiful table:

3/5

3/5

This allows you to enter more of a flow state, your code above can still be clear and how you originally wrote it, but now your documentation (or notes) can detail whatever you'd like in further explanation, it's a win-win!

4/5

4/5

If you're interested in #nbdev, please try this library out! And if you do like it, make sure to give it a ⭐ on Github, and myself (@TheZachMueller) a follow to keep up with the latest and greatest extensions I come up with 😄

github.com/muellerzr/nbde…

5/5

github.com/muellerzr/nbde…

5/5

• • •

Missing some Tweet in this thread? You can try to

force a refresh